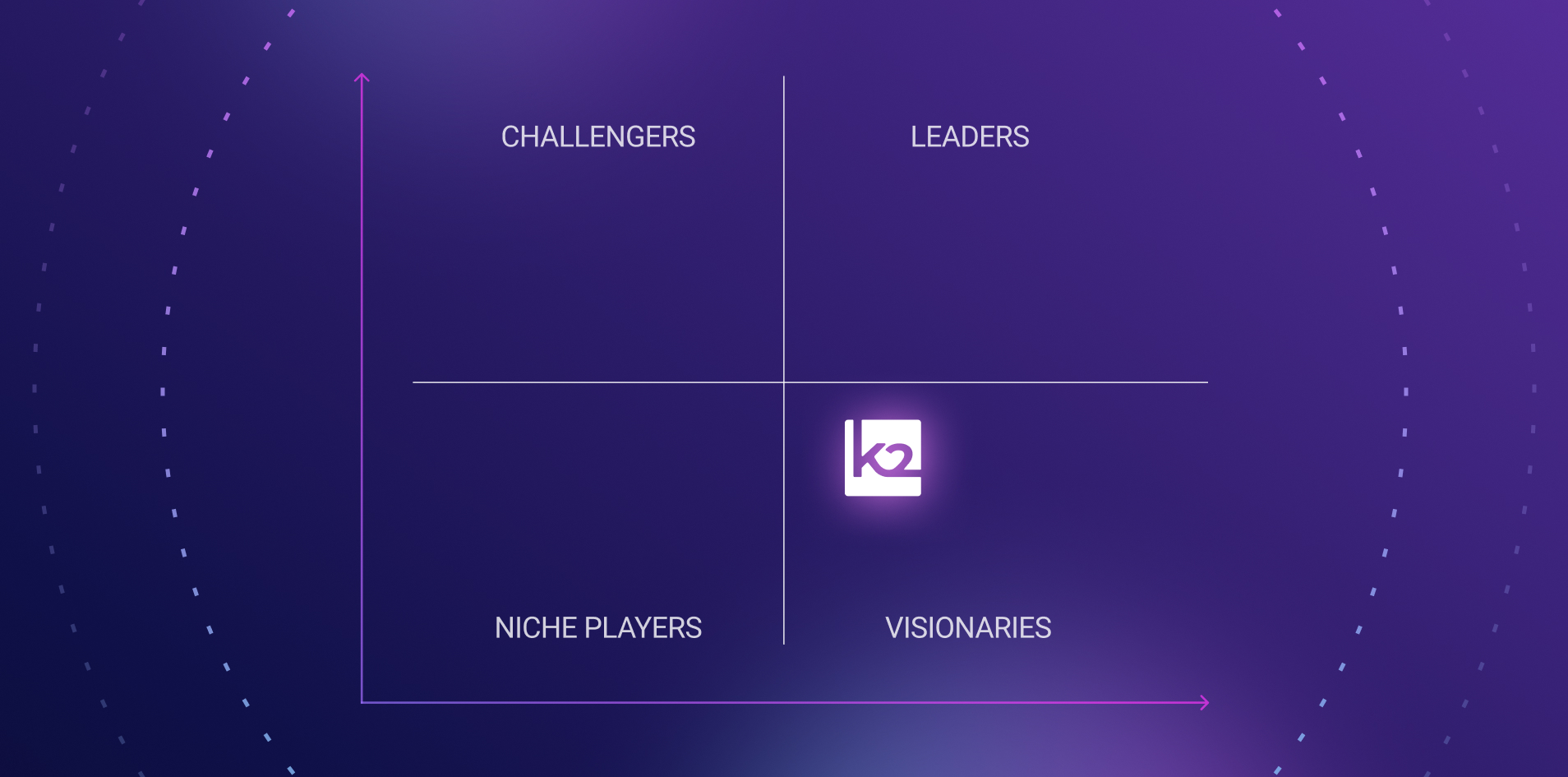

Gartner names K2view a Visionary in its Magic Quadrant for Data Integration Tools for the 3rd year in a row and rates us higher in our ability to execute.

- Platform

-

Solutions

Data Privacy and Compliance

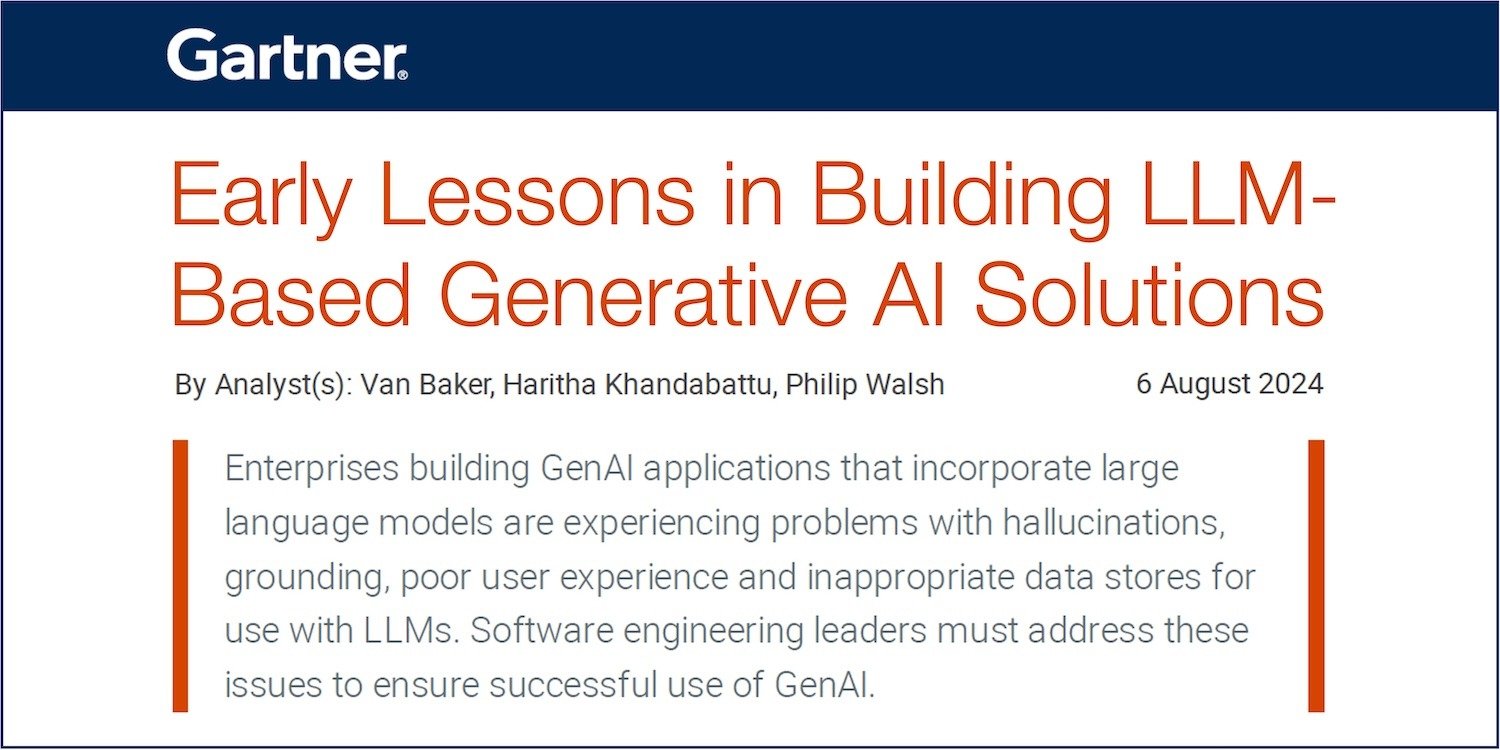

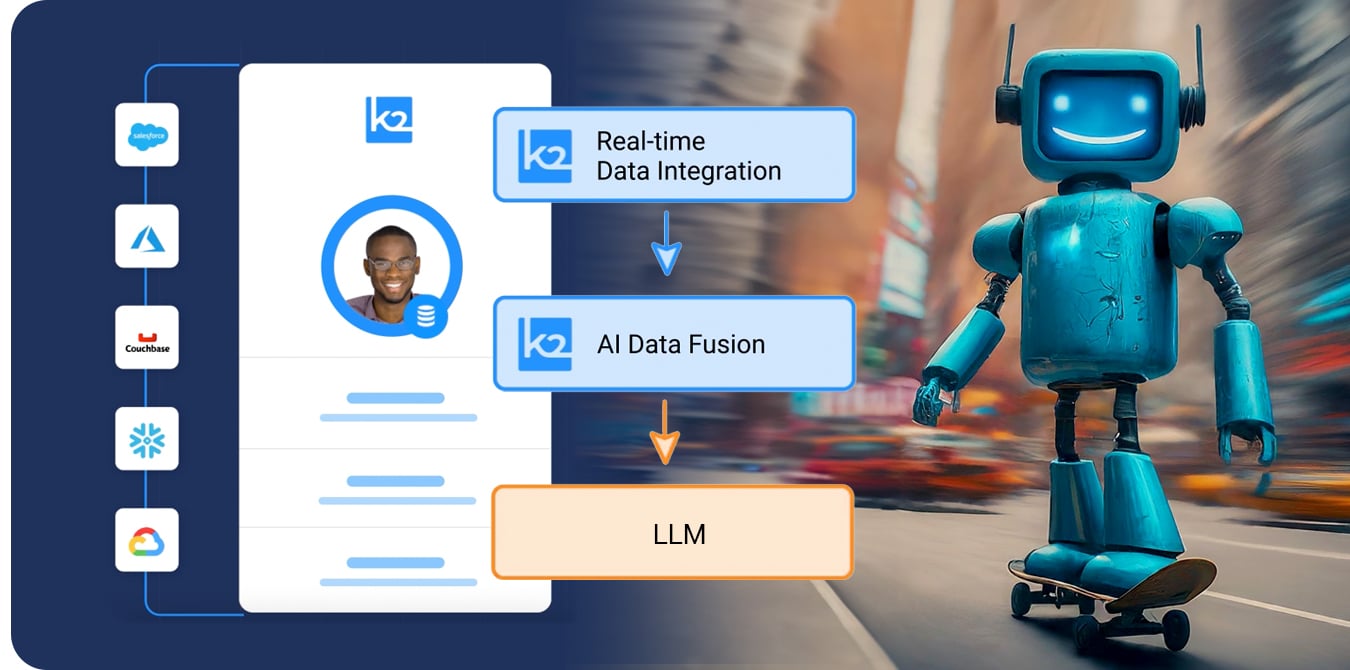

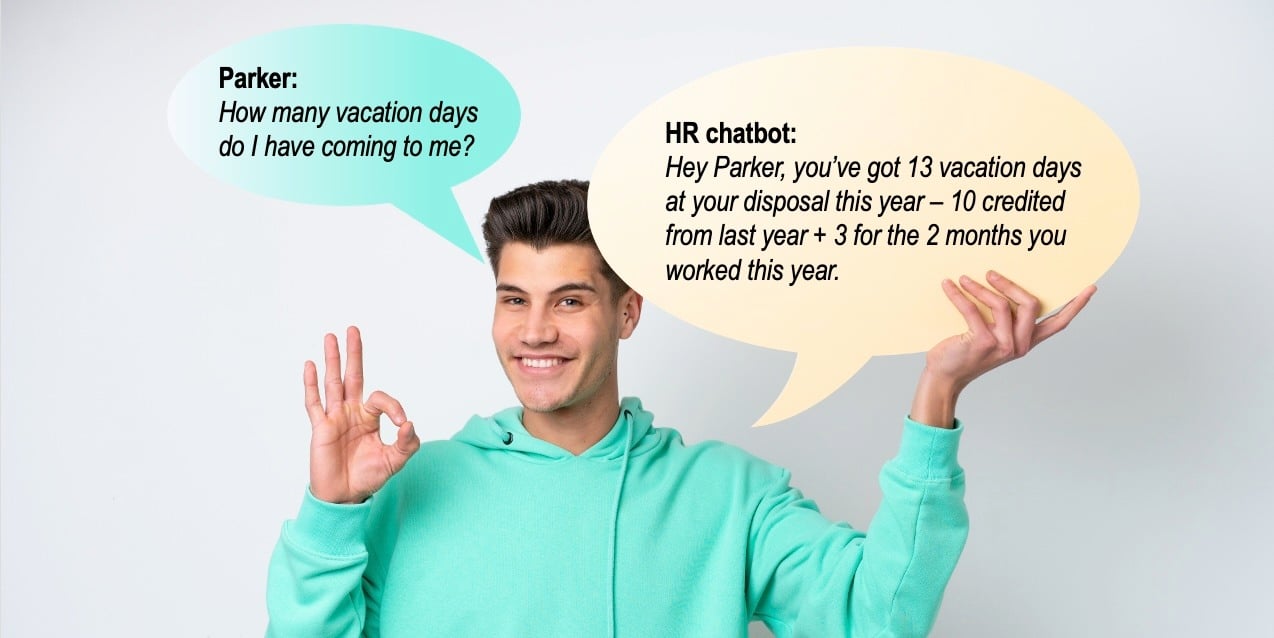

Data for Generative AI

Data Integration

-

Company

Company

Reach Out

News Updates

- K2view Recognized as Visionary for Third Consecutive Year in 2025 Gartner® Magic Quadrant™ for Data Integration Tools

- K2view secures $15M to fuel the next generation of Agentic AI, powered by AI-ready data

- Rocket Software and K2view partner to accelerate mainframe modernization

- K2view Data Agent Builder: No-code tool for agentic AI app development

-

Resources

Resources

Education & Training