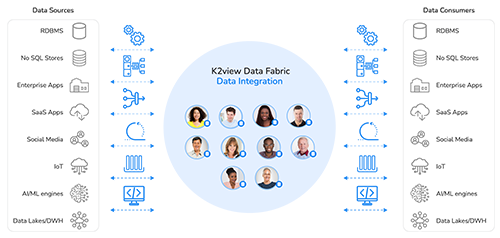

All data integration and delivery methods

From any source, to any target

Integrate and deliver data from any data source – on premises or in the cloud – to any data consumer, using any data delivery method: bulk ETL, data streaming, data virtualization, log-based change data capture (CDC), message-based data integration, Reverse ETL, and APIs.

Robust, reusable data integration

Entity-based data integration

A data product approach

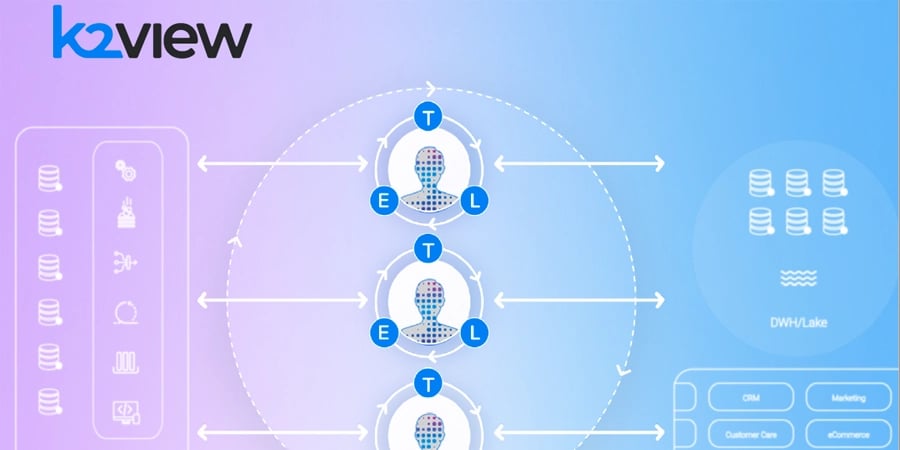

Ingest and deliver data by business entities

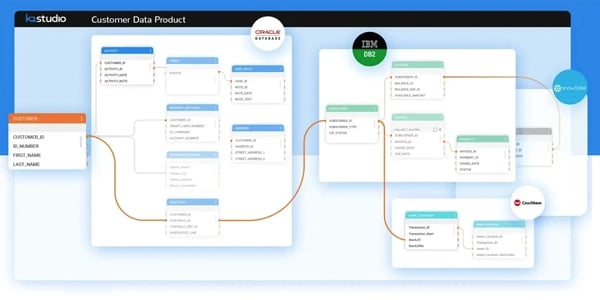

K2view takes a data product approach to data integration.

Data engineers create and manage reusable data pipelines that integrate, process, and deliver data by business entities – customers, employees, orders, loans, etc.

- The data for each business entity is ingested and organized into its own high-performance Micro-Database™.

- The schema for a business entity is auto-discovered from the underlying source systems.

- Data masking, transformation, enrichment, and orchestration are applied – in flight – to make entity data accessible to authorized data consumers, while complying with data privacy and security regulations.
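The entity-based approach can be illustrated with a minimal Python sketch. This is not K2view's API or its Micro-Database implementation. It assumes two hypothetical source extracts (`CRM_ROWS`, `BILLING_ROWS`) keyed by `customer_id`, uses one in-memory SQLite database per customer as a stand-in for a per-entity store, and applies a hypothetical `mask_email` rule while the data is loaded. The schema is hard-coded here rather than auto-discovered.

```python
import sqlite3
from collections import defaultdict

# Hypothetical source extracts from two systems; both carry the
# business-entity key (customer_id).
CRM_ROWS = [
    {"customer_id": 1, "name": "Ada Lovelace", "email": "ada@example.com"},
    {"customer_id": 2, "name": "Alan Turing", "email": "alan@example.com"},
]
BILLING_ROWS = [
    {"customer_id": 1, "invoice_id": 101, "amount": 250.0},
    {"customer_id": 1, "invoice_id": 102, "amount": 75.5},
    {"customer_id": 2, "invoice_id": 201, "amount": 120.0},
]


def mask_email(email: str) -> str:
    """Hypothetical masking rule: keep the first character and the domain."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain


def ingest_by_entity(crm_rows, billing_rows):
    """Group rows by customer, then load each customer into its own small
    in-memory SQLite database, masking PII in flight (during the load)."""
    grouped = defaultdict(lambda: {"crm": [], "billing": []})
    for row in crm_rows:
        grouped[row["customer_id"]]["crm"].append(row)
    for row in billing_rows:
        grouped[row["customer_id"]]["billing"].append(row)

    stores = {}
    for customer_id, parts in grouped.items():
        db = sqlite3.connect(":memory:")
        db.execute("CREATE TABLE customer (name TEXT, email TEXT)")
        db.execute("CREATE TABLE invoice (invoice_id INTEGER, amount REAL)")
        db.executemany(
            "INSERT INTO customer VALUES (?, ?)",
            [(r["name"], mask_email(r["email"])) for r in parts["crm"]],
        )
        db.executemany(
            "INSERT INTO invoice VALUES (?, ?)",
            [(r["invoice_id"], r["amount"]) for r in parts["billing"]],
        )
        stores[customer_id] = db
    return stores


stores = ingest_by_entity(CRM_ROWS, BILLING_ROWS)
print(stores[1].execute("SELECT email FROM customer").fetchone())  # ('a***@example.com',)
print(stores[1].execute("SELECT SUM(amount) FROM invoice").fetchone())  # (325.5,)
```

Because each customer's data sits in its own store, a query for one customer touches only that customer's rows, and consumers only ever see the masked email.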

Easy and lightning-fast

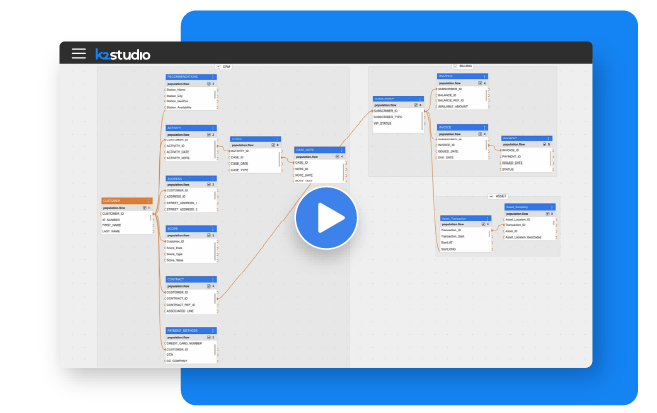

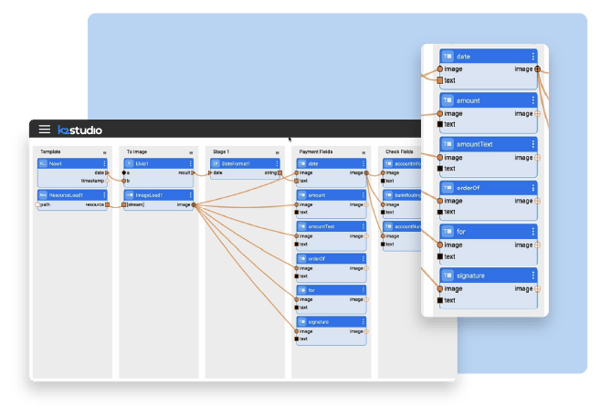

No-code data integration and transformation

K2view data integration includes no-code tooling for defining, testing, and debugging data transformations, from simple to complex. Data transformation is applied in the context of a business entity, which keeps performance lightning fast and supports high-scale data preparation and delivery.

- Basic data transformations: data type conversions, string manipulations, and numerical calculations.

- Intermediate data transformations: lookup/replace using reference files, aggregations, summarizations, and matching.

- Advanced data transformations: complex parsing, unstructured data processing, combining data and content sources, text mining, correlations, custom enrichment functions, data validation, and cleansing.
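The three tiers can be illustrated, roughly, in plain Python. This sketch does not use K2view's no-code tooling. The order data, the `COUNTRY_LOOKUP` reference table, and the helper names `basic`, `intermediate`, and `validate` are all hypothetical, and the advanced tier is represented only by a simple validation and cleansing step.

```python
from collections import defaultdict
from decimal import Decimal, InvalidOperation

# Hypothetical reference file contents: country code -> country name.
COUNTRY_LOOKUP = {"US": "United States", "DE": "Germany", "IL": "Israel"}

# Hypothetical raw order rows, all fields arriving as strings.
raw_orders = [
    {"order_id": "1001", "customer": "  ada lovelace ", "country": "us", "amount": "19.99", "qty": "3"},
    {"order_id": "1002", "customer": "ALAN TURING", "country": "DE", "amount": "5.00", "qty": "2"},
    {"order_id": "1003", "customer": "grace hopper", "country": "us", "amount": "abc", "qty": "1"},
]


def basic(row):
    """Basic tier: type conversion, string manipulation, numeric calculation."""
    price = Decimal(row["amount"])  # raises InvalidOperation on non-numeric text
    qty = int(row["qty"])           # raises ValueError on non-integer text
    return {
        "order_id": int(row["order_id"]),
        "customer": row["customer"].strip().title(),
        "country": row["country"].strip().upper(),
        "total": price * qty,
    }


def intermediate(rows):
    """Intermediate tier: lookup/replace against reference data, then aggregate."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    for row in rows:
        # Unknown codes are kept as-is rather than dropped.
        row["country"] = COUNTRY_LOOKUP.get(row["country"], row["country"])
        totals[row["country"]] += row["total"]
    return dict(totals)


def validate(raw_rows):
    """Validation/cleansing: keep rows that convert cleanly, set the rest aside."""
    clean, rejected = [], []
    for raw in raw_rows:
        try:
            clean.append(basic(raw))
        except (InvalidOperation, ValueError):
            rejected.append(raw["order_id"])
    return clean, rejected


clean, rejected = validate(raw_orders)
print(rejected)             # ['1003']
print(intermediate(clean))  # {'United States': Decimal('59.97'), 'Germany': Decimal('10.00')}
```

`Decimal` is used instead of `float` so that monetary totals such as 19.99 × 3 come out exact.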

Linear scalability on commodity hardware

Real-time performance at enterprise scale

K2view Data Product Platform scales linearly to manage hundreds of concurrent pipelines and billions of Micro-Databases, in support of high-scale operational and analytical data integration workloads.

- In-memory computing, patented Micro-Database technology, and a distributed architecture are integrated to deliver unmatched source-to-target data performance.

- K2view can be deployed on premises or in the cloud, in support of a data mesh, data fabric, or data hub architecture.

- Data pipeline monitoring and control are built in, with observability provided at the business entity level.

Key features and capabilities

Data integration tools for any task

No-code/low-code

No-code and low-code tooling to support varying levels of data engineering expertise

Any data sources

Connectors to hundreds of data sources and applications

Any delivery method

Multiple data delivery styles, including CDC, ETL, streaming, and messaging

Data virtualization

Data virtualization support provides an easy-to-access logical abstraction layer

Data transformation

Data transformation of unprecedented sophistication

Auto-discovery

Metadata discovery and data classification accelerate implementation

Data quality

Data quality is enforced in-flight, through customizable business rules

Deploy anywhere

Support for cloud, hybrid, or on-premises data integration
