DATA PIPELINING VIA GENERATIVE DATA PRODUCTS

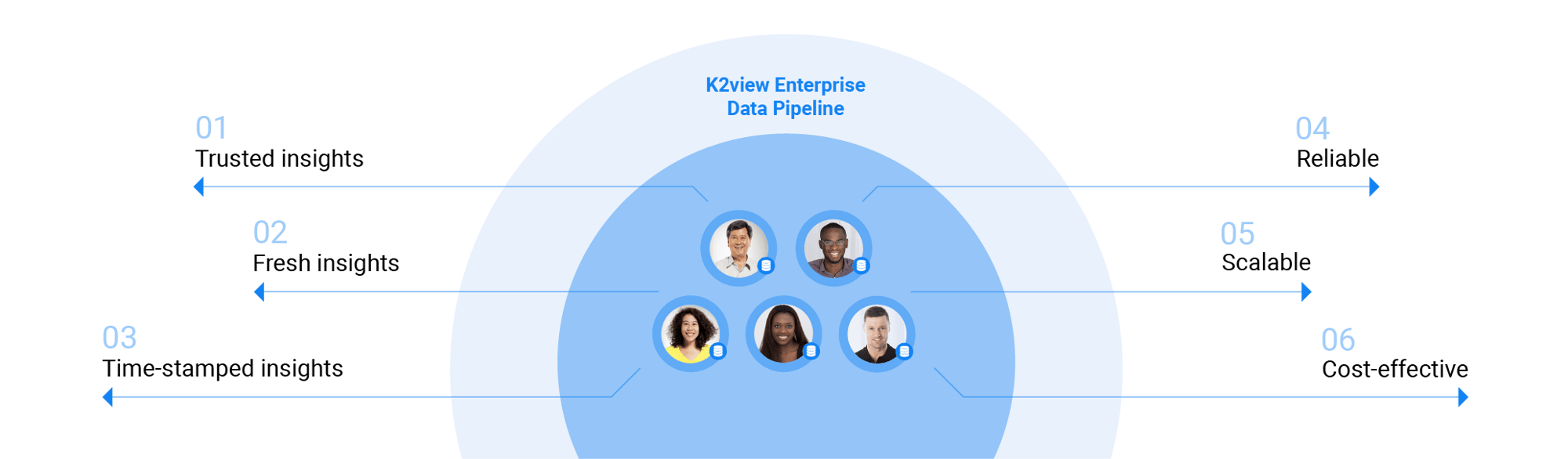

Complete, compliant, current data – every time

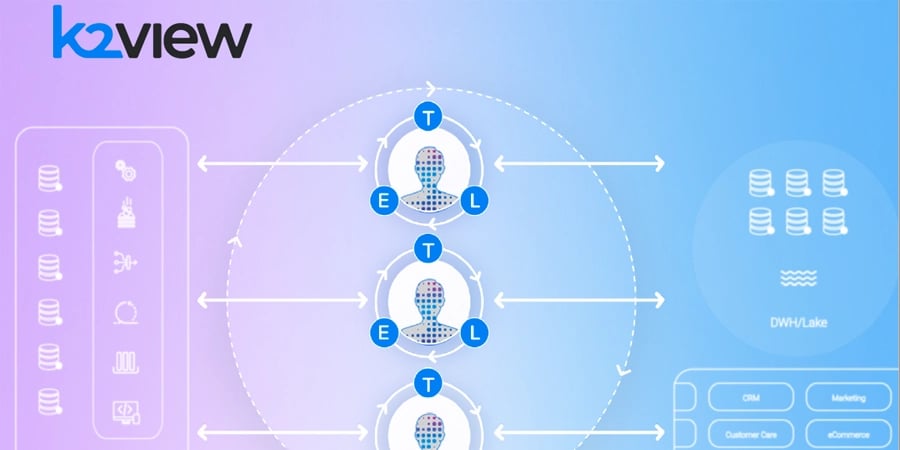

A generative data product approach to ETL/ELT ensures high-performance data pipelines, data integrity, and business agility. Data engineers can prepare and deliver fresh, trusted data from all sources to all targets, at AI speed and scale.

Prepare and deliver data with generative data products

OPERATIONALIZING DATA PREPARATION AND DELIVERY

Accelerate time to insights

Data pipeline ingestion, processing, and transformation flows can be configured, tested, and packaged as data products. These data products can then be reused to operationalize data preparation and accelerate time to insights.

-

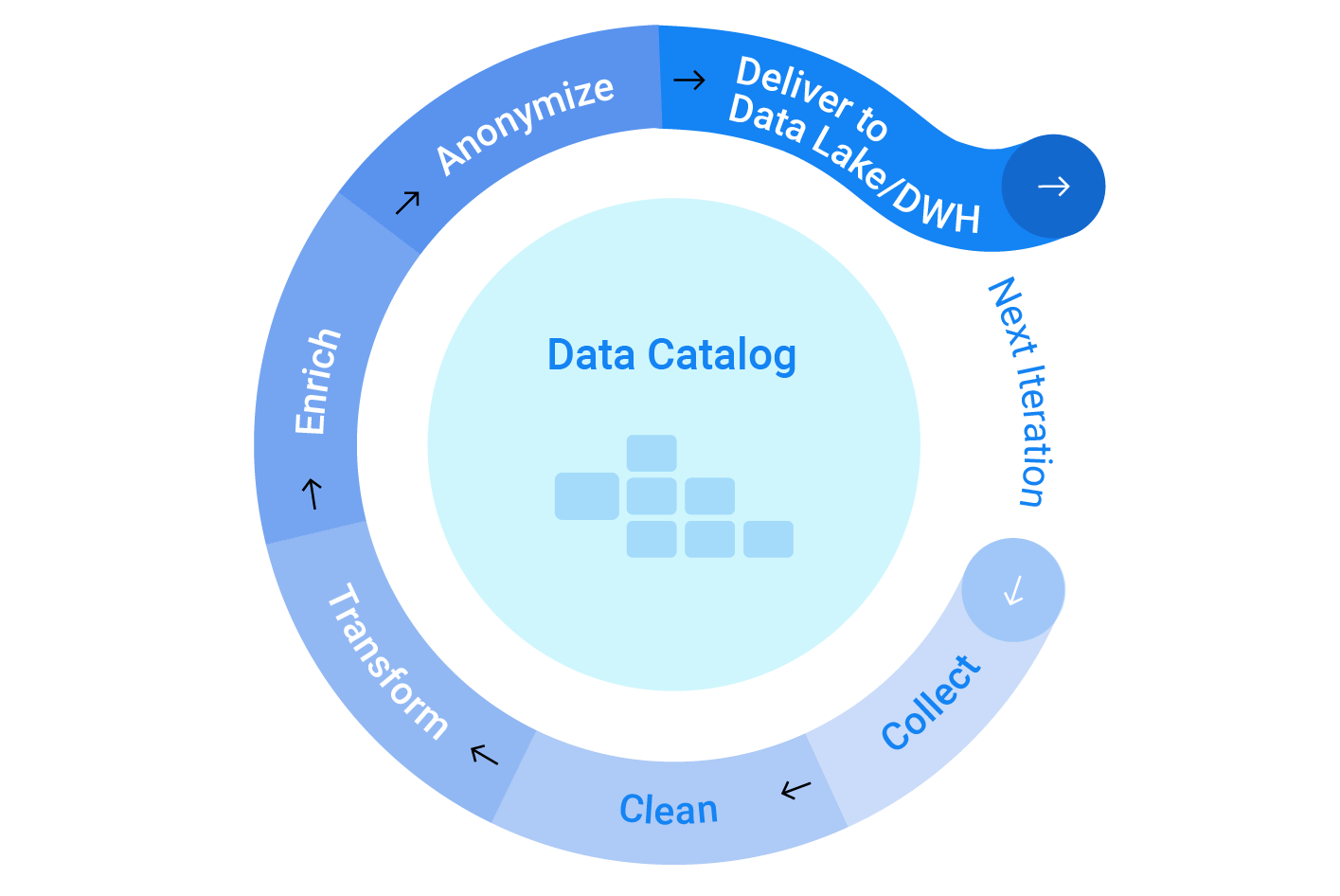

Configure your data ingestion and delivery pipelines – cleansing, transforming, enriching, and governing the data – as needed.

-

Support all your operational data sources and analytical data targets with one solution.

-

Automate the creation of data ingestion logic using the data product schema.
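As a rough illustration of what generating ingestion logic from a schema can mean, the sketch below derives a parameterized upsert statement from a small table description. The `TableSchema` layout, the `customer` table, and the `build_upsert` helper are hypothetical and are not K2view's API; the generated SQL uses the SQLite/PostgreSQL `ON CONFLICT` upsert syntax and is exercised against an in-memory SQLite database.

```python
import sqlite3
from dataclasses import dataclass


@dataclass(frozen=True)
class TableSchema:
    """Hypothetical description of one table in a data product schema."""
    name: str
    columns: tuple[str, ...]
    primary_key: tuple[str, ...]


def build_upsert(table: TableSchema) -> str:
    """Generate a parameterized upsert (SQLite/PostgreSQL ON CONFLICT syntax).

    Table and column names are interpolated directly, so they must come from a
    trusted schema, never from user input. Row values are bound as parameters.
    """
    cols = ", ".join(table.columns)
    placeholders = ", ".join(f":{c}" for c in table.columns)
    non_key = [c for c in table.columns if c not in table.primary_key]
    updates = ", ".join(f"{c} = excluded.{c}" for c in non_key)
    conflict = ", ".join(table.primary_key)
    sql = f"INSERT INTO {table.name} ({cols}) VALUES ({placeholders}) ON CONFLICT ({conflict})"
    # If every column is part of the key, there is nothing left to update.
    return sql + (f" DO UPDATE SET {updates}" if updates else " DO NOTHING")


# Usage: the same generated statement handles both the first insert and a later change.
customer = TableSchema("customer", ("customer_id", "name", "email"), ("customer_id",))
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
stmt = build_upsert(customer)
conn.execute(stmt, {"customer_id": 1, "name": "Ada", "email": "ada@example.com"})
conn.execute(stmt, {"customer_id": 1, "name": "Ada L.", "email": "ada@example.com"})
print(conn.execute("SELECT * FROM customer").fetchall())  # [(1, 'Ada L.', 'ada@example.com')]
```

Generating the statement from the schema, rather than hand-writing it per table, means a schema change only has to be made in one place.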

DATA GOVERNANCE: FEDERATED OR CENTRALIZED

Keep your data safe and well-governed

-

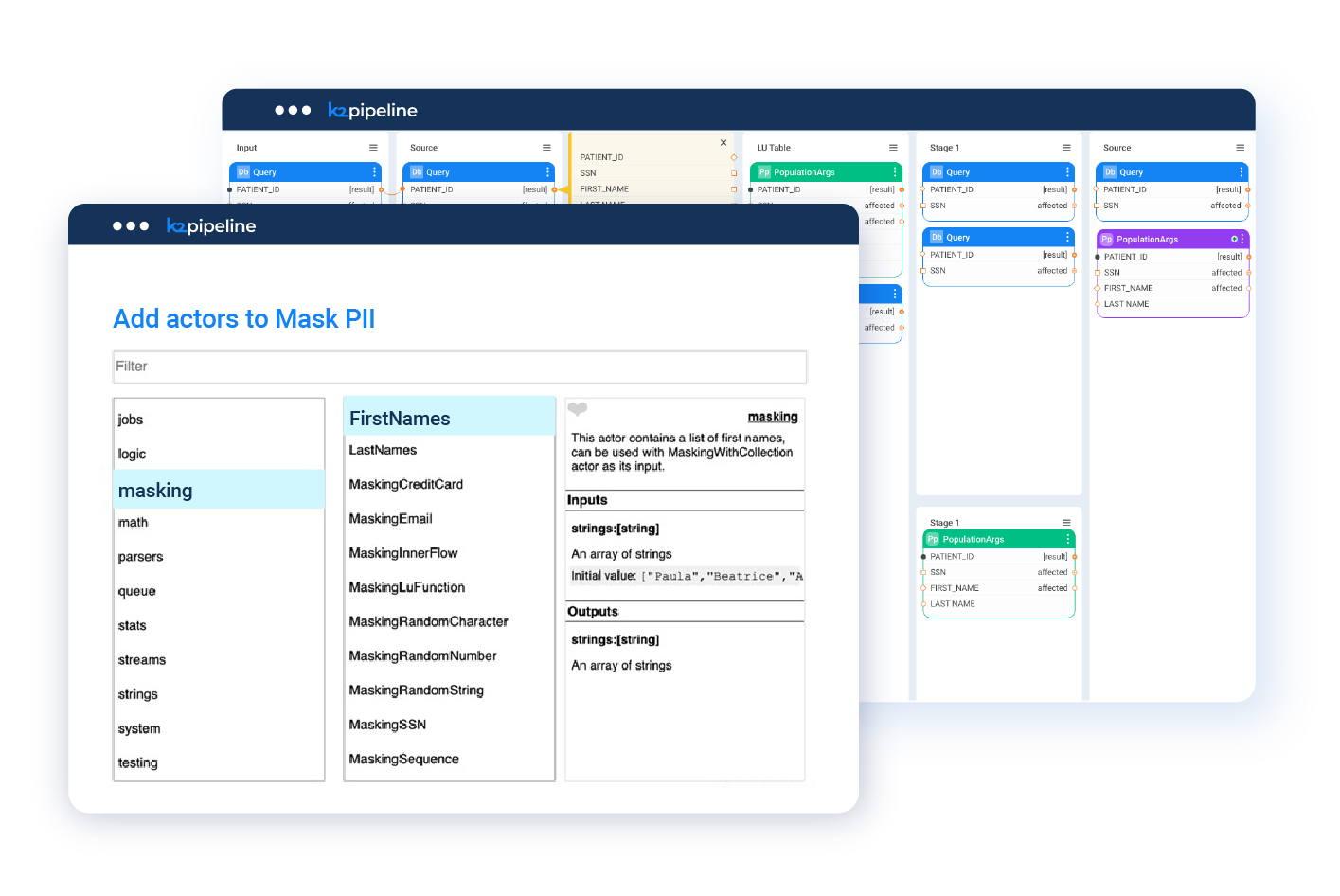

Mask sensitive data inflight, while preserving data integrity, to adhere to data privacy and security regulations.

- Cleanse the data in real time, to ensure data quality.

-

Encrypt data from the time it is ingested from the source systems, to the moment it is served to data lakes and data warehouses.

-

Leverage for both operational and analytical workloads, in a data mesh or data fabric – on premises, in the cloud (iPaaS), or across hybrid environments.

-

Deploy in a cluster – close to sources and targets – to reduce bandwidth costs, enhance security, and increase speed.
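Masking "while preserving data integrity", as listed above, typically means that a given sensitive value is always replaced by the same substitute, so keys still join across tables after masking. The sketch below shows one common way to get that property, keyed-hash (HMAC-SHA256) tokenization, using only Python's standard library. It is an illustration under stated assumptions, not K2view's masking implementation; the key, the field names, and the `tok_` prefix are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real pipeline would load it from a secrets manager.
MASKING_KEY = b"example-secret-key"


def mask_value(value: str, key: bytes = MASKING_KEY) -> str:
    """Deterministically replace a sensitive value with an opaque token.

    The same input and key always yield the same token, so masked keys still
    join across tables. The digest is truncated to 16 hex characters (64 bits)
    for readability, which raises collision risk on very large datasets.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def mask_rows(rows, fields):
    """Return copies of rows with the given fields masked; other fields pass through unchanged."""
    return [{k: (mask_value(str(v)) if k in fields else v) for k, v in row.items()} for row in rows]


customers = [{"ssn": "123-45-6789", "name": "Ada"}]
orders = [{"order_id": 10, "customer_ssn": "123-45-6789", "total": 42.0}]

masked_customers = mask_rows(customers, {"ssn", "name"})
masked_orders = mask_rows(orders, {"customer_ssn"})

# The masked foreign key in orders still matches the masked key in customers.
print(masked_orders[0]["customer_ssn"] == masked_customers[0]["ssn"])  # True
```

If downstream systems validate formats (for example, a nine-digit SSN), a format-preserving masking technique would be needed instead of opaque tokens.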

ANY SOURCE, TO ANY TARGET, ANYWHERE

All data integration and delivery methods

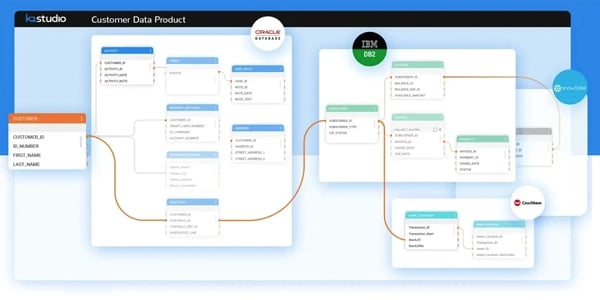

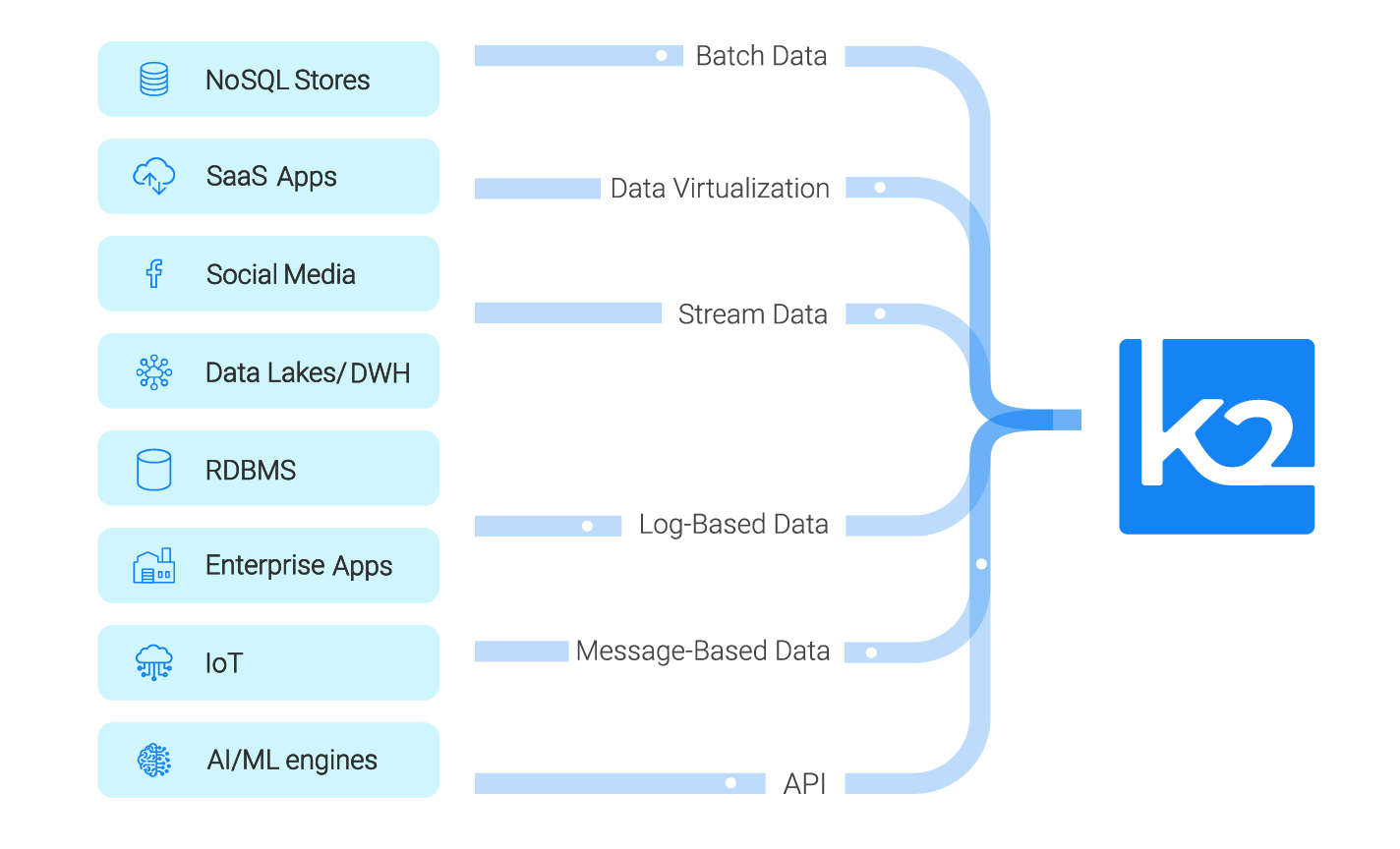

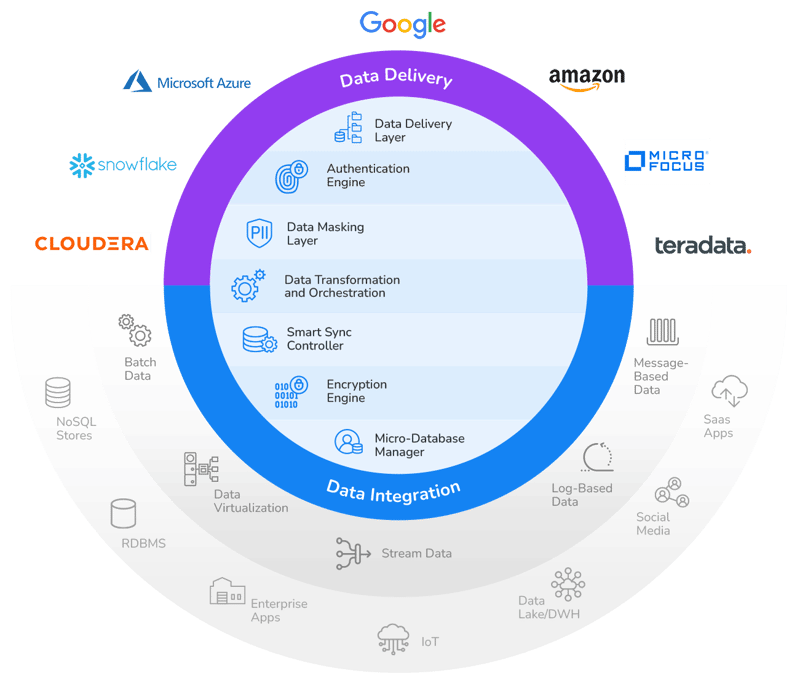

K2view prepares and pipelines data from any data source to any data lake or data warehouse, in any format, across cloud and on-premises environments.

- Dozens of pre-built connectors to hundreds of relational databases, NoSQL sources, legacy mainframes, flat files, data lakes, and data warehouses.

- Supported data integration and delivery methods include bulk ETL, reverse ETL, data virtualization, data streaming, log-based change data capture (CDC), message-based data integration, and APIs.

AUGMENTED DATA TRANSFORMATION

Save time and resources with AI

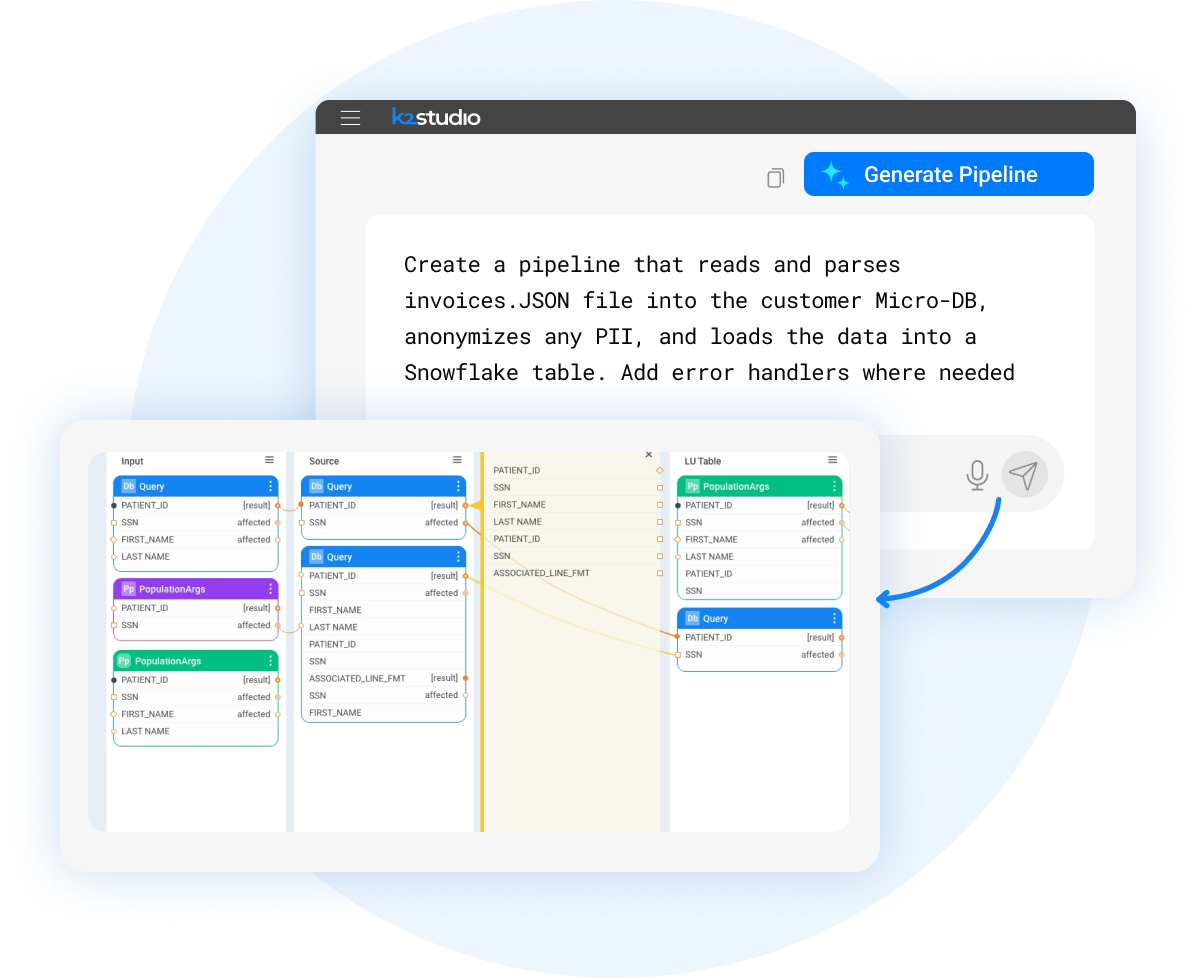

An AI-driven copilot provides contextual recommendations for implementing transformations, cleansing, and orchestration. It automates:

- Data pipeline generation and documentation

- Text-to-SQL conversion

- Data classification

- Data product schema modeling

DATA PIPELINE TOOLS

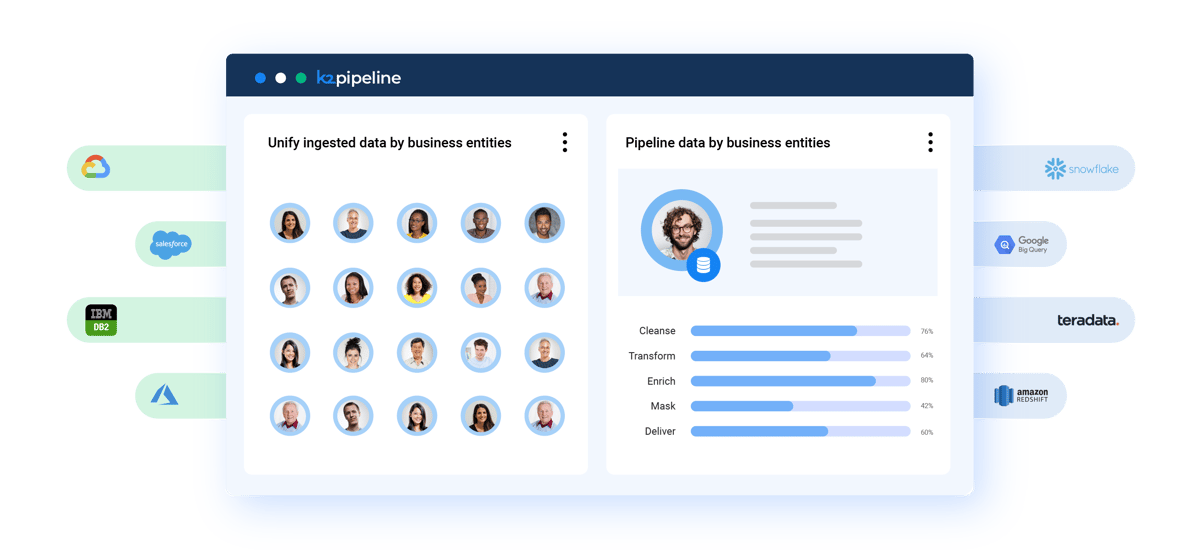

Massive-scale, multi-cloud, and on-premises deployments

K2view Data Pipeline supports massive-scale, hybrid, multi-cloud, and on-premises deployments. It ingests data from all source systems and delivers it to all data lakes and data warehouses.


Data pipeline tools and capabilities

Business entity approach

Collect, process, and serve data by business entity.

Referential integrity

Ingest and unify data from all sources while preserving referential integrity.
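The business entity approach and referential integrity can be illustrated with a small, generic sketch: rows from several hypothetical source systems (CRM, billing, support) are grouped under one record per customer, and any row that points to a customer with no master record is reported as an orphan instead of being silently dropped. The source names, field names, and the `build_entities` helper are assumptions for illustration, not part of K2view Data Pipeline.

```python
from collections import defaultdict


def build_entities(customers, *related_sources, key="customer_id"):
    """Group rows from several sources under one record per business entity (here: customer).

    Each related source is a (source_name, rows) pair. Rows whose key has no
    matching customer are returned as orphans, i.e. referential-integrity violations.
    """
    entities = {c[key]: {"customer": c, "related": defaultdict(list)} for c in customers}
    orphans = []
    for source_name, rows in related_sources:
        for row in rows:
            entity = entities.get(row[key])
            if entity is None:
                orphans.append((source_name, row))  # no master record for this key
            else:
                entity["related"][source_name].append(row)
    return entities, orphans


crm = [{"customer_id": 1, "name": "Ada"}, {"customer_id": 2, "name": "Grace"}]
billing = [{"customer_id": 1, "invoice": "INV-7"}, {"customer_id": 3, "invoice": "INV-9"}]
tickets = [{"customer_id": 2, "ticket": "T-1"}]

entities, orphans = build_entities(crm, ("billing", billing), ("support", tickets))
print(dict(entities[1]["related"]))  # {'billing': [{'customer_id': 1, 'invoice': 'INV-7'}]}
print(orphans)  # [('billing', {'customer_id': 3, 'invoice': 'INV-9'})]
```

Reporting orphans separately, rather than discarding them, lets a pipeline route integrity violations to a quarantine or review step before the unified entity data is served.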