Retrieval-Augmented Generation (RAG) prompt engineering is a generative AI technique that enhances the responses generated by Large Language Models (LLMs).

What is RAG prompt engineering?

RAG (Retrieval-Augmented Generation) prompt engineering is a methodology for designing prompts that draws on LLM agents and functions, across both retrieval and generative models, to produce more accurate and contextually relevant outputs. RAG itself is a generative AI framework for improving the accuracy and reliability of LLMs using relevant data from private sources.

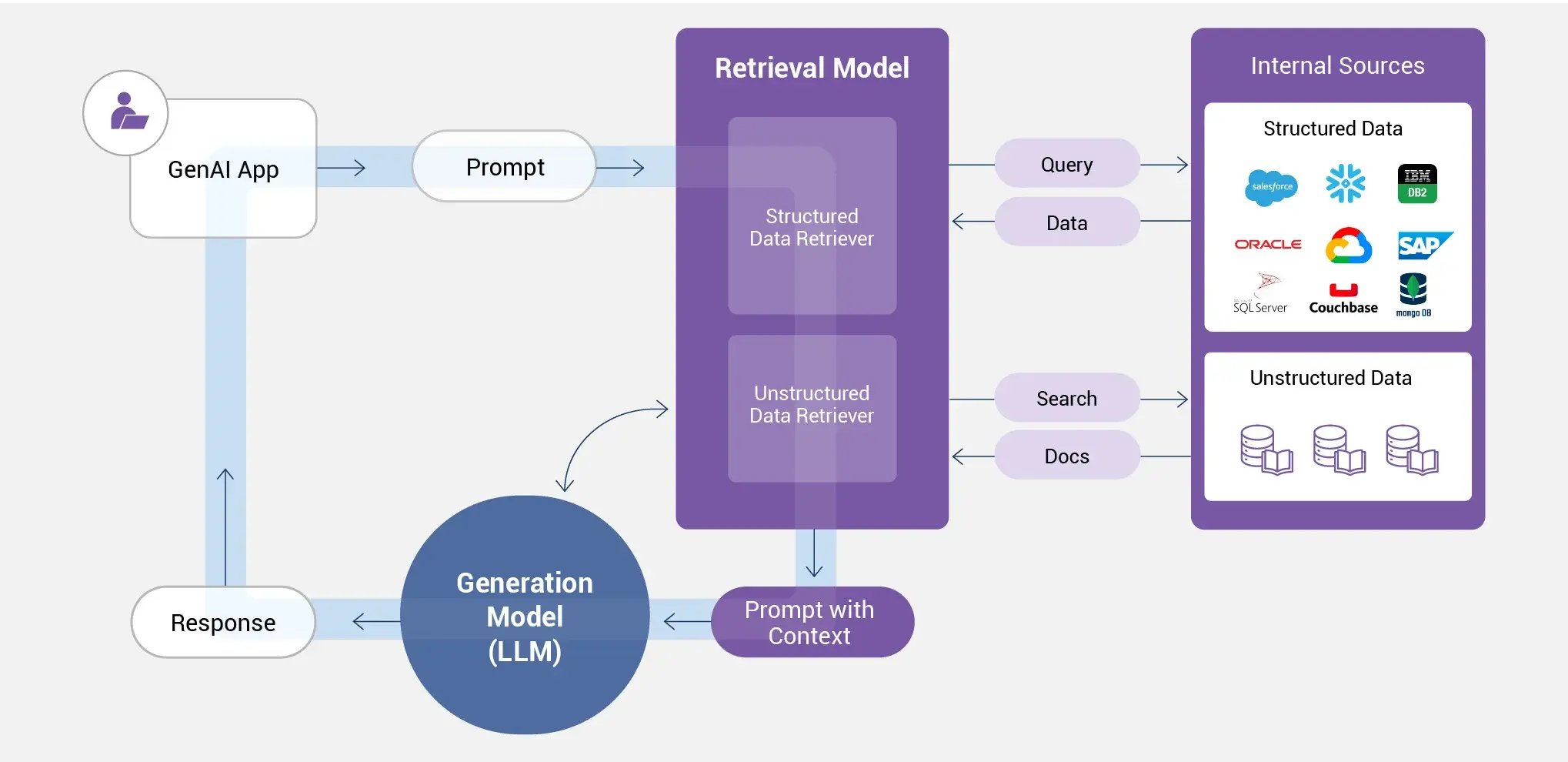

In the retrieval-augmented generation data flow, RAG prompt engineering comes into play in steps 3 and 4, below:

1. The user enters a prompt, which triggers the data retrieval model to access your organization’s internal sources as needed.

2. The retrieval model queries your enterprise systems for structured data, and your knowledge bases for unstructured data.

3. RAG prompt engineering is used to bundle the user’s original prompt with additional context.

4. The generation model (LLM) uses the enhanced prompt to create a more accurate and informed response, which is then sent to the user.
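As a rough illustration of step 3, the sketch below bundles a user prompt with both structured and unstructured context. The function name `build_enhanced_prompt`, the section labels, and the sample customer record are all assumptions made for this example; they are not taken from any particular product or library.

```python
import json


def build_enhanced_prompt(user_prompt, structured_records, unstructured_passages):
    """Bundle the user's original prompt with retrieved context (step 3)."""
    sections = ["Answer the question using only the context below."]

    # Structured data (e.g. rows from an enterprise system) is serialized as JSON.
    if structured_records:
        sections.append(
            "Structured data:\n" + json.dumps(structured_records, indent=2)
        )

    # Unstructured data (e.g. knowledge base passages) is listed as bullets.
    if unstructured_passages:
        passages = "\n".join(f"- {p}" for p in unstructured_passages)
        sections.append("Knowledge base passages:\n" + passages)

    # The user's original prompt goes last, after all of the context.
    sections.append("Question: " + user_prompt)
    return "\n\n".join(sections)


# Hypothetical sample data, for illustration only.
prompt = build_enhanced_prompt(
    "When does my plan renew?",
    [{"customer_id": 42, "plan": "Premium", "renewal_date": "2025-01-15"}],
    ["Premium plans renew automatically every 12 months."],
)
print(prompt)
```

The resulting string is what would be sent to the generation model in step 4. Placing the question after the context is one common layout, not a requirement.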

Grounding LLMs with RAG prompt engineering

To harness the potential of Large Language Models (LLMs), we need to provide clear instructions – in the form of LLM prompts. An LLM prompt is text that instructs the LLM on what kind of response to generate. It acts as a starting point, providing context and guiding the LLM towards the desired outcome. Here are some examples of different types of LLM prompts:

Task-oriented prompts

- "Write a poem about a cat chasing a butterfly." (Instructs the LLM to generate creative text in the form of a poem with a specific theme.)

- "Translate 'Hello, how are you?' from English to Spanish: " (Instructs the LLM to perform a specific task – translation.)

Content-specific prompts

- "Write a news article about climate change based on the latest sources." (Provides specific content focus and emphasizes factual accuracy.)

- "Continue this story: When my spaceship landed on a strange planet, I..." (Offers context for the LLM to continue a creative narrative.)

Question-answering prompts

- "What is the capital of Bolivia?" (Instructs the LLM to access and process information to answer a specific question.)

- "What are the pros and cons of genetic engineering based on the attached publication?" (Provides context from a source and asks the LLM to analyze and answer a complex question.)

Code generation prompts

- "Write a Python function to calculate the factorial of a number." (Instructs the LLM to generate code that performs a specific programming task.)

- "Complete the attached JavaScript code snippet to display an alert message on the screen." (Provides partial code and instructs the LLM to complete the functionality.)

The effectiveness of an LLM prompt depends largely on its clarity and detail. By providing specific instructions, relevant context, and the desired task or format, we help the LLM provide more accurate and informed outputs.

Augmenting LLMs with extra knowledge via RAG

Retrieval-Augmented Generation (RAG) is a technique that enhances LLM capabilities by incorporating additional knowledge – from trusted private sources – into the process of generating text. Here's how it works:

- Information retrieval

The first step involves a retrieval system. This system functions like a search engine, sifting through a vast collection of documents (like Wikipedia or a company's internal knowledge base) to identify information most relevant to a given user prompt.

- Prompt augmentation

Once relevant information is retrieved, RAG merges it with the original user prompt. The result is a more comprehensive set of instructions that not only conveys the user's intent but also incorporates auxiliary knowledge.

- Enhanced LLM response

The augmented prompt is then fed into the LLM. By having access to this additional context, the LLM is better equipped to generate a response that is factually accurate, relevant to the user's query, and potentially more personalized than what it could achieve with the original prompt alone.
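The three stages above can be sketched end to end in a few lines. This is a toy example under stated assumptions: retrieval is approximated with simple word overlap (production systems typically use a search index or vector embeddings), the three sample documents are invented, and `generate` is a placeholder standing in for a real LLM call.

```python
import re

# Hypothetical knowledge base, for illustration only.
DOCUMENTS = [
    "A refund is processed within 5 business days of the request.",
    "Our support team is available Monday through Friday.",
    "Premium subscribers can request a refund within 30 days of purchase.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Information retrieval: rank documents by words shared with the query."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop non-matching docs
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def augment(query, passages):
    """Prompt augmentation: merge retrieved passages with the user prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


def generate(prompt):
    """Placeholder for the LLM call; a real system would call a model here."""
    return f"[LLM response to a {len(prompt)}-character prompt]"


query = "How long does a refund take?"
passages = retrieve(query, DOCUMENTS)
augmented_prompt = augment(query, passages)
print(augmented_prompt)
print(generate(augmented_prompt))
```

Word overlap is deliberately crude (it even counts common words such as "a"), but it keeps the example self-contained while showing how the retrieved passages end up inside the prompt.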

The RAG approach offers several benefits:

- Improved factual accuracy

LLMs often have issues with factual grounding. RAG helps to mitigate this phenomenon by providing access to reliable internal and external sources that the LLM can leverage during the response generation process.

- Enhanced relevance

With the right RAG prompt engineering, the LLM provides responses that are more focused and directly address the user's needs.

- Domain-specific knowledge

RAG can be particularly valuable when dealing with tasks that require specialized knowledge in a particular field. By accessing domain-specific knowledge bases, RAG can empower LLMs to answer more intelligently.

Note that the quality of LLM responses depends heavily on effective RAG prompt engineering, which guides the retrieval system to the most appropriate information sources.

RAG prompt engineering makes LLMs more effective

RAG prompt engineering is particularly beneficial for tasks that require access to real-world knowledge. It helps address the limitations of LLMs, such as their tendency to generate AI hallucinations that may not be grounded in fact. However, effective RAG prompt engineering requires careful consideration. The system needs clear instructions on what information to retrieve and how the LLM should utilize it.

But how do we ensure the retrieved information is truly helpful? This is where RAG prompt engineering comes in. Here are the key elements:

- Specifying the data needs

RAG prompt engineering starts by pinpointing the exact type of knowledge the LLM needs, be it factual details, historical context, or relevant research findings. The clearer the instructions, the more targeted the response.

- Guiding LLM interpretation

Data retrieval isn't enough. We also need to tell the LLM how to use the data. RAG prompt engineering makes sure the LLM focuses on the most pertinent details and avoids irrelevant tangents.

- Tailoring language style and tone

Effective communication requires adapting language based on the context. RAG prompt engineering can also specify the desired tone of voice for the most seamless information delivery.
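One way to make these three elements explicit is to give each its own slot in a prompt template. The sketch below is illustrative only: the `RagPromptSpec` class, its field names, and the sample wording are assumptions for this example rather than an established format.

```python
from dataclasses import dataclass


@dataclass
class RagPromptSpec:
    data_needs: str      # what kind of knowledge the LLM is given
    usage_guidance: str  # how the LLM should use the retrieved data
    tone: str            # desired language style and tone


def render_prompt(spec, retrieved_context, question):
    """Combine the three elements with the retrieved context and the question."""
    return (
        f"You will receive {spec.data_needs}.\n"
        f"Instructions: {spec.usage_guidance}\n"
        f"Tone: {spec.tone}\n\n"
        f"Context:\n{retrieved_context}\n\n"
        f"Question: {question}"
    )


# Hypothetical values, for illustration only.
spec = RagPromptSpec(
    data_needs="billing records and policy excerpts for one customer",
    usage_guidance="Use only the context. If the answer is not there, say so.",
    tone="friendly and concise",
)
rendered = render_prompt(
    spec,
    "- Invoice 1001 was paid on 2024-03-02.",
    "Has my last invoice been paid?",
)
print(rendered)
```

Keeping the elements in separate fields makes it easier to adjust one of them, such as the tone, without touching the retrieval or interpretation instructions.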

By concentrating on RAG prompt engineering, we empower a RAG LLM to deliver more comprehensive and reliable responses than it could before.

RAG prompt engineering with GenAI Data Fusion

The K2view suite of RAG tools, also known as GenAI Data Fusion, creates contextual RAG prompts grounded in your enterprise data, from any source, in real time. It harnesses chain-of-thought prompting to enhance any GenAI application – making sure, for instance, that your RAG chatbot can leverage your customer data to unleash the true potential of your LLM.

GenAI Data Fusion:

- Incorporates real-time data concerning a specific customer or any other business entity into prompts.

- Masks sensitive data or PII (Personally Identifiable Information) dynamically.

- Fields data service access requests and suggests cross-sell recommendations.

- Accesses enterprise systems – via API, CDC, messaging, or streaming – to assemble data from many different source systems.

GenAI Data Fusion powers your AI data apps to respond with more accurate recommendations, information, and content – thanks in part to RAG prompt engineering.
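To make the idea of masking PII before it reaches a prompt more concrete, here is a generic sketch. It does not represent how GenAI Data Fusion works internally; the `mask_pii` helper, the two regular expressions, and the sample record are assumptions for illustration, and they cover only email addresses and one US-style phone number format.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_pii(text):
    """Replace each matched PII value with a placeholder label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Hypothetical record, for illustration only.
record = "Contact Dana at dana.lee@example.com or 555-123-4567 about the renewal."
masked = mask_pii(record)
print(masked)  # Contact Dana at [EMAIL] or [PHONE] about the renewal.
```

Note that the name in the sample record is left untouched: pattern matching alone cannot catch every kind of PII, which is why masking is usually handled by dedicated tooling.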

Discover K2view GenAI Data Fusion, the RAG tools with built-in RAG prompt engineering.