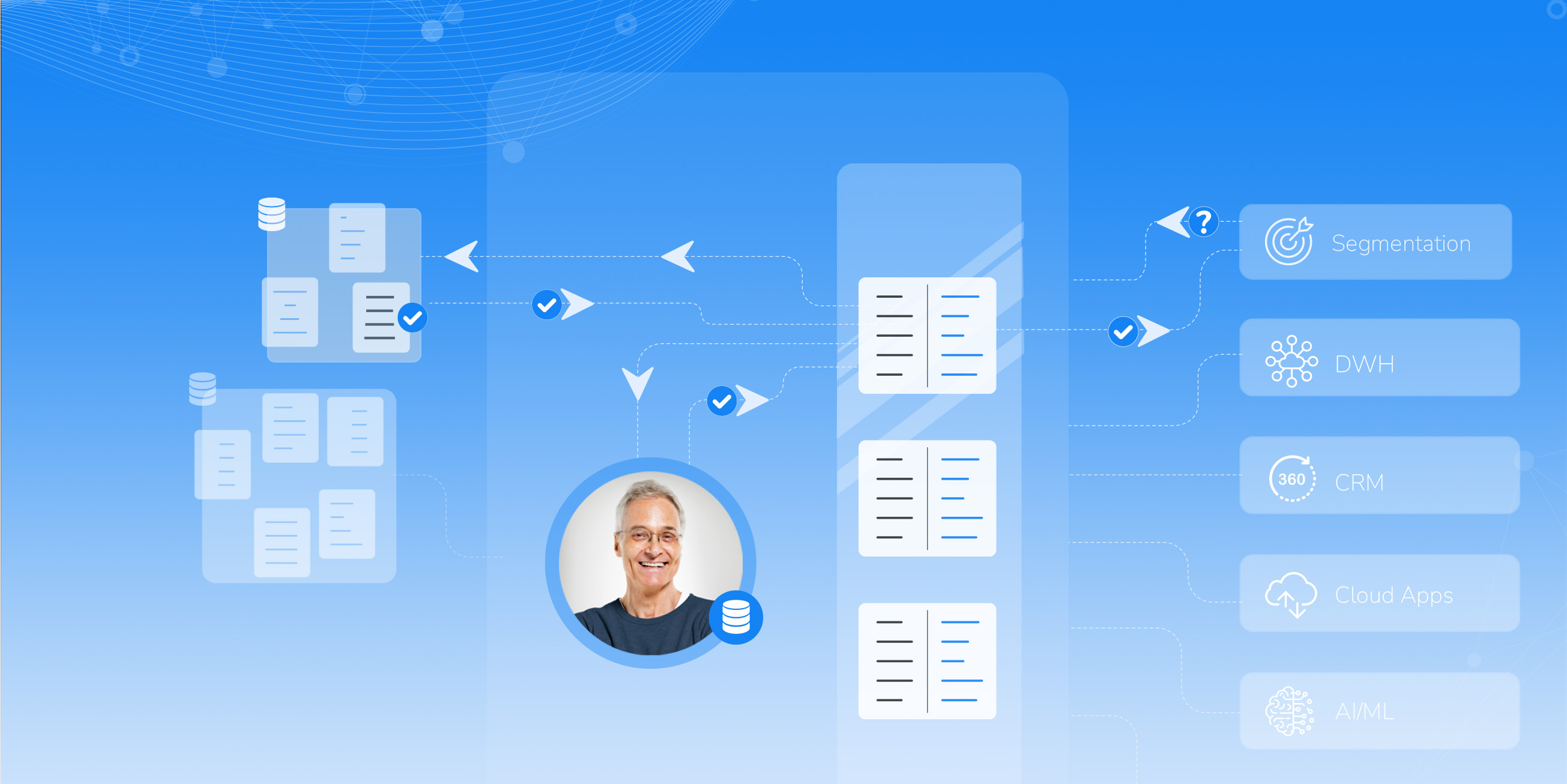

For maximum agility – in making faster decisions, serving customers more personally, and outdoing competitive offerings – enterprises recognize the need to use their data more effectively.

Exploiting the power of data analytics, business intelligence, and workflow automation is one way for companies to accelerate new revenue streams while reducing costs and improving the performance of data services.

But here lies the challenge: enterprise data is stored in disparate locations and in rapidly evolving formats, such as:

- Relational and non-relational databases, like Amazon Redshift, MongoDB, and MySQL
- Cloud/Software-as-a-Service applications, such as Mailchimp, NetSuite, and Salesforce
- Semi-structured and unstructured data, including social media data, call recordings, email interactions, images, and more
- Legacy systems, such as mainframe and midrange applications
- CRM/ERP data, like Microsoft Dynamics, Oracle, and SAP
- Flat files, such as CSV, JSON, and XML
- Big data repositories, including data lakes and data warehouses

The demand for speed, combined with growing volumes of increasingly complex data, leads to further challenges, such as:

-

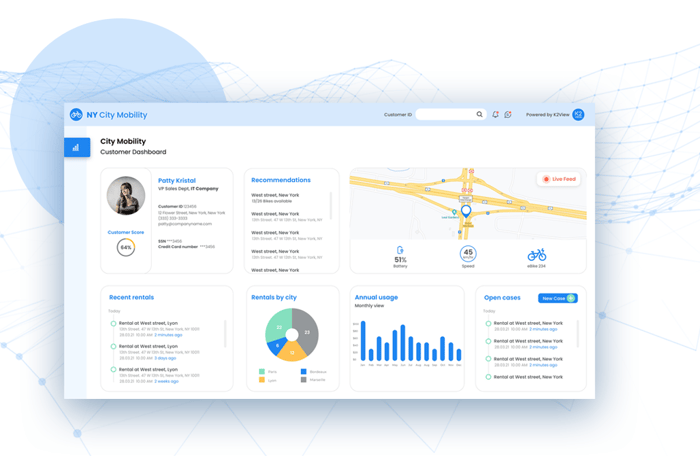

Self-service capabilities for data users

-

Time efficiency in data management

-

Trusted data quality in terms of cleanliness and freshness

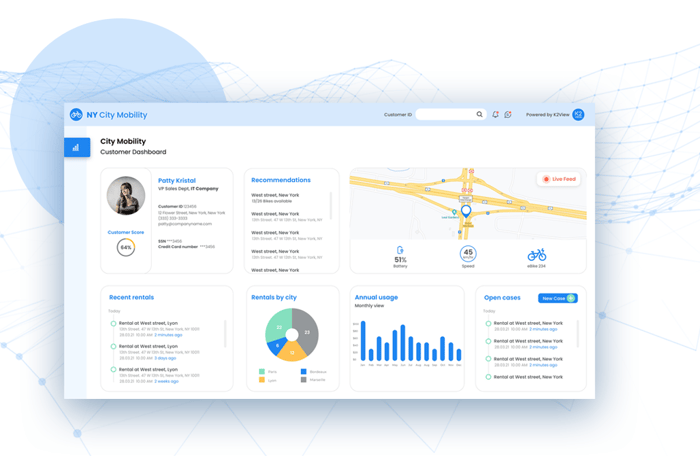

In a virtualized environment, different domains can access the data they need, on their own, in real time.

To address these challenges, organizations recognize the need to move from silos of disparate data and isolated technologies to a business-focused strategy in which data and analytics are simply part of everyday life for business users.

Cultural obstacles

Data virtualization must overcome certain obstacles before it is widely accepted. For example, some may think that the semantic virtual tiers built into some of their applications can substitute for data virtualization, while others may assume that data virtualization is a replacement for ETL.

Both assumptions are faulty. Data virtualization is a cross-platform technology that must be used alongside other data delivery styles (e.g., bulk/batch delivery with ETL) and with data transformation and orchestration. Used on its own, it can limit performance and inhibit its own adoption.
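The difference between a virtual view and an ETL copy can be illustrated with a minimal Python sketch. It assumes two toy in-memory sources (a hypothetical CRM table and a hypothetical CSV export of orders); `VirtualView` and `etl_snapshot` are illustrative names, not the API of any data virtualization product. A real platform would also push queries down to the sources, cache results, and enforce security, none of which is shown here.

```python
import csv
import io
from typing import Callable, Dict, Iterable, List

Row = Dict[str, str]

# Hypothetical source 1: an operational CRM table held in memory.
crm_rows: List[Row] = [
    {"customer_id": "1", "name": "Acme"},
    {"customer_id": "2", "name": "Globex"},
]

# Hypothetical source 2: a flat CSV export of orders.
orders_csv = "customer_id,total\n1,120\n2,80\n"


def read_crm() -> Iterable[Row]:
    return list(crm_rows)


def read_orders() -> Iterable[Row]:
    return list(csv.DictReader(io.StringIO(orders_csv)))


class VirtualView:
    """Joins two sources at query time; no data is copied or stored."""

    def __init__(self, left: Callable[[], Iterable[Row]],
                 right: Callable[[], Iterable[Row]], key: str) -> None:
        self.left, self.right, self.key = left, right, key

    def query(self) -> List[Row]:
        # Re-read both sources on every query, then join on the shared key.
        right_index = {r[self.key]: r for r in self.right()}
        return [{**l, **right_index[l[self.key]]}
                for l in self.left() if l[self.key] in right_index]


def etl_snapshot(view: VirtualView) -> List[Row]:
    """Batch-style copy: materializes the joined result once."""
    return [dict(r) for r in view.query()]


view = VirtualView(read_crm, read_orders, key="customer_id")
warehouse_copy = etl_snapshot(view)

crm_rows[0]["name"] = "Acme Corp"  # the source changes after the batch load

print(view.query()[0]["name"])    # Acme Corp (read live from the source)
print(warehouse_copy[0]["name"])  # Acme (stale until the next batch run)
```

Because the virtual view re-reads its sources on every query, it reflects changes immediately, but it also pays the cost of each source round trip. That trade-off is one reason batch ETL remains useful alongside virtualization rather than being replaced by it.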

Finally, data virtualization is less appealing to traditional data management teams because it challenges existing practices. For example, those who oppose more agile approaches, like “experiment-and-fail-fast”, would most likely also resist data virtualization.
