
Retrieval-Augmented Generation (RAG) is an AI framework that improves LLM accuracy. Learn how to inject enterprise data into LLMs for more reliable responses.

Auto-generating reliable responses to user queries – based on an organization’s private information and data – remains an elusive goal for enterprises looking to generate value from their generative AI apps. Sure, technologies like machine translation and abstractive summarization can break down language barriers and lead to some satisfying interactions, but, overall, generating an accurate and reliable response is still a significant challenge.

01

What is retrieval-augmented generation?

Retrieval-Augmented Generation (RAG) is a Generative AI (GenAI) architecture that augments a Large Language Model (LLM) with fresh, trusted data retrieved from authoritative internal knowledge bases and enterprise systems, to generate more informed and reliable responses.

The acronym “RAG” is attributed to the 2020 paper “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks”, published by researchers at Facebook AI Research (now Meta AI). The paper describes RAG as “a general-purpose fine-tuning recipe” because it’s meant to connect any LLM with any internal or external knowledge source.

As its name suggests, retrieval-augmented generation inserts a data retrieval component into the response generation process to enhance the relevance and reliability of the answers.

The retrieval model accesses, selects, and prioritizes the most relevant documents and data from the appropriate sources based on the user’s query, transforms them into an enriched, contextual prompt, and invokes the LLM via its API. The LLM then generates a more accurate and coherent response for the user, without the model itself having to be retrained.

A helpful RAG analogy is a stock trader using the combination of publicly available historical financial information and live market feeds.

Stock traders in a firm make investment decisions based on analyzing financial markets, industry, and company performance. However, to generate the most profitable trades, they access live market feeds and internal stock recommendations provided by their firm.

In this analogy, the publicly available financial data plays the role of the LLM’s training data, while the live data feeds and the firm’s internal stock recommendations represent the internal sources that RAG retrieves from.

02

Providing reliable responses isn't easy

Traditional text generation models, typically based on encoder/decoder architectures, can translate languages, respond in different styles, and answer simple questions. But because these models rely on the statistical patterns found in their training data, they sometimes provide incorrect or irrelevant information, called hallucinations.

Generative AI leverages Large Language Models (LLMs) that are trained on massive amounts of publicly available (Internet) information. The few major LLM vendors (such as Microsoft, Google, AWS, and Meta) can’t retrain their models frequently due to the high cost and time involved. Since LLMs ingest public data as of a certain date, they’re never fully current, and they have no access to the highly valuable, private data stored in an organization. As a result, when asked about recent events or private business data, LLMs are especially prone to hallucinations.

Retrieval-Augmented Generation (RAG) is an emerging generative AI technology that addresses these limitations.

A complete RAG implementation retrieves structured data from enterprise systems and unstructured data from company knowledge bases.

RAG transforms real-time, multi-source business data into intelligent, context-aware, and compliant prompts to reduce AI hallucinations and elevate the effectiveness and trust of generative AI apps.

According to the Gartner Generative AI Hype Cycle report from 2024, "IT and data and analytics leaders looking at adopting GenAI capabilities on top of private and public corporate data should prioritize RAG investments."

03

RAG architecture

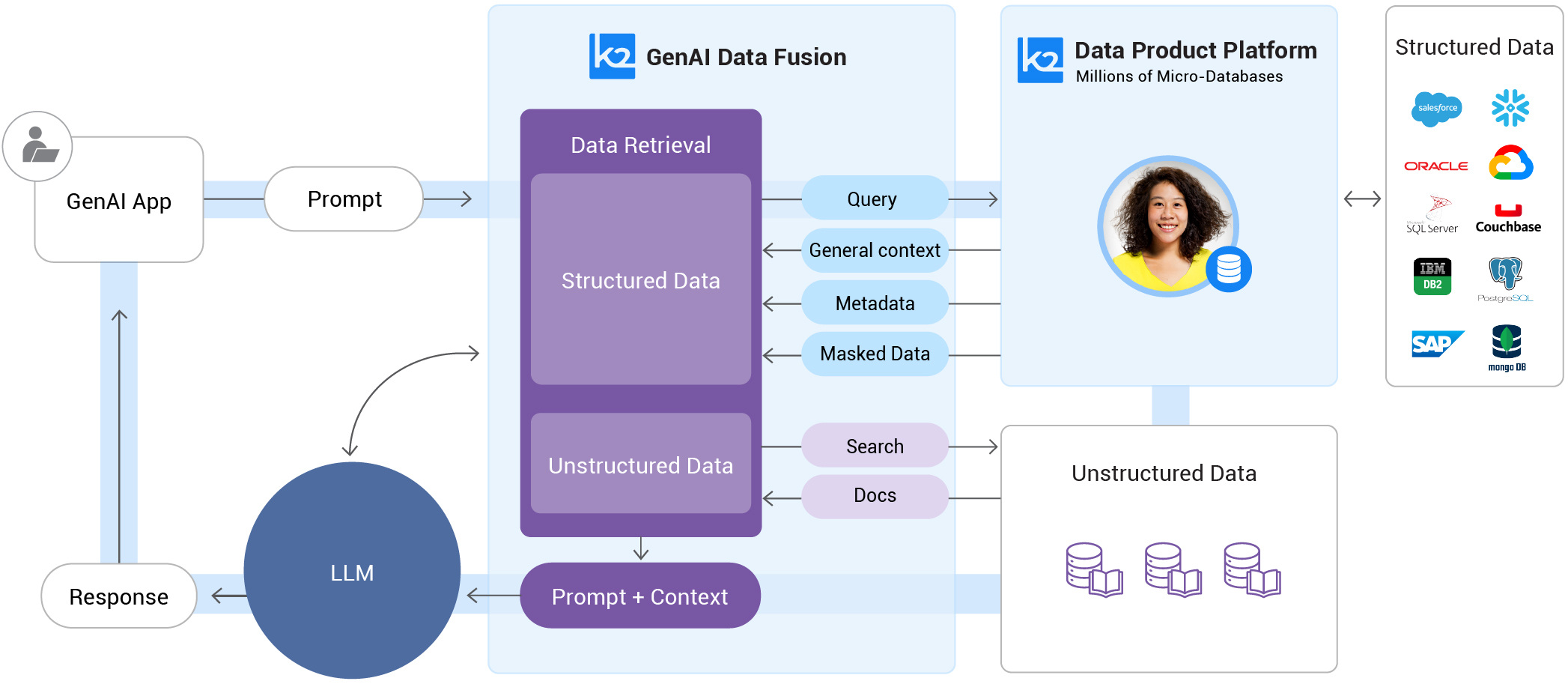

The RAG architecture diagram above illustrates the retrieval-augmented generation framework and data flow, from user prompt to response:

- The user enters a prompt, which triggers the data retrieval model to access the company's internal sources, as required.

- The retrieval model queries the company’s internal sources for structured data from enterprise systems and for unstructured data, like docs, from knowledge bases.

- The retrieval model crafts an enriched prompt, which augments the user’s original prompt with additional contextual information, and passes it as input to the generation model (LLM).

- The LLM uses the augmented prompt to generate a more accurate and relevant response, which is then provided to the user.

The end-to-end round trip – from user prompt to response – should take 1-2 seconds to support conversational interfaces.
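The four-step flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the in-memory `KNOWLEDGE_BASE`, the word-overlap `retrieve` function, and `fake_llm` are all hypothetical stand-ins for real internal sources, a real retrieval model, and a real LLM API call.

```python
from typing import Callable

# Hypothetical in-memory knowledge base standing in for internal sources.
KNOWLEDGE_BASE = {
    "refund-policy": "Refunds are issued within 14 days of a returned item being received.",
    "shipping-policy": "Standard shipping takes 3 to 5 business days.",
}


def retrieve(query: str, k: int = 1) -> list[str]:
    """Retrieval step: rank documents by how many words they share with the query (toy scorer)."""
    query_words = set(query.lower().split())
    ranked = sorted(
        KNOWLEDGE_BASE.values(),
        key=lambda text: len(query_words & set(text.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def augment(query: str, context: list[str]) -> str:
    """Augmentation step: wrap the user's prompt with the retrieved context."""
    context_block = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM API call: it simply echoes the first context line."""
    return prompt.splitlines()[1].removeprefix("- ")


def rag_answer(query: str, llm: Callable[[str], str] = fake_llm) -> str:
    """End to end: user prompt -> retrieval -> augmented prompt -> generation -> response."""
    return llm(augment(query, retrieve(query)))
```

Calling `rag_answer("How long do refunds take?")` routes the question through retrieval and augmentation before the (fake) model answers. Passing the LLM in as a callable keeps the pipeline independent of any particular vendor API.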

04

The data retrieval process

The RAG lifecycle, from data sourcing to the final output, is built around a Large Language Model (LLM).

An LLM is a foundation Machine Learning (ML) model that uses deep learning for natural language processing. It’s trained on massive amounts of publicly available text to learn complex language patterns and relationships, and to perform related tasks, such as text generation, summarization, translation, and, of course, question answering.

These models are pre-trained on large and diverse datasets to learn the intricacies of language, and can be fine-tuned for specific applications or tasks. The term “large” is no exaggeration: these models can contain billions of parameters (the learned weights of the neural network). GPT-4, for example, is reported to have over a trillion, although OpenAI has not officially disclosed its size.

RAG systems execute retrieval and generative models in 6 key steps:

1. Sourcing

The starting point of any RAG system is data sourcing, from internal text documents and enterprise systems. The source data is queried (if structured) and searched (if unstructured) by the retrieval model to identify and collect relevant information. To ensure accurate, diverse, and trusted data sourcing, you must maintain accurate and up-to-date metadata, as well as manage and minimize data redundancy.

2. Preparing enterprise data for retrieval

Enterprises must organize their multi-source data and metadata so that RAG can access it in real time. For example, your customer data, which includes master data (the customer's unique identifying attributes), transactional data (e.g., service requests, purchases, payments, and invoices), and interaction data (emails, chats, phone call transcripts), must be integrated, unified, and organized for real-time information retrieval.

Depending on the use case, you may need to arrange your data by other business entities, such as employees, products, suppliers, or anything else that’s relevant for your use case.
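As a rough illustration of organizing multi-source data by business entity, the sketch below groups records from three hypothetical systems (a CRM, a billing system, and a contact-center log) into one view per customer. The system extracts, the field names, and the shared `customer_id` join key are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical extracts from three source systems, all keyed by customer_id.
crm = [{"customer_id": "C1", "name": "David Smith", "segment": "Gold"}]
billing = [
    {"customer_id": "C1", "invoice": "INV-9", "amount": 120.0},
    {"customer_id": "C2", "invoice": "INV-10", "amount": 75.5},
]
interactions = [{"customer_id": "C1", "channel": "chat", "summary": "Asked about a late invoice"}]


def build_entity_views(master, transactions, interaction_log):
    """Unify master, transactional, and interaction data into one view per customer."""
    views = defaultdict(lambda: {"master": None, "transactions": [], "interactions": []})
    for rec in master:
        views[rec["customer_id"]]["master"] = rec
    for rec in transactions:
        views[rec["customer_id"]]["transactions"].append(rec)
    for rec in interaction_log:
        views[rec["customer_id"]]["interactions"].append(rec)
    return dict(views)


views = build_entity_views(crm, billing, interactions)
```

Because each view is keyed by customer ID, fetching one customer’s data at query time becomes a single key lookup rather than a join across systems.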

3. Preparing documents for retrieval

Unstructured data, such as textual documents, must be divided into smaller, manageable pieces of related information, a process called "chunking". Effective chunking can improve retrieval performance and accuracy. For example, a whole document may be a single chunk, but it could also be split further into sections, paragraphs, sentences, or even words. Chunking also reduces cost, because only the relevant parts of a document, rather than the whole document, are included in the LLM prompt.
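Here is a minimal sketch of one common approach, fixed-size chunking by word count with a small overlap between neighboring chunks, so that a sentence cut at a boundary still appears with some of its context. The default `chunk_size` and `overlap` values are arbitrary assumptions; many systems chunk by section or paragraph instead, as described above.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of up to `chunk_size` words; neighboring chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```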

Next, the text chunks must be transformed into vectors so they can be stored in a vector database, enabling efficient semantic search. This process, called “embedding”, is performed by an embedding model, typically a transformer-based neural network. Each embedding is linked back to its source chunk, enabling more accurate and meaningful responses.
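The mechanics of a vector store (keep each vector with its chunk and source, then rank by cosine similarity) can be sketched in plain Python. Note the loud assumption: `toy_embed` is a placeholder bag-of-words vector, not a real embedding model, so it matches shared words rather than meaning. In a real system you would replace it with a call to your embedding model, and `InMemoryVectorStore` is a hypothetical stand-in for a vector database.

```python
import math
from collections import Counter


def toy_embed(text: str) -> dict[str, float]:
    """Placeholder 'embedding': an L2-normalized bag-of-words vector, stored sparsely.
    A real system would call an embedding model; this captures shared words, not meaning."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit length, so the dot product equals cosine similarity.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


class InMemoryVectorStore:
    """Keeps (vector, chunk text, source) triples so every hit links back to its source."""

    def __init__(self) -> None:
        self.entries: list[tuple[dict[str, float], str, str]] = []

    def add(self, text: str, source: str) -> None:
        self.entries.append((toy_embed(text), text, source))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str, str]]:
        q = toy_embed(query)
        scored = [(cosine(q, vec), text, source) for vec, text, source in self.entries]
        return sorted(scored, key=lambda hit: hit[0], reverse=True)[:k]
```

Because each hit carries its `source`, the application can cite where an answer came from.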

4. Protecting the data

The RAG framework must employ role-based access controls to prevent users from obtaining answers that are outside the scope of their assigned role. For example, a customer service rep serving a specific customer segment might be prevented from accessing data about customers not in their segment; similarly, a marketing analyst might not be given access to certain confidential financial data.
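A minimal sketch of role-based filtering, applied to retrieved records before they are used to build the prompt, so the LLM never sees data the user isn’t entitled to. The role names, the `segment` field, and the `ROLE_SEGMENTS` policy table are hypothetical.

```python
# Hypothetical policy: which customer segments each role may see.
ROLE_SEGMENTS = {
    "service_rep_smb": {"SMB"},
    "service_rep_enterprise": {"Enterprise"},
    "supervisor": {"SMB", "Enterprise"},
}


def authorized_records(records: list[dict], role: str) -> list[dict]:
    """Keep only records in the caller's allowed segments; unknown roles see nothing (deny by default)."""
    allowed = ROLE_SEGMENTS.get(role, set())
    return [r for r in records if r.get("segment") in allowed]
```

Denying by default means a missing or misspelled role results in an empty context rather than a data leak.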

Further, any sensitive data retrieved by RAG, such as Personally Identifiable Information (PII), must never be accessible to unauthorized users: for example, salespeople must not see credit card information, and service agents must not see Social Security Numbers. The RAG solution must employ dynamic data masking to protect this data in compliance with data privacy regulations.
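Dynamic masking can be sketched as a function that rewrites sensitive fields at read time, depending on who is asking, while leaving the stored data untouched. The field names, the roles in `UNMASKED_FOR_ROLE`, and the “show only the last four characters” rule are assumptions for illustration, not a compliance recipe.

```python
SENSITIVE_FIELDS = {"credit_card", "ssn"}
# Hypothetical rule: which roles may see which sensitive fields unmasked.
UNMASKED_FOR_ROLE = {"billing_admin": {"credit_card"}, "hr_officer": {"ssn"}}


def mask_value(value: str) -> str:
    """Replace all but the last four characters with '*' (fully mask very short values)."""
    if len(value) <= 4:
        return "*" * len(value)
    return "*" * (len(value) - 4) + value[-4:]


def mask_record(record: dict, role: str) -> dict:
    """Return a masked copy of the record for this role; the source record is not modified."""
    visible = UNMASKED_FOR_ROLE.get(role, set())
    return {
        key: mask_value(str(val)) if key in SENSITIVE_FIELDS and key not in visible else val
        for key, val in record.items()
    }
```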

5. Engineering the prompt from enterprise data

After enterprise application data is retrieved, the retrieval-augmented generation process generates an enriched prompt by building a “story” out of the retrieved 360-degree data. There needs to be an ongoing tuning process for prompt engineering, ideally aided by Machine Learning (ML) models, and leveraging advanced chain-of-thought prompting techniques.

For example, we can ask the LLM to reflect on the data it already has and let us know what additional data is required, or why the user terminated the conversation, etc.
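The sketch below turns a retrieved entity record into a short narrative (the “story”) and wraps it, together with the user’s question, in a prompt that asks the model to reason step by step and to name any missing data instead of guessing. The template wording and the record fields (`name`, `tier`, `open_orders`) are hypothetical; real prompts are tuned iteratively, as noted above.

```python
def record_to_story(customer: dict) -> str:
    """Render a customer record as plain sentences the LLM can read."""
    lines = [f"Customer {customer['name']} is in the {customer['tier']} tier."]
    for order in customer.get("open_orders", []):
        lines.append(f"Order {order['id']} is currently {order['status']}.")
    return " ".join(lines)


def build_prompt(customer: dict, question: str) -> str:
    """Combine the entity story and the user's question into one enriched prompt."""
    return (
        "You are a customer service assistant.\n"
        f"Context: {record_to_story(customer)}\n"
        f"Question: {question}\n"
        "Think step by step using only the context. "
        "If the context is insufficient, list the additional data you would need instead of guessing."
    )
```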

6. Doc retrieval via semantic search

When RAG injects unstructured data, like articles, manuals, and other docs, from your knowledge bases into your LLM, it often uses semantic search. Semantic search is a search technique supported by vector databases that attempts to capture the meaning of the words used in a search query and in the indexed content. In terms of RAG, this is relevant for docs only.

Traditional search is focused on keywords. For example, a basic query about alligator species native to Florida might scan the search database for the keywords “alligator” and “Florida” and locate data that contains both. But the system doesn’t actually understand the meaning of “alligators native to Florida”, so it may retrieve too much, too little, or even faulty information. The same search might also miss information by taking the keywords too literally: for example, content about alligators in the Everglades might be overlooked, even though the Everglades are in Florida, because the text never mentions “Florida” by name.
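To make the keyword-matching problem concrete, here is a naive “match every keyword” search over three made-up snippets. It returns only the snippet containing both literal keywords, and misses the Everglades snippet because that text says “alligators” (plural) and never says “Florida”. Semantic search, which compares embedding vectors rather than literal words, is designed to close that gap; this toy deliberately does not implement it.

```python
import re

# Made-up example snippets.
DOCS = {
    "doc1": "The American alligator is native to Florida and Louisiana.",
    "doc2": "Alligators thrive in the Everglades wetlands.",
    "doc3": "Florida is known for its beaches and citrus.",
}


def keyword_search(query_terms: list[str], docs: dict[str, str]) -> list[str]:
    """Return ids of docs containing every query term as a whole word (case-insensitive)."""
    hits = []
    for doc_id, text in docs.items():
        words = set(re.findall(r"[a-z]+", text.lower()))
        if all(term.lower() in words for term in query_terms):
            hits.append(doc_id)
    return hits
```

`keyword_search(["alligator", "Florida"], DOCS)` returns only `["doc1"]`.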

Semantic search goes beyond keyword search by understanding the intent of questions and answering them accordingly. It’s fundamental to doc retrieval.

Today’s enterprises store vast amounts of information – like customer service guides, FAQs, HR documents, manuals, and research reports – across a wide variety of databases. However, keyword-based retrieval is challenging at scale, and may reduce the quality of the generated responses.

Traditional keyword search produces limited results in RAG for knowledge-intensive tasks, and developers must also deal with word embedding, data subsetting, and other complexities as they prepare their data. In contrast, semantic search streamlines the process by retrieving semantically relevant passages, ordered by relevance, to maximize the quality of the RAG response.

05

Retrieval-augmented generation challenges

An organization usually stores its documentation of internal processes, procedures, and operations in offline and online docs – with its business data fragmented across dozens of enterprise systems, like billing, CRM, and ERP.

Active retrieval-augmented generation integrates fresh, trusted data retrieved from a company’s internal sources – docs stored in document databases and data stored in enterprise systems – directly into the generation process.

So, instead of relying solely on its public, static, and dated knowledge base, the enterprise LLM actively ingests relevant data from a company’s own sources to generate better-informed and relevant outputs.

The RAG model essentially grounds the LLM with an organization’s most current information and GenAI data, resulting in more accurate, reliable, and relevant responses.

However, RAG also has its challenges:

- Enterprise RAG relies on enterprise-wide data retrieval. Not only must you maintain up-to-date and accurate information and data, you must also have accurate metadata in place that describes your data for the RAG framework.

- The information and data stored in internal knowledge bases and enterprise systems must be AI-ready: accessible, searchable, and of high quality. For example, if you have 100 million customer records, can you access and integrate all of David Smith’s details in less than a second?

- To generate smart contextual prompts, you’ll need sophisticated prompt engineering capabilities, including chain-of-thought prompting, to inject the relevant data into the RAG LLM in a way that generates the most accurate responses.

- To ensure your data remains private and secure, you must limit your LLM’s access to authorized data only – per the example above, that means only David Smith’s data and nobody else’s.

Besides these challenges, organizations have additional concerns, as revealed in our recent survey:

Top concerns in leveraging enterprise data for GenAI and RAG

Source: K2view Enterprise Data Readiness for GenAI in 2024 report

With the top concerns so evenly spread, it’s clear that addressing only one issue, such as scalability and performance, without also ensuring data quality and consistency, real-time data integration and access, data governance and compliance, and data security and privacy, is insufficient. A holistic approach is needed to effectively integrate enterprise data with GenAI projects.

According to the 2024 Gartner LLM report, Lessons from Generative AI Early Adopters, "Organizations continue to invest significantly in GenAI, but are challenged by obstacles related to technical implementation, costs, and talent."

Despite these challenges, RAG still represents a great leap forward in generative AI. Its ability to leverage up-to-date internal data addresses the limitations of traditional generative models by improving the user experience with more personalized and reliable exchanges of information.

RAG AI is already delivering value in several domains, including customer service, IT service management, sales and marketing, and legal and compliance. Still, depending on the use case, RAG vs fine-tuning vs prompt engineering is under constant evaluation.

06

Retrieval-augmented generation use cases

RAG AI use cases span multiple enterprise domains. Enterprises use RAG for:

| Department | Objective |
|---|---|
| Customer service | Personalize the chatbot response to the customer’s precise needs, behaviors, status, and preferences, to respond more effectively. |
| Sales and marketing | Engage with customers via a chatbot or a sales consultant to describe products and offer personalized recommendations. |
| Compliance | Respond to Data Subject Access Requests (DSARs) from customers. |
| Risk | Identify fraudulent customer activity. |

Benefits of retrieval-augmented generation

For generative AI, your data is your differentiator. Once you get started with RAG, you'll benefit from:

- Quicker time to value, at lower cost

Training an LLM takes a long time and is very costly. By offering a more rapid and affordable way to introduce new data to the LLM, RAG makes generative AI accessible and reliable for customer-facing and back-office operations.

- Personalization of user interactions

By integrating specific customer 360 data with the extensive general knowledge of the LLM, RAG personalizes user interactions via chatbots and customer service agents, with next-best-action and cross-sell and up-sell recommendations tailored for the customer in real time. Retrieval-augmented generation enables AI personalization at scale.

- Improved user trust

RAG LLMs deliver reliable information through a combination of data accuracy, freshness, and relevance, personalized for a specific user. User trust protects and even elevates the reputation of your brand.

- Elevated user experience and reduced customer care costs

Chatbots based on customer data elevate the user experience and reduce customer care costs, by increasing first contact resolution rates and decreasing the overall number of service calls.


07

Recommendations for implementing RAG

In its 12/2023 report, “Emerging Tech Impact Radar: Conversational Artificial Intelligence”, Gartner estimates that widespread enterprise adoption of RAG will take a few years because of the complexities involved in:

- Applying generative AI (GenAI) to self-service customer support mechanisms, like chatbots

- Keeping sensitive data hidden from people who aren’t authorized to see it

- Combining insight engines with knowledge bases, to run the search retrieval function

- Indexing, embedding, pre-processing, and/or graphing enterprise data and documents

- Building and integrating retrieval pipelines into applications

All of the above are challenging for enterprises due to skill set gaps, data sprawl, ownership issues, and technical limitations.

Also, as vendors start offering tools and workflows for data onboarding, knowledge base activation, and RAG application design (including conversational AI chatbots), enterprises will become more actively involved in grounding their data for GenAI consumption.

In the Gartner RAG report of 1/2024, “Quick Answer: How to Supplement Large Language Models with Internal Data”, Gartner generative AI analysts advise enterprises preparing for RAG to:

- Select a pilot use case, in which business value can be clearly measured.

- Classify your use case data, as structured, semi-structured, or unstructured, to decide on the best ways of handling the data and mitigating risk.

- Assemble all the metadata you can, because it provides the context for your RAG deployment and the basis for selecting your enabling technologies.

In its 1/2024 article, “Architects: Jump into Generative AI”, Forrester claims that for RAG-focused architectures, gates, pipelines, and service layers work best. So, if you’re thinking about implementing generative AI apps, make sure they:

- Feature intent and governance gates on both ends

- Flow through pipelines that can engineer and govern prompts

- Deal with grounding AI through RAG

RAG chatbot: A natural starting point

When customers need a quick answer to a question, a RAG chatbot can be extremely helpful. The problem is that most conventional bots are trained on a limited number of intents (question/answer combinations), and they lack context, in the sense that they give the same answer to different users. As a result, their responses are often ineffective, which makes their usefulness questionable.

RAG tools can make conventional bots a lot smarter by empowering the LLM to provide answers to questions that aren’t on the intent list – and that are contextual to the user.

For example, an airline chatbot responding to a platinum frequent flyer asking about an upgrade on a particular flight based on accumulated miles won’t be very helpful if it winds up answering, “Please contact frequent flyer support.” After all, a key goal of a generative AI chatbot is to resolve the request without handing it off to a human.

But once RAG augments the airline’s LLM with that particular user’s dataset, a much more contextual response could be generated, such as, “Francis, you have 929,100 miles at your disposal. For Flight EG17 from New York to Madrid, departing at 7 pm on November 5, 2024, you could upgrade to business class for 30,000 miles, or to first class for 90,000 miles. How would you like to proceed?”

What a difference!
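Part of what makes an answer like this possible is that the retrieved data (miles balance, upgrade prices) is concrete enough for deterministic checks alongside the LLM call. Here is a hedged sketch using the figures from the example; `upgrade_options` and its inputs are hypothetical names, not an actual airline or K2view API.

```python
def upgrade_options(miles_balance: int, prices: dict[str, int]) -> dict[str, int]:
    """Return each affordable cabin with the miles that would remain after upgrading."""
    return {
        cabin: miles_balance - cost
        for cabin, cost in prices.items()
        if cost <= miles_balance
    }


options = upgrade_options(929_100, {"business": 30_000, "first": 90_000})
# options == {"business": 899_100, "first": 839_100}
```

Passing pre-computed figures like these into the augmented prompt lets the LLM focus on phrasing the answer rather than doing the arithmetic itself.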

The Q&A nature of chat interactions makes the RAG chatbot an ideal pilot use case, because understanding the context of a question, and of the user, leads to a more accurate, relevant, and satisfying response.

In fact, chatbots that are used by service and sales agents are the natural entry point for RAG-based generative AI apps.

08

Enhancing RAG with Model Context Protocol (MCP)

To maximize the effectiveness of Retrieval-Augmented Generation within enterprise environments, it's crucial to provide LLMs with seamless access to relevant, real-time data. The Model Context Protocol (MCP) serves as an open standard that facilitates this integration by standardizing how applications supply context to LLMs.

Think of MCP as a universal connector—much like a USB-C port—that allows AI models to interface with various data sources and tools in a consistent manner.

By implementing MCP, enterprises can ensure that their RAG systems have structured, secure, and real-time access to the necessary data, enhancing the models' ability to generate accurate and contextually relevant responses.
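MCP messages are JSON-RPC 2.0. As a rough sketch, this is how a client might build a `tools/call` request asking an MCP server for one customer’s data. The tool name `get_customer_360` and its `customer_id` argument are hypothetical; a real server advertises its actual tools via `tools/list`, and the transport layer (for example, stdio or HTTP) is omitted here.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by an enterprise data MCP server.
request = make_tool_call(1, "get_customer_360", {"customer_id": "C1"})
```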

K2view's platform supports MCP, enabling organizations to bridge the gap between their data ecosystems and AI applications efficiently.

09

Real-time application data retrieval

The need for RAG to access enterprise application data is especially critical in customer-facing use cases, where personalized, accurate responses are required in real time.

Sample use cases include a customer care agent answering a service call, or a conversational AI bot providing self-service technical support to authenticated users on a company portal. The data needed for these scenarios is typically found in enterprise systems, like CRM, billing, and ticketing systems.

A real-time 360° view of the relevant business entity is required, be they customers, employees, suppliers, products, loans, etc. To achieve this, you need to organize your enterprise data by whichever business entities are relevant for your use case.

But the process of locating, accessing, integrating and unifying enterprise data in real time – and then using it to ground your LLM – is extremely complex, because:

- Fragmented enterprise data is spread across many different applications, with different databases, structures, and formats.

- Real-time data retrieval for 1 entity (e.g., a single customer) typically requires high-complexity and high-cost data management.

- High-scale and low-latency requirements add further complexity.

- Clean, complete, and current data must be delivered every time.

- Privacy and security concerns dictate that your RAG LLM must only be able to access the data the user is authorized to see.

Finally, to give your LLM tools visibility into all your cross-system data, an AI database schema generator should be part of any enterprise RAG solution.

10

Current state of retrieval-augmented generation

Many companies are piloting RAG chatbots on internal users like customer service agents because they’re hesitant to use them in production, primarily due to issues surrounding generative AI hallucinations, privacy, and security. As they become more reliable, this trend will change.

In fact, new RAG generative AI use cases are emerging all over the place, for example:

- A stock investor wishing to see the commissions she was charged over the last quarter

- A hospital patient wanting to compare the drugs he received to the payments he made

- A telco subscriber choosing a new Internet-TV-phone plan since his is about to terminate

- A car owner requesting a 3-year insurance claim history to reduce her annual premium

Today, RAG is mainly used to provide accurate, contextual, and timely answers to questions via chatbots, email, texting, and other conversational AI applications. In the future, RAG AI might also be used to suggest appropriate actions based on contextual information and user prompts.

For example, if today RAG GenAI is used to inform army veterans about reimbursement policies for higher education, in the future it might list nearby colleges, and even recommend programs based on the applicant’s previous experience and military training. It may even be able to generate the reimbursement request itself.

11

From GenAI-ready data to grounded GenAI apps

When all’s said and done, it’s difficult to deliver strategic value from generative AI today because your LLMs lack business context, cost too much to retrain, and hallucinate too frequently. What's needed is a strong dose of LLM grounding.

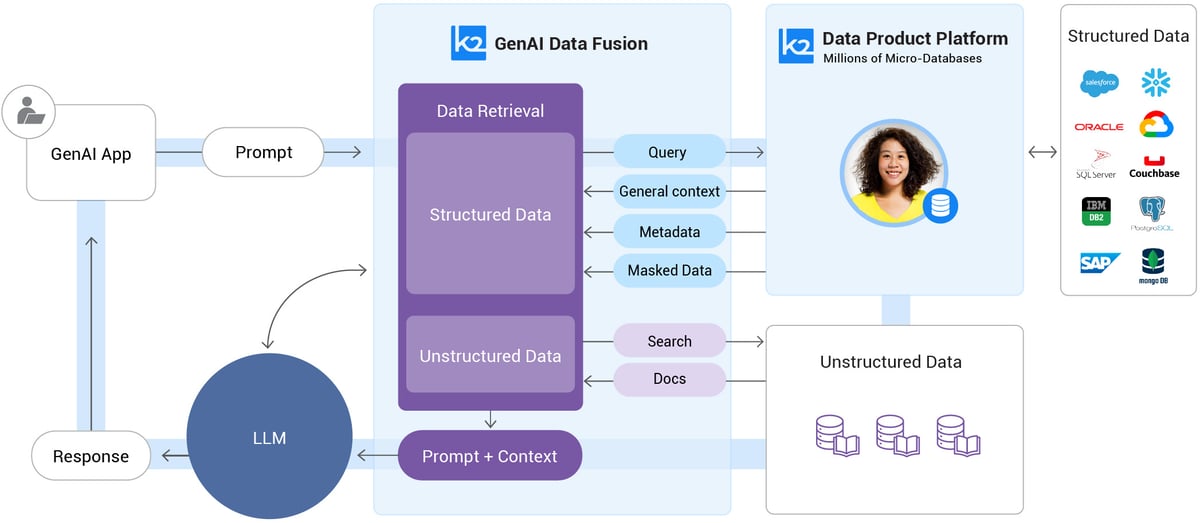

K2view GenAI Data Fusion is a patented, complete retrieval-augmented generation solution that grounds your generative AI apps with real-time, multi-source, AI-ready data.

With GenAI Data Fusion, your AI-ready data is:

- Ready for generative AI

- Deliverable in real-time and at massive scale

- Complete and up-to-date

- Governed, secured, and trusted

The diagram illustrates how K2view Data Product Platform and Micro-Database™ technology enable GenAI Data Fusion to access structured data:

- The user enters a prompt, which triggers GenAI Data Fusion to access the company's internal data sources.

- Micro-Database technology kicks in to perform queries on the relevant business entity only (e.g., a specific customer).

- The original prompt is augmented with the new data, metadata, and context to create smart contextual prompts that are injected into the LLM.

- The LLM uses the augmented prompt to generate a more accurate and relevant response, which is then provided to the user.

The Micro-Database: Fueling RAG AI with enterprise data

K2view GenAI Data Fusion is built on a Micro-Database foundation that organizes your fragmented, multi-source enterprise application data into 360° views of your business entities (customers, products, loans, work orders, etc.), making them accessible to your RAG AI apps in real time.

Each 360° view is stored in its own Micro-Database, secured by a unique encryption key, compressed by up to 90%, and optimized for low footprint and low-latency RAG access.

K2view manages the data for each business entity in its own, high-performance, always-fresh Micro-Database – ever-ready for real-time access by RAG workflows.

Designed to continually sync with underlying sources, the Micro-Database can be modeled to capture any business entity – and comes with built-in data access controls and dynamic data masking capabilities.
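Continuous sync with source systems is commonly implemented with change data capture (CDC). The sketch below applies an ordered stream of insert, update, and delete events to an in-memory store keyed by entity ID, so each entity’s view reflects the latest source state. The `ChangeEvent` format is an assumption for illustration; it is not K2view’s format or that of any specific CDC tool.

```python
from dataclasses import dataclass


@dataclass
class ChangeEvent:
    op: str          # "insert", "update", or "delete" (hypothetical event format)
    entity_id: str
    data: dict


def apply_events(store: dict[str, dict], events: list[ChangeEvent]) -> dict[str, dict]:
    """Apply CDC events in order so each entity's view stays in sync with its source."""
    for event in events:
        if event.op == "insert":
            store[event.entity_id] = dict(event.data)
        elif event.op == "update":
            store.setdefault(event.entity_id, {}).update(event.data)
        elif event.op == "delete":
            store.pop(event.entity_id, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return store
```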

Micro-Database technology features:

- Real-time data access, for any entity

- 360° views of any business entity

- Fresh data all the time, in sync with underlying systems

- Dynamic data masking, to protect sensitive AI data

- Role-based access controls, to safeguard data privacy

- Reduced TCO, via a small footprint and the use of commodity hardware

An entity’s data can be queried by, and injected into, the RAG LLM as a contextual prompt in milliseconds. Entity-based RAG essentially assures AI data readiness.

GenAI Data Fusion can:

- Feed real-time data about a particular customer or any other business entity.

- Dynamically mask PII (Personally Identifiable Information) or other sensitive data.

- Be reused for handling Data Subject Access Requests (DSARs), or for suggesting cross-sell recommendations.

- Access enterprise systems via API, CDC, messaging, or streaming, in any combination, to unify data from multiple source systems.

It powers your RAG AI apps to respond with accurate recommendations, information, and content, while minimizing RAG hallucination issues, across many use cases.

12

Conclusion: Empowering enterprise AI with real-time data

Retrieval-Augmented Generation (RAG) enhances large language models by integrating real-time, contextual data, ensuring AI outputs are accurate and enterprise-ready. K2view's GenAI Data Fusion platform exemplifies this by grounding AI applications with real-time enterprise data, delivering trustworthy and context-aware responses.

13

Retrieval-Augmented Generation FAQs

1. What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is a Generative AI (GenAI) framework that augments a Large Language Model (LLM) with fresh, trusted data retrieved from authoritative internal knowledge bases and enterprise systems. RAG generates more informed and reliable responses to LLM prompts, minimizing hallucinations and increasing user trust in GenAI apps.

2. What’s the relationship between Generative AI, LLMs, and RAG?

Generative AI is any type of artificial intelligence that creates new content. LLMs are an excellent example of GenAI: they can generate text, answer questions, translate languages, and even write creative content. RAG is a technique that enhances LLM capabilities by augmenting the general, publicly available knowledge the model learned in training with fresh, trusted internal data and information.

3. What types of information and data does RAG make use of?

- Unstructured data found in knowledge bases such as articles, books, conversations, documents, and web pages

- Structured data found in databases, knowledge graphs, and ontologies

- Semi-structured data, stored in spreadsheets, XML and JSON files

4. Can RAG provide sources for the information it retrieves?

Yes. If the knowledge bases accessed by RAG have references to the retrieved information (in the form of metadata), sources can be cited. Citing sources allows errors to be identified and easily corrected, so that future questions won’t be answered with incorrect information.
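One simple way to make citations possible is to number each retrieved chunk in the prompt and keep a map from number to source metadata, so the model can cite markers like `[1]` and the application can resolve them back to documents. The prompt format and the `text`/`source` fields are assumptions for illustration.

```python
import re


def build_cited_context(chunks: list[dict]) -> tuple[str, dict[int, str]]:
    """Number each chunk for the prompt and return the context plus a marker-to-source map."""
    lines, sources = [], {}
    for n, chunk in enumerate(chunks, start=1):
        lines.append(f"[{n}] {chunk['text']}")
        sources[n] = chunk["source"]
    return "\n".join(lines), sources


def cited_sources(answer: str, sources: dict[int, str]) -> list[str]:
    """Resolve [n] markers in the model's answer to source documents, in order of first use."""
    seen = []
    for n in map(int, re.findall(r"\[(\d+)\]", answer)):
        if n in sources and sources[n] not in seen:
            seen.append(sources[n])
    return seen
```

Markers that don’t map to a retrieved chunk (for example, a hallucinated `[7]`) are ignored, which also makes such errors easy to detect.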

5. How does RAG differ from traditional generative models?

Compared to traditional generative models, which base their responses only on their training data and the input prompt, retrieval-augmented generation retrieves relevant information from private company sources before generating an output. This process leads to more accurate and contextually rich responses, and, therefore, more positive and satisfying user experiences.

6. What is RAG's main component?

As its name suggests, RAG inserts a data retrieval component into the generation process, aimed at enhancing the relevance and reliability of the generated responses.

7. How does the retrieval model work?

The retrieval model collects and prioritizes the most relevant information and data from underlying enterprise systems and knowledge bases, based on the user query, transforms them into an enriched, contextual prompt, and invokes the LLM via its API. The LLM then generates a more accurate and coherent response for the user.

8. What are the advantages of using RAG?

- Quicker time to value at lower cost, versus LLM retraining or fine-tuning

- Personalization of user interactions

- Improved user trust because of reduced hallucinations

9. What challenges are associated with RAG?

- Accessing all the information and data stored in internal knowledge bases and enterprise systems in real time

- Generating the most effective and accurate prompts for the RAG framework

- Keeping sensitive data hidden from people who aren’t authorized to see it

- Building and integrating retrieval pipelines into applications

10. When is RAG most helpful?

Retrieval-augmented generation has various applications such as conversational agents, customer support, content creation, and question answering systems. It proves particularly useful in scenarios where access to internal information and data enhances the accuracy and relevance of the generated responses.
