K2view Data Tokenization

The business entity approach: protecting data in every industry

Financial services providers

Reduce PCI DSS scope and comply with all relevant regulatory requirements, including Nacha and SOX.

Healthcare, insurance, and pharma

Safeguard patient data by replacing PHI and identifiers with tokens, in compliance with HIPAA and similar regulations.

Retail and e-commerce

Protect customer privacy, share data cooperatively, and comply with GDPR, CPRA, PCI DSS, and other laws.

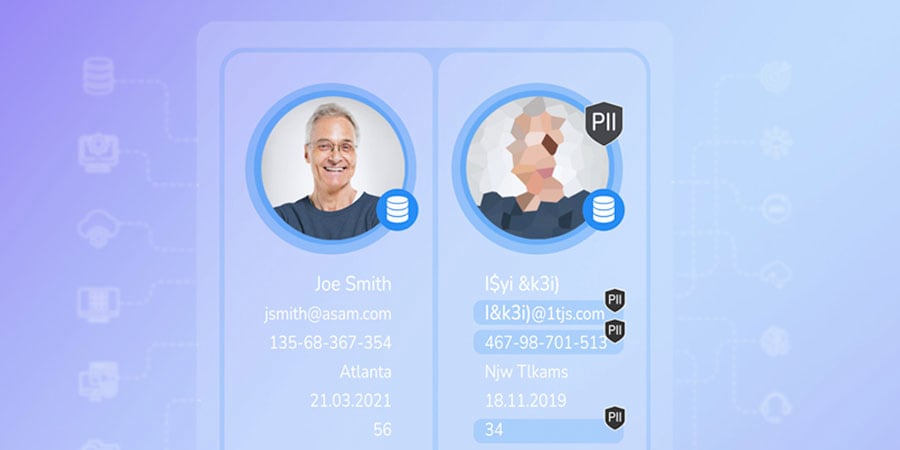

K2view brings a business entity approach to data tokenization, in which sensitive data and the corresponding tokens are securely stored and managed by business entity (e.g., customer, investor, or patient) in a patented Micro-Database™. Each Micro-Database serves as an individually encrypted and compressed "micro" token vault for a single entity.

For example, instead of maintaining one centralized "mega" token vault for its 10 million customers, with the ever-present potential for a mass data breach, an enterprise using entity-based data tokenization avoids that single point of failure by creating one micro-vault per customer (in this case, 10 million micro-vaults).
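To make the micro-vault idea concrete, here is a minimal Python sketch that keeps one token map per business entity rather than one shared vault. It is a hypothetical illustration, not K2view's API: the names `MicroVault` and `EntityVaultStore` are invented, and the per-vault key is only generated, never used for encryption (a real Micro-Database would be individually encrypted and compressed).

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class MicroVault:
    """Hypothetical per-entity vault holding one entity's token-to-value map."""

    entity_id: str
    # Per-vault key placeholder; this sketch generates it but does not encrypt with it.
    key: bytes = field(default_factory=lambda: secrets.token_bytes(32))
    tokens: dict = field(default_factory=dict)

    def tokenize(self, value: str) -> str:
        """Store the value under a fresh random token and return the token."""
        token = "tok_" + secrets.token_hex(8)
        self.tokens[token] = value
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; raises KeyError for unknown tokens."""
        return self.tokens[token]


class EntityVaultStore:
    """Keeps one MicroVault per business entity instead of one shared vault."""

    def __init__(self) -> None:
        self._vaults: dict = {}

    def vault_for(self, entity_id: str) -> MicroVault:
        """Return the entity's vault, creating it on first use."""
        if entity_id not in self._vaults:
            self._vaults[entity_id] = MicroVault(entity_id)
        return self._vaults[entity_id]


store = EntityVaultStore()
t1 = store.vault_for("customer-1").tokenize("4111 1111 1111 1111")
t2 = store.vault_for("customer-2").tokenize("5500 0000 0000 0004")

print(store.vault_for("customer-1").detokenize(t1))  # 4111 1111 1111 1111
print(t2 in store.vault_for("customer-1").tokens)    # False: vaults are isolated
```

Because each entity's tokens live in a separate vault with its own key, a lookup against one customer's vault cannot resolve another customer's token.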

Enterprise performance

Protect megadata in microseconds

- Scalability to handle a high volume of tokenization requests while maintaining low latency.

- The business entity approach maintains referential integrity across all systems.

- K2view is ACID-compliant (Atomicity, Consistency, Isolation, Durability) for data and token management.
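One common, generic way to keep tokenized records joinable across systems is deterministic (keyed) tokenization: the same input always yields the same token. The sketch below uses HMAC-SHA-256 from Python's standard library; it is not necessarily how K2view preserves referential integrity, and the key and record layout are hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared key: every system that must join on tokens uses the same one.
TOKEN_KEY = b"example-key-do-not-use-in-production"


def deterministic_token(value: str, key: bytes = TOKEN_KEY) -> str:
    """Map a value to a stable token with HMAC-SHA-256 so joins still line up."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


# The same customer ID appears in two hypothetical source systems.
crm_rows = [{"customer_id": "C-1001", "name": "Ada"}]
billing_rows = [{"customer_id": "C-1001", "balance": 250}]

crm_tok = [{**r, "customer_id": deterministic_token(r["customer_id"])} for r in crm_rows]
billing_tok = [{**r, "customer_id": deterministic_token(r["customer_id"])} for r in billing_rows]

# Joining on the tokenized key still matches, without exposing the real ID.
joined = [
    {**c, **b}
    for c in crm_tok
    for b in billing_tok
    if c["customer_id"] == b["customer_id"]
]
print(joined)
```

The trade-off is that deterministic tokens reveal when two records share a value, which is exactly what makes joins work; random tokens hide that but need a vault lookup to correlate records.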

Ingestion from any source

Tokenize across systems

- The solution continuously ingests fresh data from any number and type of source systems.

- High-performance token servers can rapidly generate new tokens and re-identify original data when needed.

- PII discovery, identification, unification, transformation, and storage all occur with zero impact on underlying systems.

- Support for Bring Your Own Key (BYOK) with third-party services.

Maximum security

Experience unrivaled data security without compromise

- All data is encrypted with AES-256 or hashed with SHA-512, both at rest and in flight.

- Role-based access control (RBAC) governs decryption and de-tokenization, ensuring that only authorized users can access sensitive data, down to the most granular level.

- Integrates seamlessly with LDAP for user management, authentication, and access control.

- Built-in dynamic data masking provides an added layer of protection for data that doesn't require restoration to its original value.
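The access rules above can be pictured as a gate in front of de-tokenization: authorized roles get clear text, everyone else gets a dynamically masked value. This Python sketch is a hypothetical illustration, not K2view's implementation; the role names, permission map, and vault contents are invented, and a real deployment would resolve roles from a directory such as LDAP. It also shows a one-way SHA-512 digest via `hashlib`.

```python
import hashlib

# Hypothetical role-to-permission map; a real system would pull roles from LDAP.
ROLE_PERMISSIONS = {
    "fraud_analyst": {"detokenize"},
    "support_agent": set(),
}

# Hypothetical vault contents for one entity.
VAULT = {"tok_7f3a": "4111111111111111"}


def mask(value: str, visible: int = 4) -> str:
    """Dynamic masking: hide all but the last `visible` characters."""
    return "*" * (len(value) - visible) + value[-visible:]


def read_card(token: str, role: str) -> str:
    """Return clear text only if the role may detokenize; otherwise a masked value."""
    clear = VAULT[token]
    if "detokenize" in ROLE_PERMISSIONS.get(role, set()):
        return clear
    return mask(clear)


def fingerprint(value: str) -> str:
    """One-way SHA-512 digest of a value (hex encoded)."""
    return hashlib.sha512(value.encode("utf-8")).hexdigest()


print(read_card("tok_7f3a", "fraud_analyst"))  # 4111111111111111
print(read_card("tok_7f3a", "support_agent"))  # ************1111
print(len(fingerprint("4111111111111111")))    # 128
```

Unknown roles fall through to the masked value, so access is denied by default.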

Token lifecycle management

Ensure the accuracy, security, and reliability of your tokens

- Centrally manage token generation, assignment, mapping, encryption, storage, retrieval, expiration, and revocation.

- Generate format-preserving tokens to accommodate various data types.

- Seamlessly replace existing tokens with new ones, on a schedule or in response to a triggering event.

- Increase usability by assigning a specific Time-To-Live (TTL) expiration date, after which the token can revert to clear text.

- Restore tokenized data in case of accidental data loss or corruption.
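The lifecycle operations listed above (format-preserving generation, TTL expiration, rotation, and revocation) can be sketched in a few lines of Python. This is a hypothetical illustration, not K2view's implementation: `LifecycleVault` and its methods are invented names, and the token generator is a simple random-digit substitution that keeps length, separators, and the last four digits. It is not format-preserving encryption such as NIST FF1.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Dict, Optional


def format_preserving_token(value: str, keep_last: int = 4) -> str:
    """Swap each digit for a random digit, keeping length, separators, and the last digits."""
    remaining = sum(ch.isdigit() for ch in value)
    out = []
    for ch in value:
        if ch.isdigit():
            remaining -= 1  # digits still to come after this one
            out.append(ch if remaining < keep_last else str(secrets.randbelow(10)))
        else:
            out.append(ch)
    return "".join(out)


@dataclass
class TokenRecord:
    value: str
    expires_at: float  # Unix timestamp after which the token counts as expired


class LifecycleVault:
    """Hypothetical vault tracking issue, expiry (TTL), rotation, and revocation."""

    def __init__(self) -> None:
        self._records: Dict[str, TokenRecord] = {}

    def issue(self, value: str, ttl_seconds: float) -> str:
        token = format_preserving_token(value)
        self._records[token] = TokenRecord(value, time.time() + ttl_seconds)
        return token

    def is_expired(self, token: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        return now >= self._records[token].expires_at

    def revoke(self, token: str) -> str:
        """Remove the token and return the value it stood for."""
        return self._records.pop(token).value

    def rotate(self, old_token: str, ttl_seconds: float) -> str:
        """Replace a token with a new one for the same value; the old token is revoked."""
        return self.issue(self.revoke(old_token), ttl_seconds)

    def detokenize(self, token: str) -> str:
        return self._records[token].value


vault = LifecycleVault()
tok = vault.issue("4111-1111-1111-1111", ttl_seconds=3600)
new_tok = vault.rotate(tok, ttl_seconds=3600)
print(vault.detokenize(new_tok))  # 4111-1111-1111-1111
```

A production system would also check each new token for collisions with existing ones and apply a policy for what happens when a TTL expires, such as revoking the token or, as described above, reverting it to clear text.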

K2view Data Tokenization Benefits

- Better data protection, due to multiple levels of security and encryption.

- DevOps-ready solution, with APIs that integrate with CI/CD pipelines.

- Quick and easy rollout, with a software-only implementation in weeks.

- Flexible deployment in on-prem, cloud, or hybrid environments.

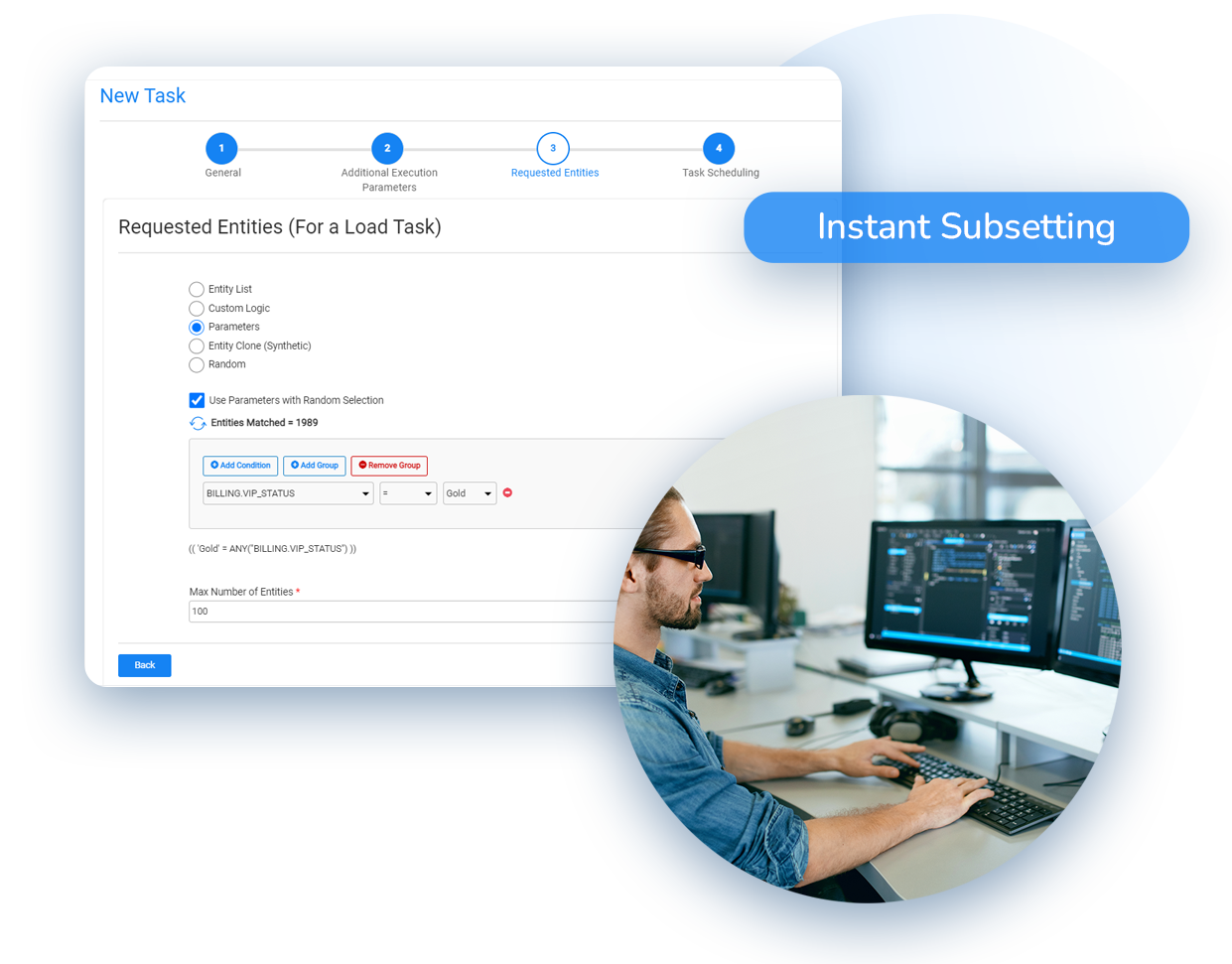

- No-code, intuitive GUI for greater productivity and less burden on IT.

- Future-proof solution designed to integrate with any data source.

- Scalable architecture that supports an unlimited number of data sources.

- Support for any data, in any format, language, or technology.
