In the Gartner Magic Quadrant for Data Integration Tools for 2022, the following 10 trends and drivers are listed:

1. Year-over-year growth

The data integration market's annual growth rate rose from 6.8% in 2020 to 11.8% in 2021, and its 5-year Compound Annual Growth Rate (CAGR) increased from 7.0% to 8.5%. Cloud adoption is expanding as well: the iPaaS market grew by 40.2% in 2021.
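For readers less familiar with CAGR, the calculation behind such a figure can be sketched as below. The market sizes used here are hypothetical and chosen only for illustration; they are not taken from the Gartner report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.085 means 8.5%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Hypothetical figures, NOT from the Gartner report:
# a market that grows from 100 to 150 (any currency unit) over 5 years.
rate = cagr(100.0, 150.0, 5)
print(f"5-year CAGR: {rate:.1%}")
```

A single strong year raises the 5-year CAGR only modestly, which is consistent with annual growth moving by five points while the CAGR moves by 1.5.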

2. Market disruption by small vendors

The top 5 vendors in the data integration market had a combined market share of 71% in 2017. By 2021, their collective market share dropped to 52%. One explanation for this phenomenon is that smaller vendors are disrupting the market with more focused, flexible offerings.

3. Demand for modern data delivery styles and cloud data integration

Vendors gaining market share are focusing on data virtualization, data replication, or cloud data integration. The top 5 vendors in this group collectively grew their revenue by 32% in 2021. To counter their drop in market share, the larger vendors must find the right tradeoff between all-encompassing platforms and easily accessible point solutions.

4. Augmented data integration with data fabric

Data fabric answers the immediate need for augmented data integration. By taking a data-as-a-product approach, data fabric architecture automates the design, integration and deployment of data products, resulting in faster, more automated data access and sharing.

5. Decentralization with data mesh

Enterprises exploring data mesh need decentralized, domain-oriented delivery of data product datasets. Business teams are looking to data mesh architecture to:

– Access the data they need on their own, to meet their SLAs more effectively

– Design data products based on their own, unique subject matter expertise

– Benefit from semantic modeling, ontology creation, and knowledge graph support

6. Cost optimization through financial governance

Data integration tools need to be able to track and forecast all the costs associated with cloud integration workloads. Data teams should be able to connect the cost of running data integration workloads to business value, and allocate processing capacity accordingly. Data integration tools should therefore support financial governance, including price-performance calculations.
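A price-performance calculation of this kind might look like the minimal sketch below. The `WorkloadRun` record, its field names, and all figures are hypothetical; real tools expose cost and usage data in their own formats, and the business value would be an estimate supplied by the business.

```python
from dataclasses import dataclass


@dataclass
class WorkloadRun:
    """Hypothetical record of one integration workload run."""
    name: str
    cloud_cost_usd: float      # compute, storage, and egress cost of the run
    rows_processed: int
    business_value_usd: float  # estimated value attributed by the business


def cost_per_million_rows(run: WorkloadRun) -> float:
    """Price-performance: dollars spent per million rows processed."""
    return run.cloud_cost_usd / (run.rows_processed / 1_000_000)


def value_to_cost_ratio(run: WorkloadRun) -> float:
    """Estimated business value returned per dollar of cloud cost."""
    return run.business_value_usd / run.cloud_cost_usd


# Illustrative numbers only.
runs = [
    WorkloadRun("adhoc_marketing_export", 300.0, 5_000_000, 450.0),
    WorkloadRun("nightly_orders_sync", 120.0, 40_000_000, 900.0),
]

# Rank workloads so capacity can be allocated to those returning the most value.
ranked = sorted(runs, key=value_to_cost_ratio, reverse=True)
for run in ranked:
    print(run.name, cost_per_million_rows(run), value_to_cost_ratio(run))
```

Tracking both metrics separates workloads that are merely cheap to run from those that justify their cost.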

7. Data engineering support

Data engineering translates data into usable forms by building and operationalizing data pipelines across data and analytics platforms, applying software engineering and infrastructure operations best practices. With more data infrastructure operating in the cloud, platform operations are becoming a core responsibility.

8. DataOps support

Data integration tools should support DataOps enablement. DataOps is focused on building collaborative workflows to make data delivery more predictable. Key features include the ability to:

– Deliver data pipelines through CI/CD

– Automate testing and validate code

– Integrate data integration tools with project management and version-control tooling

– Balance low-code capabilities

– Manage non-production environments in an agile way
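As an illustration of automated testing in a pipeline, the sketch below pairs a transformation step with a data quality check and a test that a CI job could run (for example with pytest). The function names, record fields, and rules are hypothetical and not tied to any particular data integration tool.

```python
def normalize_customer(record: dict) -> dict:
    """Hypothetical pipeline step: coerce the id to int, trim and lowercase the email."""
    return {
        "customer_id": int(record["customer_id"]),
        "email": record["email"].strip().lower(),
    }


def validate_batch(records: list[dict]) -> list[str]:
    """Return data quality errors; an empty list means the batch passes."""
    errors = []
    seen_ids = set()
    for i, rec in enumerate(records):
        if "@" not in rec["email"]:
            errors.append(f"row {i}: invalid email {rec['email']!r}")
        if rec["customer_id"] in seen_ids:
            errors.append(f"row {i}: duplicate customer_id {rec['customer_id']}")
        seen_ids.add(rec["customer_id"])
    return errors


def test_normalize_and_validate():
    """Test that a CI job would run on every change to the pipeline code."""
    raw = [
        {"customer_id": "1", "email": "  Ana@Example.com "},
        {"customer_id": "2", "email": "bob@example.com"},
    ]
    cleaned = [normalize_customer(r) for r in raw]
    assert cleaned[0] == {"customer_id": 1, "email": "ana@example.com"}
    assert validate_batch(cleaned) == []
```

Keeping checks like these in version control alongside the pipeline code lets the same tests gate promotion from non-production to production environments.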

9. Hybrid and inter-cloud data management support

Cloud architectures for data management (iPaaS) span hybrid, multi-cloud, and inter-cloud deployments. Managing data across diverse and distributed environments has both advantages and disadvantages. Data location affects:

– Application latency SLAs

– Data sovereignty

– Financial models

– High availability and disaster recovery strategies

– Performance

According to Gartner, almost half of all data management implementations use both on-prem and cloud environments. Support for hybrid data management and integration has therefore become critical – and today’s data integration tools are expected to dynamically construct, or reconstruct, integration infrastructure across a hybrid data management environment.

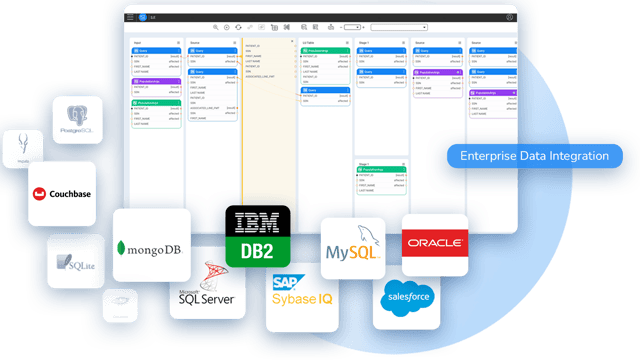

10. Prevention of application/CSP/database lock-in

There’s a definite need for independent data integration tools that do not rely on the storage of data in a vendor repository or cloud ecosystem. Embedded data integration capabilities, delivered by vendors, could lead to potential lock-in issues, costs for leaving the service, and data silos. Although some native CSP data integration tools allow for two-way integration (to and from their own cloud data stores), any organization using more than one cloud service provider should acquire independent data integration tools.

The 5 most common challenges data teams face implementing data integration include:

A data product approach to data integration processes and delivers data by business entities. When data integration tools are based on data products, they allow data engineers to build and manage reusable data pipelines, for both operational and analytical workloads.