K2VIEW EBOOK

What is test data?

The definitive guide

Test data is a compliant dataset used by development and QA teams to ensure that software applications perform as expected while maximizing test coverage.


01

Why test data matters

Although enterprises have spent decades advancing and refining software development methodologies and practices, many data engineering teams still find it frustrating, tedious, and time-consuming to prepare test data – extracting the data from multiple sources, and then formatting, scrubbing, masking, and provisioning it to lower environments.

What’s needed is a test data management tool that automates test data preparation and provisioning, to improve test data quality, ensure compliance with data privacy laws, accelerate time to market, and validate functionality per test case or scenario. The main objective is to ensure that development and QA teams have access to the test data they need, whenever they need it.

02

What is test data?

Test data is data that’s used to test software applications – specifically, to locate defects, and generally, to make sure that the app is working as expected. Created manually or with a tool, test data can be used to test software functionality, performance, and security.

Positive test data is used to test software under normal operating conditions, while negative test data is used to test software under extreme conditions, such as when invalid inputs are entered, or when the system is overloaded.
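The difference between positive and negative test data can be sketched in a few lines of Python. The `parse_age` function and its 0 to 130 range are hypothetical, invented purely for illustration; the point is only that positive cases pair valid inputs with expected outputs, while negative cases list inputs the software should reject.

```python
def parse_age(value: str) -> int:
    """Hypothetical function under test: parse an age between 0 and 130."""
    age = int(value)  # int() raises ValueError for non-numeric or empty input
    if not 0 <= age <= 130:
        raise ValueError(f"age out of range: {age}")
    return age


# Positive test data: valid inputs paired with their expected outputs.
POSITIVE_CASES = [("0", 0), ("42", 42), ("130", 130)]

# Negative test data: invalid inputs that the function should reject.
NEGATIVE_CASES = ["-1", "131", "abc", ""]


def run_cases() -> dict:
    """Run both sets of cases and count how many behave as expected."""
    results = {"passed": 0, "failed": 0}
    for raw, expected in POSITIVE_CASES:
        ok = parse_age(raw) == expected
        results["passed" if ok else "failed"] += 1
    for raw in NEGATIVE_CASES:
        try:
            parse_age(raw)
            results["failed"] += 1  # invalid input was wrongly accepted
        except ValueError:
            results["passed"] += 1  # invalid input was correctly rejected
    return results
```

Calling `run_cases()` exercises both kinds of data in one pass, so a missing range check or a missing error path shows up as a failed case.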

Well-designed test data is representative of real data and compliant with privacy laws. On the flip side, poorly designed test data leads to false positives and false negatives, which waste time and effort and negatively impact the employee experience.

To create test data efficiently, testing teams need to:

- Understand the software requirements fully.

- Define the data subset criteria, as well as the source and target environments.

- Generate test data, if any data is missing from the higher environment.

- Trust that the test data is compliant and complete (based on the subset criteria).

- Automate the entire process.

03

How quality test data drives agile development

One of the top test data management trends is shift-left testing, a quality engineering approach that prioritizes testing early in the development lifecycle – so that testing doesn’t become a bottleneck in agile software development. The idea behind shift-left testing is to identify and fix defects as early as possible in the development process, reducing the time and cost associated with testing and debugging later on in the development cycle.

Traditionally, testing is performed at the end of the development cycle once the software development has been completed. A shift-left testing approach starts testing at the beginning of the lifecycle, with tests run in parallel with software development. Early testing reduces the risk of expensive rework and delays later in the development cycle by identifying defects and fixing them earlier.

Shift-left testing also emphasizes collaboration between developers and testers, with the goal of identifying defects and issues as early as possible. Collaboration can reduce the risk of expensive rework and delays by ensuring the software being developed meets the requirements and specifications of the product team.

Shifting testing to the left requires the preparation of compliant test data subsets to enable development and QA teams to quickly test specific scenarios and/or functionality with maximum test coverage. This is achieved by automating and streamlining various processes currently done manually.

By using appropriate test data preparation tools, some organizations have reported as much as a 90-95% reduction in the time taken to provision high-quality test data.

04

How to create test data

Test data can be created manually or automatically using test data management tools (which provision test data from production environments, or generate synthetic test data).

Manually

Manually created test data relies on ETL tools and complex scripting. While adequate for smaller datasets, this approach does not scale to larger ones. Additionally, to create data manually, testers must have a deep understanding of the application, including its database design and all the business rules related to it.

Automatically

Test data management tools provision test data that simulates real-life scenarios. According to Verified Market Research, the global market for these tools is expected to grow from 1 billion in 2022 to 2.5 billion in 2030, at a CAGR of 11%. Much of the functionality of these solutions (e.g., data masking, data tokenization, and synthetic data generation) can be automated, allowing users to generate realistic datasets of various sizes and distributions quickly and easily.

- Using production data ensures that the resultant test data is fresh and accurate, because it’s already been validated against the original database schema. The main problem with this method is data privacy and security. Any sensitive data must be identified and anonymized before being used in test environments – due to legal and compliance issues, depending on the jurisdiction. Production data may also change over time, so it's crucial to consider data versioning to align the test data with the current state of the application.

- Generating synthetic data results in test data that mimics the features, structures, and statistical attributes of production data, while maintaining compliance with data privacy regulations. Synthetic test data is commonly used to test applications in a closed test environment before deployment to production. It can be more efficient to use than test data sourced from production because it allows testers to generate large amounts of test data quickly and easily.
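As a rough illustration of synthetic data generation, the sketch below builds fictional customer records with Python's standard library. The field names, name list, plan weights, and spend range are all assumptions made up for this example; a real tool would derive them from the structure and statistics of the production data. A fixed seed keeps the output reproducible, so the same dataset can be regenerated for a later test run.

```python
import random
import string


def synthetic_customers(count: int, seed: int = 0) -> list[dict]:
    """Generate `count` fictional customer rows, reproducibly for a given seed."""
    rng = random.Random(seed)  # private generator, so results depend only on the seed
    first_names = ["Ana", "Ben", "Chloe", "Dev", "Elif"]  # illustrative values
    plans = ["basic", "plus", "premium"]
    rows = []
    for customer_id in range(1, count + 1):
        rows.append({
            "customer_id": customer_id,
            "name": rng.choice(first_names),
            # Fictional phone numbers: a fixed prefix plus two random digits.
            "phone": "555-01" + "".join(rng.choices(string.digits, k=2)),
            # Assumed distribution: most customers are on the cheapest plan.
            "plan": rng.choices(plans, weights=[6, 3, 1])[0],
            "monthly_spend": round(rng.uniform(5.0, 200.0), 2),
        })
    return rows
```

Because no value is copied from a real record, a dataset such as `synthetic_customers(1000, seed=7)` contains no personal data to mask, and its size can be changed freely.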

05

What are the challenges facing test data?

QA teams spend almost a third of their testing time dealing with faulty test data. In other words, at least a day a week is wasted on test data provisioning. That’s because the test data must be:

- Sourced

Enterprise data is often dispersed and siloed across many different data sources, including legacy mainframes, SAP, relational databases, NoSQL and cloud environments. For companies relying on SAP, effective SAP test data management is essential to streamline provisioning and ensure compliance. Yet, because data is stored in many different formats, it remains difficult for software teams to quickly access the data they need.

- Subset

The ability to subset large, diverse test datasets into small, focused ones helps QA teams focus on specific test scenarios without the need to process or analyze large volumes of data. Not only is test data subsetting crucial for recreating and correcting problems, it also minimizes the amount of test data and related hardware and software costs.

- Protected

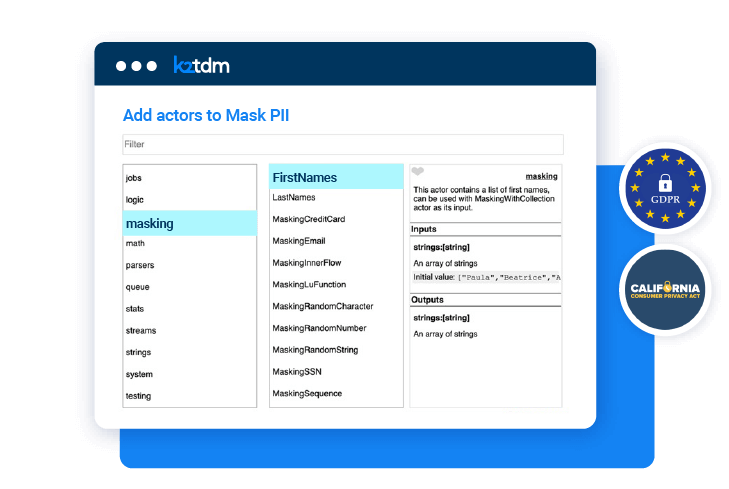

Data privacy laws, including CPRA and GDPR, mandate that Personally Identifiable Information (PII) – like names, addresses, telephone numbers, and Social Security Numbers – be protected in the test environment. Anonymizing sensitive data is often complicated and time-consuming for data teams.

- Consistent

Referential integrity means that relationships between records (for example, a foreign key that points to a primary key) stay consistent across databases and tables. Ensuring the referential integrity of masked test data is critical to its validity.

- Extensive

Test coverage refers to the extent to which an application's code has been tested. It measures the percentage of code, requirements, or functionalities that have been exercised by a set of tests. Defining the required test cases is the first step, but ensuring sufficient test data to fully challenge the test cases is equally important. Lower test coverage tends to translate into higher defect density.

- Lifelike

Test data must be as representative of the original data as possible. Badly designed test data often results in false positive errors, leading to wasted time and effort on non-existent problems. Insufficient test data leads to false negatives, which can impact the quality and reliability of the software.

- Reusable

Reusing test data is a critical capability when verifying software fixes. Versioning test datasets for reuse lets testers re-run the same tests against the same data, both to confirm that the errors found in previous tests have been resolved and to perform regression testing (checking that the fixes haven't introduced new defects).

- Reservable

Testers often overwrite each other's test data inadvertently, leading to corrupted test data. The ability to reserve specific test datasets for specific testers prevents the need to re-provision the test data and re-run the tests.
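Two of the requirements above, subsetting and consistent protection, interact: a masked subset is only valid if child records still point to their parents. The sketch below shows one way this could work, under stated assumptions. The tables, field names, and `SECRET_KEY` are hypothetical, and keyed hashing (HMAC) is just one possible masking technique, chosen here because the same input always produces the same token.

```python
import hashlib
import hmac

# Assumption: in practice the key would come from a secrets manager.
SECRET_KEY = b"demo-key-not-for-production"


def mask(value: str) -> str:
    """Deterministically pseudonymize a value: same input, same token."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:12]


# Hypothetical source tables; orders reference customers by national_id.
customers = [
    {"national_id": "000-00-0001", "name": "Alice", "region": "EU"},
    {"national_id": "000-00-0002", "name": "Bob", "region": "US"},
    {"national_id": "000-00-0003", "name": "Carol", "region": "EU"},
]
orders = [
    {"order_id": 1, "national_id": "000-00-0001", "total": 30.0},
    {"order_id": 2, "national_id": "000-00-0002", "total": 12.5},
    {"order_id": 3, "national_id": "000-00-0003", "total": 99.0},
    {"order_id": 4, "national_id": "000-00-0001", "total": 5.0},
]


def subset_and_mask(region: str) -> tuple[list[dict], list[dict]]:
    """Subset customers by region, keep only their orders, then mask PII."""
    kept = [c for c in customers if c["region"] == region]
    kept_ids = {c["national_id"] for c in kept}
    # Keep only child rows whose parent survived the subset.
    kept_orders = [o for o in orders if o["national_id"] in kept_ids]
    masked_customers = [
        {"national_id": mask(c["national_id"]), "name": mask(c["name"]), "region": c["region"]}
        for c in kept
    ]
    # The same mask() is applied to the foreign key, so the join still works.
    masked_orders = [{**o, "national_id": mask(o["national_id"])} for o in kept_orders]
    return masked_customers, masked_orders
```

Because the key column is masked with the same function in both tables, every masked order still joins to exactly one masked customer, which is the referential integrity requirement described under "Consistent".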

06

What are the benefits of high-quality, on-demand test data?

Enterprises that effectively address their test data challenges benefit from improved:

- Response times

Provisioning the right data, at the right time, to development and testing teams, improves agility and speeds up time to market for new software builds.

- Software quality

High-quality test data, coupled with a superior management tool, optimizes the four DevOps Research and Assessment (DORA) metrics – deployment frequency, lead time for changes, time to restore service, and change failure rate.

- Cost efficiencies

Test data best practices cut costs by speeding up test data provisioning, balancing resources better, enabling test data versioning, expanding test coverage, preventing test data duplication, providing self-service capabilities, and reducing infrastructure costs.

- Compliance

Using data masking tools and synthetic data generation tools on test data enables companies to comply with data protection regulations (like CPRA, GDPR, and HIPAA), ensures that only authorized personnel have access to real data, and minimizes the impact of a data breach by making any exposed data meaningless to hackers.

- Employee experience

Copying production databases into staging environments, and then manually scrubbing, masking, and formatting the data, is a long, tedious, repetitive process for data engineers. Waiting for the data, using the wrong datasets, and dealing with problems related to the test data (e.g., reporting false positives, lacking sufficient test coverage, or overwriting each other’s test data) are issues that plague DevOps and QA teams. The right test data management solution improves job satisfaction for data engineers, as well as DevOps and QA teams.

07

How a business entity approach improves test data

A unique test data management approach ingests and organizes data via business entities (customers, employees, devices, orders, etc.) into a test data store, compressing and masking the data, while maintaining referential integrity. It enables testers to provision compliant test data subsets to their target environments and easily move them from one test environment to another, and from one sprint to the next.

It covers every phase of the test data management lifecycle:

- Defining and sourcing

Relevant test data is identified and extracted using a simple, customizable GUI capable of accessing hundreds of relational database technologies, NoSQL sources, legacy mainframes, flat files, and more.

- Syncing and refreshing

Sync strategies and refresh rates for the test data are applied to each business entity, allowing for full control over the test data.

- Segmenting and subsetting

With the ability to rapidly segment and subset test data, engineering teams speed up software delivery by eliminating long response times, expanding test coverage, and reducing test failures.

- Masking and encrypting

With dynamic data masking, even the most complex rules can be implemented simply and consistently across all data. Each business entity is also encrypted with a different key for additional protection.

- Generating synthetic data

When a business entity schema is defined, it also defines a pathway to synthetic data creation.

- Provisioning and moving the data

Test data management depends on the ability to move data from many sources to many target systems. An entity-based solution executes in-memory, in a distributed environment, so provisioning test data is fast and efficient.
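To make the idea of a business entity more concrete, here is a minimal sketch that gathers everything known about one customer from several source systems into a single unit. It is not a description of any vendor's implementation: the three source tables, the `customer_id` key, and both function names are hypothetical, and real sources would be databases rather than in-memory lists.

```python
# Hypothetical source systems, each holding part of the customer's data.
crm_customers = [
    {"customer_id": 1, "name": "Alice"},
    {"customer_id": 2, "name": "Bob"},
]
billing_invoices = [
    {"invoice_id": 10, "customer_id": 1, "amount": 40.0},
    {"invoice_id": 11, "customer_id": 2, "amount": 15.0},
    {"invoice_id": 12, "customer_id": 1, "amount": 22.0},
]
device_registry = [
    {"device_id": "d-7", "customer_id": 2},
]


def build_customer_entities() -> dict[int, dict]:
    """Group rows from every source under the customer they belong to."""
    entities = {
        c["customer_id"]: {"customer": c, "invoices": [], "devices": []}
        for c in crm_customers
    }
    for invoice in billing_invoices:
        entities[invoice["customer_id"]]["invoices"].append(invoice)
    for device in device_registry:
        entities[device["customer_id"]]["devices"].append(device)
    return entities


def provision(entity_ids: list[int], entities: dict[int, dict]) -> list[dict]:
    """Select whole entities for a target test environment."""
    return [entities[entity_id] for entity_id in entity_ids]
```

Selecting by entity, as in `provision([1], build_customer_entities())`, moves a customer together with all related invoices and devices, so the subset that reaches the test environment is complete by construction.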

Test Data FAQs

What are examples of test data?

Examples of test data commonly include:

- Valid data, that meets all system requirements and specifications

- Invalid data, that doesn’t meet system requirements or specifications

- Boundary data, that’s on the edge of acceptable boundaries or limits of the system

- Empty data, that’s blank (containing no values)

- Error-prone data, intentionally designed to trigger system errors or exceptions

- Real-world data, that simulates lifelike conditions and scenarios

What are the different types of test data?

Test data can be classified into several different types, such as:

- Positive test data, that’s expected to produce the desired outputs to validate system performance

- Negative test data, that’s used to deliberately introduce defects or issues into the system to produce unexpected or invalid outputs

- Random test data, that’s generated without any specific pattern or requirement

- Equivalence class test data, that falls in the same category (“equivalence class”), and is used to represent the behavior of that class

- Boundary test data, that’s used to test the boundaries of input ranges to verify system behavior at these critical junctions

- Stress test data, that’s used to rate system performance under extreme conditions

- Regression test data, that’s used to see if any changes to the system have introduced new defects or issues
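Boundary and equivalence class test data lend themselves to simple generation. The sketch below assumes an input that accepts integers in an inclusive range; the function names and the offset of 10 used to pick out-of-range representatives are arbitrary choices for illustration.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Values just outside, on, and just inside each end of an inclusive range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def equivalence_representatives(low: int, high: int) -> dict[str, int]:
    """One representative per class: below the range, inside it, above it."""
    return {
        "below": low - 10,           # any value under `low` behaves the same way
        "valid": (low + high) // 2,  # a typical in-range value
        "above": high + 10,          # any value over `high` behaves the same way
    }
```

For a field that accepts 1 to 100, `boundary_values(1, 100)` returns `[0, 1, 2, 99, 100, 101]`, while `equivalence_representatives(1, 100)` returns one value per class, on the assumption that every member of a class triggers the same behavior.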

How do you validate test data?

You can validate your test data in 6 steps:

- Verification, which ensures that the test datasets are accurate, relevant, and valid for the specified testing objectives

- Preparation, which organizes the test data in a manageable structure

- Execution, which provisions test data to the system, based on pre-defined test cases or scenarios

- Observation, which monitors and records system behavior and outputs, as well as any issues that may have come up during the testing process

- Analysis, which reviews the test results to see if the system has passed or failed

- Iteration, which modifies the test data or test cases, as required

What are the basic testing methods?

The testing methods most commonly employed in software testing include:

- Unit testing, which tests separate units of code to ensure they operate correctly in isolation

- Integration testing, which tests the interfaces and interactions between different components to ensure they work together as planned

- System testing, which tests the entire system to make sure it meets all requirements

- Regression testing, which repeats previous tests to check that recent changes haven’t caused new defects

- Performance testing, which monitors system performance under all kinds of conditions to measure its overall stability, scalability, and responsiveness

- Acceptance testing, which tests system compliance with expectations and requirements

- Security testing, which determines system vulnerability against potential breaches or attacks

- Usability testing, which rates how easily users can interact with the system

- Exploratory testing, where testers freely explore the system to reveal any issues that may not have been covered by pre-defined test cases

Why is test data important in QA?

Test data plays a critical role in Quality Assurance (QA) in that it helps:

- Detect faults, allowing them to be fixed before the application is released

- Check functionality, to verify that the system operates according to specification

- Ensure stability (with diverse, representative datasets), to measure performance under a wide range of conditions

- Validate behavior, under normal and extreme conditions, assuring correct functionality

- Reduce risk, of breach, failure, or malfunction, as the system is being deployed

- Build confidence, in the functionality, performance and reliability of the system, prior to release

What is the role of test data?

The purpose of test data is to evaluate system behavior, functionality, and performance. It’s commonly used to:

- Validate whether the system meets its specifications and produces its expected outputs

- Identify issues in the system by triggering unexpected behaviors or errors

- Ensure that different combinations, paths, and scenarios of inputs are tested, to maximize test coverage

- Assess performance by simulating different loads, real-life usage patterns, or stress levels

- Mitigate risks by uncovering potential failures, vulnerabilities, or weaknesses

- Inform stakeholders about the readiness and quality of the software to be released