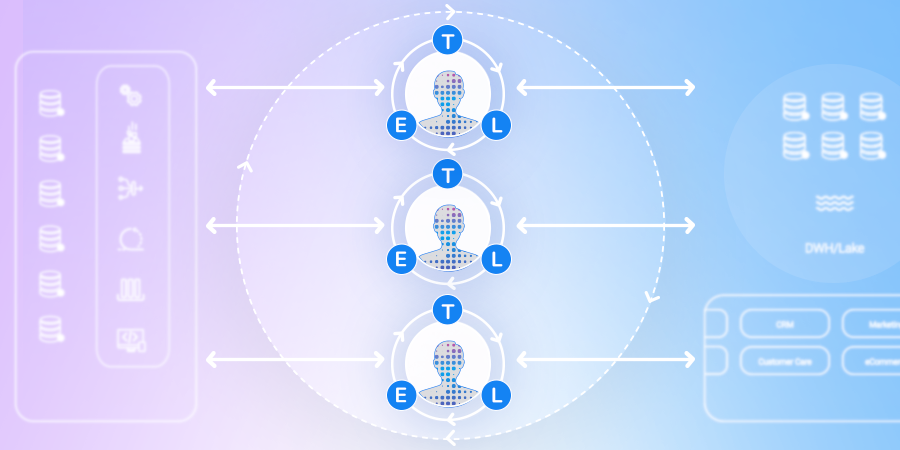

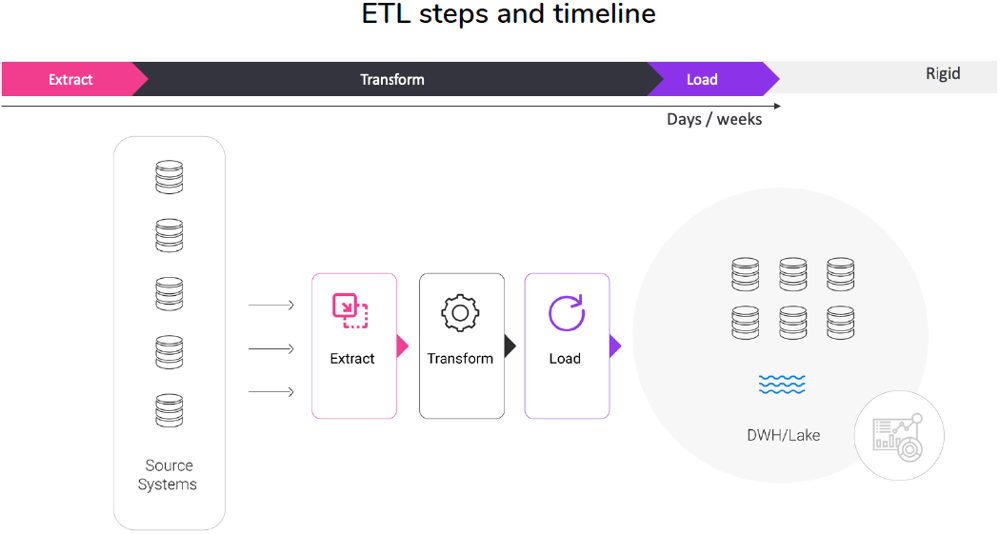

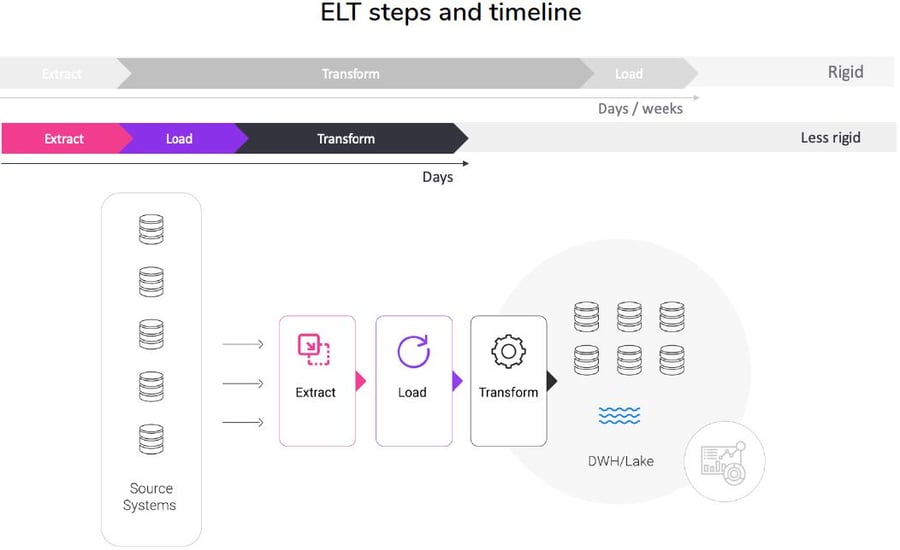

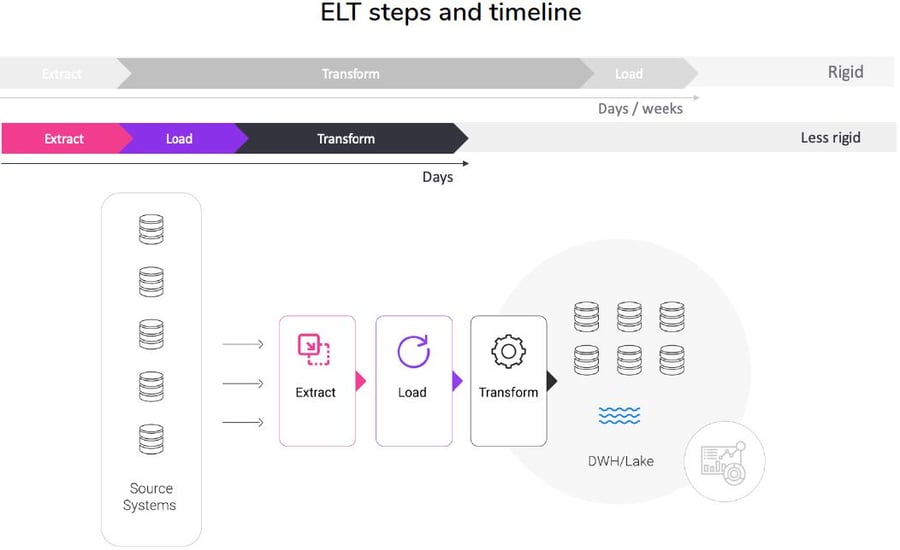

ELT stands for Extract-Load-Transform. Unlike traditional ETL, ELT extracts the data and loads it into the target first, then runs the transformations there, often using proprietary scripting. The target is most commonly a data lake or big data store, such as Teradata, Spark, or Hadoop.

ELT workflow

| Extract | Load | Transform |
| --- | --- | --- |
| Raw data is read and collected from various sources, including message queues, databases, flat files, spreadsheets, data streams, and event streams. | The extracted data is loaded into a data store, whether it is a data lake or warehouse, or non-relational database. | Data transformations are performed in the data lake or warehouse, primarily using scripts. |
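The three stages in the table above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular ELT product works: an in-memory SQLite database stands in for the data lake or warehouse, and the CSV content, table names (`raw_orders`, `customer_totals`), and function names are all assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source: a flat file, represented here as an in-memory CSV string.
SOURCE_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,3.25
3,alice,7.00
"""


def extract(text):
    """Extract: read raw records from the source without changing them."""
    return list(csv.DictReader(io.StringIO(text)))


def load(conn, rows):
    """Load: write the records as-is into a raw table in the target store."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount)", rows
    )


def transform(conn):
    """Transform: run SQL inside the target store to build a cleaned table."""
    conn.execute(
        """
        CREATE TABLE customer_totals AS
        SELECT lower(trim(customer)) AS customer,
               SUM(CAST(amount AS REAL)) AS total
        FROM raw_orders
        GROUP BY lower(trim(customer))
        """
    )


def run_elt():
    # SQLite is only a stand-in for the real target (lake or warehouse).
    conn = sqlite3.connect(":memory:")
    load(conn, extract(SOURCE_CSV))
    transform(conn)
    return conn


conn = run_elt()
totals = conn.execute(
    "SELECT customer, total FROM customer_totals ORDER BY customer"
).fetchall()
print(totals)
```

Note that the cleanup (trimming, lowercasing, casting) happens only in `transform`, after the data has landed. The `raw_orders` table still holds the values exactly as they arrived.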

Pros

ELT has several advantages over ETL, such as:

- Fast extraction and loading: Data is delivered into the target system immediately, with very little processing in-flight.

- Lower upfront development costs: ELT tools are good at moving source data into target systems with minimal user intervention, since user-defined transformations are not required.

- Low maintenance: ELT was designed for use in the cloud, so things like schema changes can be fully automated.

- Greater flexibility: Data analysts no longer have to determine what insights and data types they need in advance, but can perform transformations on the data as needed in the data warehouse or lake.

- Greater trust: All the data, in its raw format, is available for exploration and analysis. No data is lost or mis-transformed along the way.
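The flexibility and trust points can be illustrated with a small sketch. It assumes, purely for illustration, that the raw data sits in a table called `raw_events` in an in-memory SQLite database, and that two different questions come up at different times. Each question becomes a view over the same raw rows, so nothing is reloaded and nothing is overwritten.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Raw data as it was loaded, inconsistencies included (hypothetical sample rows).
conn.execute("CREATE TABLE raw_events (user_id TEXT, event TEXT, ts TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [
        ("u1", "login", "2024-01-01"),
        ("u1", "purchase", "2024-01-01"),
        ("u2", "login", "2024-01-02"),
        ("u2", "LOGIN", "2024-01-03"),
    ],
)

# First question: how many logins per user?
conn.execute(
    """
    CREATE VIEW logins_per_user AS
    SELECT user_id, COUNT(*) AS logins
    FROM raw_events
    WHERE lower(event) = 'login'
    GROUP BY user_id
    """
)

# A later question nobody planned for: how many events per day?
conn.execute(
    """
    CREATE VIEW events_per_day AS
    SELECT ts AS day, COUNT(*) AS events
    FROM raw_events
    GROUP BY ts
    """
)

logins = conn.execute(
    "SELECT user_id, logins FROM logins_per_user ORDER BY user_id"
).fetchall()
per_day = conn.execute(
    "SELECT day, events FROM events_per_day ORDER BY day"
).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_events").fetchone()[0]
print(logins, per_day, raw_count)
```

Both views read from the untouched raw table, which is the property the last two bullets describe.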

While the ability to transform data in the data store answers ETL's volume and scale limitations, it does not address the work of data transformation itself, which can be very costly and time-consuming. Data scientists, who are high-value company resources, need to match, clean, and transform the data, which accounts for 40% of their time, before even getting into any analytics.

Cons

ELT is not without its challenges:

- Costly and time-consuming: Data scientists need to match, clean, and transform the data before applying analytics.

- Compliance risks: ELT tools don’t have built-in support for data anonymization (while ensuring referential integrity) and data governance, thereby introducing data privacy risks.

- Data migration costs and risks: Moving massive amounts of data from on-premises to cloud environments consumes high network bandwidth.

- Big store requirement: ELT tools require a modern data staging technology, such as a data lake, where the data is loaded. Data teams then transform the data into a data warehouse where it can be sliced and diced for reporting and analysis.

- Limited connectivity: ELT tools lack connectors to legacy and on-premises systems, although this is becoming less of an issue as ELT products mature and legacy systems are retired.
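To make the compliance point above more concrete, here is one way masking that preserves referential integrity could look if a team had to add it themselves. It is a sketch under stated assumptions, not a feature of any ELT tool: it uses keyed hashing (HMAC-SHA256) so that the same customer ID always maps to the same token in every table. The key, the `cust_` prefix, the `pseudonymize` helper, and the sample records are all hypothetical. Strictly speaking this is pseudonymization, which is weaker than full anonymization.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager.
SECRET_KEY = b"demo-key-do-not-use-in-production"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Map an identifier to a stable token: same input and key, same output."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "cust_" + digest[:16]


# Two hypothetical source tables that share customer_id as a join key.
customers = [
    {"customer_id": "C-1001", "name": "Alice"},
    {"customer_id": "C-1002", "name": "Bob"},
]
orders = [
    {"order_id": 1, "customer_id": "C-1001"},
    {"order_id": 2, "customer_id": "C-1002"},
    {"order_id": 3, "customer_id": "C-1001"},
]

# Mask before loading: drop the name, replace the ID with its token.
masked_customers = [
    {"customer_id": pseudonymize(c["customer_id"])} for c in customers
]
masked_orders = [
    {**o, "customer_id": pseudonymize(o["customer_id"])} for o in orders
]

# Every masked order still points at a masked customer row.
known_ids = {c["customer_id"] for c in masked_customers}
joins_intact = all(o["customer_id"] in known_ids for o in masked_orders)
print(joins_intact)
```

Because the mapping is deterministic, joins between the masked tables behave as they did on the original IDs, while the original IDs and names never reach the target.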