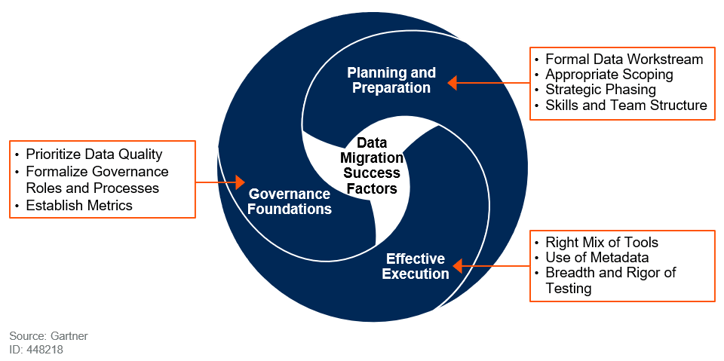

There are three data migration strategies, as detailed below.

Big bang data migration

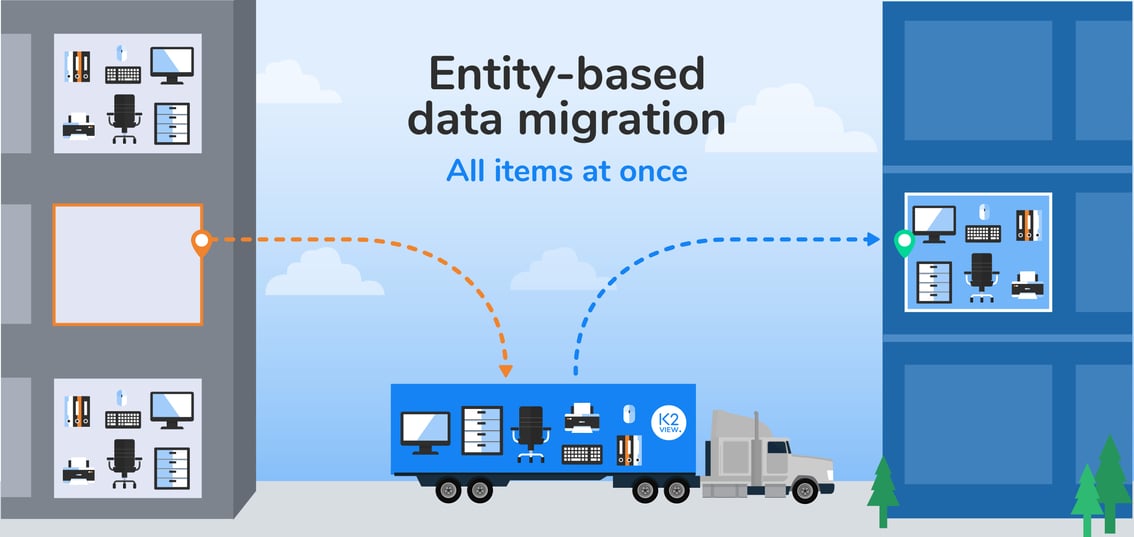

Using a big bang strategy, enterprises transfer all their data at once, in just a few days (like a long weekend). During the migration, all systems are down and all apps are inaccessible to users. This is because the data is in motion, and being transformed to meet the specifications of the new infrastructure. The migration is commonly scheduled for legal holidays, when customers aren’t likely to be using the application.

Theoretically, a big bang allows an enterprise to complete its data migration over the shortest period of time, and avoid working across old and new systems at the same time. It’s touted as being more cost-effective, simpler, and quicker: “bang”, and we’re done.

The disadvantages of this approach include the high risk of a failure that might abort the migration process, guaranteed downtime (that may have to be extended due to unforeseen circumstances), and the risk of affecting the business (e.g., losing customer loyalty when the apps can’t be reached).
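The all-or-nothing nature of a big bang can be sketched in a few lines of Python. This is only an illustration under assumed names: `big_bang_migrate`, `to_new_schema`, and the record fields are hypothetical, and in-memory lists stand in for the old and new systems.

```python
from typing import Callable

Record = dict[str, object]


def big_bang_migrate(
    source: list[Record],
    target: list[Record],
    transform: Callable[[Record], Record],
) -> int:
    """Transform every record in one pass, then commit them all together."""
    # Any exception raised by transform() aborts the run before the commit,
    # so the target is left untouched when a single record fails.
    staged = [transform(record) for record in source]
    target.extend(staged)
    return len(staged)


def to_new_schema(record: Record) -> Record:
    # Hypothetical mapping: the new system stores "full_name" instead of "name".
    return {"id": record["id"], "full_name": record["name"]}


old_system: list[Record] = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]
new_system: list[Record] = []
migrated_count = big_bang_migrate(old_system, new_system, to_new_schema)
```

A single malformed record (for example, one without a `name` field) raises an error and leaves the target empty, which mirrors the risk of an aborted migration described above.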

A big bang strategy is better suited to small companies, and small amounts of data. It’s not recommended for business-critical enterprise applications that must always be accessible.

Phased data migration

A phased strategy, in contrast, migrates the data in predefined stages. For example, the data might be migrated by customer segment (VIP customers, followed by residential customers, followed by enterprise customers) or by geography.

During implementation, the old and new systems operate in parallel until the migration is complete. This may take several months. Operational processes must cope with data residing in two systems, and have the ability to refer to the right system at the right time. Running the systems in parallel reduces the risk of downtime or operational interruptions.
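Referring to the right system at the right time usually comes down to some form of routing. The following Python sketch shows one possible way to do it, assuming migration by customer segment; the `MigrationRouter` class and the segment names are hypothetical and only illustrate the idea.

```python
from enum import Enum


class System(Enum):
    OLD = "old"
    NEW = "new"


# Hypothetical segments, following the customer-segment example in the text.
KNOWN_SEGMENTS = ("vip", "residential", "enterprise")


class MigrationRouter:
    """Tracks which segments have moved and routes requests accordingly."""

    def __init__(self) -> None:
        self._migrated: set[str] = set()

    def mark_migrated(self, segment: str) -> None:
        if segment not in KNOWN_SEGMENTS:
            raise ValueError(f"unknown segment: {segment}")
        self._migrated.add(segment)

    def system_for(self, segment: str) -> System:
        # Segments that have not been migrated yet are still served by the old system.
        return System.NEW if segment in self._migrated else System.OLD


router = MigrationRouter()
router.mark_migrated("vip")  # first phase complete
```

After the first phase, `router.system_for("vip")` returns `System.NEW`, while `router.system_for("enterprise")` still returns `System.OLD`.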

This approach is much less likely to experience any unexpected failures, and is associated with zero downtime.

The disadvantages of this approach are that it is more expensive, time-consuming, and complex due to the need to have two systems running at once.

Phased migrations are better suited to enterprises that have zero tolerance for downtime, and have enough technical expertise to address the challenges that may arise. Examples of key industries required to provide 24/7 service include finance, retail, healthcare, and telecom.

On-demand data migration

An on-demand strategy migrates data, as the name suggests, on demand. This approach is used to move small amounts of data from one point to another, when needed.

The main difficulty with this approach lies in ensuring the data integrity of each “micro” data migration. On-demand data migrations are typically implemented in conjunction with phased data migrations.
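One way to guard the integrity of such a “micro” migration is to compare a checksum of the record before and after it is copied. The Python sketch below assumes that approach; `fetch_on_demand`, the store dictionaries, and the record fields are hypothetical, and a JSON round trip stands in for the actual transfer between systems.

```python
import hashlib
import json


def checksum(record: dict) -> str:
    """Stable SHA-256 digest of a JSON-serializable record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def fetch_on_demand(key: str, old_store: dict, new_store: dict) -> dict:
    """Return the record for key, migrating it the first time it is requested."""
    if key in new_store:
        return new_store[key]
    record = old_store[key]  # raises KeyError if neither store has the key
    # Stand-in for the real transfer between systems.
    copied = json.loads(json.dumps(record))
    # Integrity check: the copy must match the source before it is committed.
    if checksum(copied) != checksum(record):
        raise RuntimeError(f"integrity check failed for {key}")
    new_store[key] = copied
    return copied


old_store = {"c-1": {"name": "Ada", "plan": "gold"}}
new_store: dict = {}
first_read = fetch_on_demand("c-1", old_store, new_store)
```

The first request for `c-1` copies the record into the new store; later requests are served from the new store directly.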