Tokenization replaces sensitive data with unique identification symbols that retain the basic information about the data without compromising its security.

Table of Contents

Why tokenize data

What is tokenization

Tokenization use cases

Encryption vs tokenization

Evaluating tokenization solutions

Data tokenization via business entity

Why tokenize data

If you work with data, you’ve probably heard a lot about data tokenization, in addition to data masking, data anonymization, and synthetic data generation.

For one thing, it’s the go-to data protection method for financial services and healthcare institutions, which face hefty requirements for securing Personally Identifiable Information (PII).

However, as enterprises across all sectors look for ways to fortify their cybersecurity posture and improve compliance, data tokenization tools are becoming more common.

This is because data tokenization provides a scalable, standardized, and safe way for organizations to handle sensitive data while reducing their audit scope. It is a more secure and cost-effective way to protect data than many other methods, and it lets operational and analytical workloads continue without interruption or interference.

As enterprises generate more and more data, and as requirements for protecting consumer privacy and sensitive data get tougher, tokenization software is fast becoming a staple in an enterprise's data security toolkit.

In this article, we’ll take a closer look at tokenization and cover key features to consider before implementing data tokenization solutions.

What is tokenization?

Like data masking, tokenization is a method of data anonymization: it obscures the meaning of sensitive data so that the data can be used in accordance with compliance standards and stays secure in the event of a data breach. It replaces sensitive data with a non-sensitive substitute, called a token, which has no exploitable value and cannot be reversed from the token alone; only the tokenization system, with access to the original values, can map a token back. (Data tokenization vs masking is an entire subject unto itself, covered separately.)

Tokenization replaces sensitive data in databases, data repositories, and internal systems with non-sensitive data elements, such as randomized strings, that have no exploitable meaning. The original sensitive data is often stored in a centralized token vault, outside of the organization’s IT environment.
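To make the mechanics concrete, here’s a minimal sketch of vault-based tokenization in Python. It assumes an in-memory dictionary as the vault and random hex strings as tokens; the `TokenVault` class and the `tok_` prefix are hypothetical names for illustration, not any product’s API. A real vault would be a hardened, separately secured data store.

```python
import secrets


class TokenVault:
    """Minimal in-memory token vault (illustrative sketch, not production code)."""

    def __init__(self) -> None:
        # token -> original sensitive value; a real vault is a hardened, isolated store
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so it has no mathematical relationship to the value.
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # regenerate on the (unlikely) collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_value[token]


vault = TokenVault()
record = {"name": "Jane Doe", "ssn": "123-45-6789"}
record["ssn"] = vault.tokenize(record["ssn"])  # the stored record now holds only a token
print(record["ssn"])                    # a random token: tok_ followed by 16 hex chars
print(vault.detokenize(record["ssn"]))  # 123-45-6789
```

Because the token is generated randomly rather than derived from the value, stolen tokens reveal nothing on their own; the security of the scheme rests on protecting the vault.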

Although tokens themselves have no value, they can retain certain attributes of the original data, such as its format or length, so that the business applications and analytical workloads that depend on the data continue to operate without interruption.
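The following Python sketch shows one simple way a token can preserve the format of a card number: every digit is replaced with a random digit except the last four, and separators stay in place. The function name and the choice to keep the last four digits are assumptions for illustration; a production tokenizer would also store the mapping in a vault and guarantee that tokens are unique.

```python
import secrets


def format_preserving_token(value: str, keep_last: int = 4) -> str:
    """Replace each digit with a random digit, keeping the last `keep_last` digits.

    Length and non-digit characters (spaces, dashes) are preserved, so the token
    still looks like a card number to downstream applications. Sketch only: no
    vault storage, uniqueness check, or Luhn-digit handling.
    """
    digit_positions = [i for i, ch in enumerate(value) if ch.isdigit()]
    kept = set(digit_positions[-keep_last:]) if keep_last > 0 else set()
    return "".join(
        str(secrets.randbelow(10)) if ch.isdigit() and i not in kept else ch
        for i, ch in enumerate(value)
    )


token = format_preserving_token("4111-1111-1111-1111")
print(token)  # same layout with random digits, still ending in 1111
```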

Common examples of data that gets tokenized are Social Security Numbers, bank account numbers, and credit card numbers.

Tokenization use cases

Here’s a brief description of 6 key tokenization use cases.


Reduce compliance scope

Data tokenization software allows you to reduce the scope of data subject to compliance requirements, because systems that store only tokens no longer hold the sensitive data itself. For example, replacing the Primary Account Number (PAN) data stored within an organization’s IT environment with tokens leaves a smaller data footprint, simplifying PCI DSS compliance.

Manage access to data

Tokenization helps you fortify access controls to sensitive data by preventing users without the appropriate privileges from detokenizing it. For example, when sensitive data is stored in a central repository, such as a data lake or data warehouse, tokenization helps ensure that only authorized data consumers can detokenize it.

Fortify supply chain security

Many organizations today work with third-party software, vendors, and service providers that need to work with sensitive data. Tokenization helps organizations minimize the risk of a data breach stemming from external providers by sharing tokens instead of the original values, which keeps sensitive data out of those environments.

Simplify compliance of data warehouses and lakes

Centralized data repositories like data lakes and data warehouses store data from multiple sources in both structured and unstructured formats, which makes it harder to demonstrate data protection controls from a compliance standpoint. When sensitive data must be ingested into the repository, tokenization allows you to keep the original PII out of data lakes and warehouses, which, in turn, reduces the compliance burden.

Allow sensitive data to be used for business analytics

Business intelligence and other kinds of analytical workloads are integral to every business unit, and there is often a need to perform analytics on sensitive data. By tokenizing this data, you can protect it while still allowing other applications and processes to run analytics; when the same value always maps to the same token, records can still be counted, grouped, and joined by token.

Improve overall security posture

Finally, tokenization supports a stronger overall cybersecurity posture by protecting sensitive data from malicious attackers, as well as from accidental insider incidents, which accounted for 60% of all data breaches in 2020. By replacing PII with randomized, non-exploitable data elements, you can maintain its full business utility while minimizing risk.
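To illustrate the use case of managing access to data, here’s a minimal Python sketch in which anyone can hold tokens but only listed roles may detokenize them. The `GuardedVault` class and the role names are hypothetical; a real deployment would check identities against the organization’s identity and access management system rather than a hard-coded set.

```python
import secrets


class GuardedVault:
    """Token vault that lets only authorized roles detokenize (illustrative sketch)."""

    def __init__(self, authorized_roles: set[str]) -> None:
        self._authorized_roles = set(authorized_roles)
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = secrets.token_urlsafe(12)
        while token in self._token_to_value:  # regenerate on the (unlikely) collision
            token = secrets.token_urlsafe(12)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str, role: str) -> str:
        # Holding a token is harmless; turning it back into data requires privileges.
        if role not in self._authorized_roles:
            raise PermissionError(f"role {role!r} may not detokenize")
        return self._token_to_value[token]


vault = GuardedVault(authorized_roles={"fraud_analyst"})
token = vault.tokenize("4111111111111111")
print(vault.detokenize(token, role="fraud_analyst"))  # 4111111111111111
try:
    vault.detokenize(token, role="bi_dashboard")
except PermissionError as err:
    print(err)  # role 'bi_dashboard' may not detokenize
```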
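For the data lake and warehouse use case, tokenization typically happens during ingestion, so that only tokenized copies of the records ever land in the repository. The sketch below assumes dictionary records, a hypothetical `PII_FIELDS` tuple, and a plain dict standing in for the separate token vault; `tokenize_for_lake` is an illustrative name, not a real library function.

```python
import secrets

# Hypothetical list of fields treated as PII in this example.
PII_FIELDS = ("email", "ssn")


def tokenize_for_lake(records, pii_fields, vault):
    """Return copies of `records` with PII fields replaced by random tokens.

    `vault` is a plain dict standing in for a separate token vault; only the
    returned, tokenized rows would be loaded into the data lake or warehouse.
    """
    tokenized_rows = []
    for record in records:
        row = dict(record)  # copy so the source record is left untouched
        for field in pii_fields:
            if field in row:
                token = secrets.token_hex(8)
                while token in vault:  # regenerate on the (unlikely) collision
                    token = secrets.token_hex(8)
                vault[token] = row[field]
                row[field] = token
        tokenized_rows.append(row)
    return tokenized_rows


vault = {}
source = [{"id": 1, "email": "jane@example.com", "ssn": "123-45-6789", "plan": "gold"}]
lake_rows = tokenize_for_lake(source, PII_FIELDS, vault)
print(lake_rows[0]["plan"])          # gold (non-sensitive fields pass through)
print(vault[lake_rows[0]["email"]])  # jane@example.com
```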
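Running analytics on tokenized data generally relies on consistent (multi-use) tokens: if the same value always produces the same token, analysts can still count, group, and join records. The sketch below is a minimal illustration under that assumption; `ConsistentTokenizer` and the sample purchases are hypothetical, and detokenization is omitted for brevity.

```python
import secrets
from collections import Counter


class ConsistentTokenizer:
    """Multi-use tokenizer: the same input always yields the same token (sketch)."""

    def __init__(self) -> None:
        self._value_to_token: dict[str, str] = {}
        self._issued: set[str] = set()

    def tokenize(self, value: str) -> str:
        if value not in self._value_to_token:
            token = secrets.token_hex(6)
            while token in self._issued:  # keep tokens unique across values
                token = secrets.token_hex(6)
            self._issued.add(token)
            self._value_to_token[value] = token
        return self._value_to_token[value]


tokenizer = ConsistentTokenizer()
purchases = [("jane@example.com", 40), ("raj@example.com", 15), ("jane@example.com", 25)]
tokenized = [(tokenizer.tokenize(email), amount) for email, amount in purchases]

# Analysts can count orders per customer without ever seeing an email address.
orders_per_customer = Counter(token for token, _ in tokenized)
print(sorted(orders_per_customer.values()))  # [1, 2]
```

The trade-off is that consistent tokens let an observer see which records share a value, which is why some use cases call for single-use tokens instead.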

Encryption vs tokenization

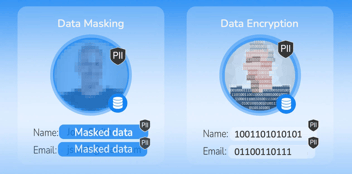

Encryption is a method commonly used for protecting data. It uses an algorithm to transform sensitive data into an unreadable form called ciphertext. Decrypting the information and retrieving the original data requires both the algorithm and the encryption key.

Malicious actors can use a variety of techniques to acquire the key, such as social engineering or brute-force computation. As a result, the level of protection depends on the strength of the algorithm, the length of the key, and how securely the key is managed. However, encryption is reversible by design: anyone who obtains the key, or breaks a weak algorithm, can recover the original data.

Another pitfall of encryption is that ciphertext rarely retains the same format as the original data (unless format-preserving encryption is used), which could limit organizations’ ability to perform analytics on it or require analytics apps to adapt to the new format.

In the tokenization vs encryption comparison, the key difference is that a token can’t be mathematically reversed: there is no key that converts it back into the original value. Tokenization substitutes the data with a meaningless placeholder and keeps the original data in a separate location, the token vault. That means that if a data breach occurs, attackers who get hold of the tokens still can’t access your data unless they also compromise the vault.

Evaluating tokenization solutions

If you’re ready to tokenize, consider these 4 questions before you start evaluating solutions.


What are your primary business requirements?

The most significant consideration, and the one to start with, is defining the business problem that needs to be solved. After all, the ROI and overall success of a solution depend on its ability to fulfill the business need for which it was purchased. The most common needs are improving cybersecurity posture and making it easier to comply with data privacy regulations and industry standards, such as the Payment Card Industry Data Security Standard (PCI DSS). Beyond payment card data, vendors vary in their support for other types of PII, such as Social Security Numbers or medical data.

Where is your sensitive data?

The next step is identifying which systems, platforms, apps, and databases store sensitive data that should be replaced with tokens. It’s also important to understand how this data flows, as data in transit is also vulnerable.

What are your system/token requirements?

What are the specific requirements for integrating a tokenization solution with your databases and apps? Consider what type of database you use, what language your apps are written in, how distributed your apps and data centers are, and how you authenticate users. From there, you can determine whether you need single-use tokens (a new token each time a value is tokenized, for example per transaction) or multi-use tokens (the same value always maps to the same token), and whether tokens must preserve the original format to meet business requirements.

Should you custom-build a solution in-house or purchase a commercial product?

After you know your business needs and your requirements for apps, integrations, and tokens, you’ll have a clearer understanding of which vendors can offer you value. Although organizations can sometimes build their own tokenization solutions internally, tackling this in-house usually puts more strain on data engineers and data scientists, who are already stretched thin. Most of the time, the benefits of purchasing a customizable solution, receiving tailored customer support, and relieving data teams of this task outweigh the costs.
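The difference between single-use and multi-use tokens, raised in the system and token requirements question, can be sketched in a few lines of Python. This is one simple interpretation, assuming an in-memory vault: single-use mode issues a fresh token on every call, while multi-use mode reuses the token already issued for a value. The `Tokenizer` class and its `multi_use` flag are hypothetical.

```python
import secrets


class Tokenizer:
    """Sketch contrasting single-use and multi-use tokens.

    multi_use=True: the same value always maps to the same token (useful for joins
    and analytics). multi_use=False: every call issues a fresh token, so tokens
    cannot be correlated across records or transactions.
    """

    def __init__(self, multi_use: bool) -> None:
        self.multi_use = multi_use
        self._vault: dict[str, str] = {}  # token -> original value
        self._reuse: dict[str, str] = {}  # value -> token (multi-use mode only)

    def _new_token(self) -> str:
        token = secrets.token_hex(8)
        while token in self._vault:  # guarantee tokens are unique
            token = secrets.token_hex(8)
        return token

    def tokenize(self, value: str) -> str:
        if self.multi_use and value in self._reuse:
            return self._reuse[value]
        token = self._new_token()
        self._vault[token] = value
        if self.multi_use:
            self._reuse[value] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._vault[token]


pan = "4111111111111111"
single = Tokenizer(multi_use=False)
multi = Tokenizer(multi_use=True)
print(single.tokenize(pan) == single.tokenize(pan))  # False
print(multi.tokenize(pan) == multi.tokenize(pan))    # True
```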

Data tokenization via business entity

The ideal data tokenization solution relies on a business entity approach, where a business entity could be a customer, vendor, credit card, store, payment, or claim. This approach drives operational and analytical workloads, and may be deployed in a data mesh architecture, data fabric architecture, or data hub architecture.

Unlike conventional data tokenization solutions, which store all sensitive business data in one centralized data vault, a business entity solution distributes each entity’s sensitive data into its own encrypted and tokenized Micro-Database™ (or micro-vault). This dramatically reduces the risk of a mass breach, since compromising one micro-vault exposes only a single entity’s data, while making compliance easier to manage.
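The idea of one vault per business entity can be sketched as a set of small, independent token vaults keyed by entity ID. This is a hypothetical illustration of the partitioning concept only, not the Micro-Database™ implementation: `EntityVaults` and the customer IDs are made up, and the per-entity encryption a real system would apply is omitted.

```python
import secrets


class EntityVaults:
    """One small token vault per business entity, keyed by entity ID (sketch)."""

    def __init__(self) -> None:
        # entity_id -> {token -> original value}. Assumption: a real system would
        # also encrypt each entity's partition separately, which is omitted here.
        self._vaults: dict[str, dict[str, str]] = {}

    def tokenize(self, entity_id: str, value: str) -> str:
        vault = self._vaults.setdefault(entity_id, {})
        token = secrets.token_hex(8)
        while token in vault:  # regenerate on the (unlikely) collision
            token = secrets.token_hex(8)
        vault[token] = value
        return token

    def detokenize(self, entity_id: str, token: str) -> str:
        # A token resolves only inside its own entity's vault; otherwise KeyError.
        return self._vaults.get(entity_id, {})[token]

    def vault_size(self, entity_id: str) -> int:
        return len(self._vaults.get(entity_id, {}))


vaults = EntityVaults()
t1 = vaults.tokenize("customer-42", "jane@example.com")
t2 = vaults.tokenize("customer-77", "raj@example.com")
print(vaults.detokenize("customer-42", t1))  # jane@example.com
print(vaults.vault_size("customer-42"))      # 1
```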

Entity-based data masking technology tokenizes data in real time (for operational use cases), or in batch (for analytical workloads). It's format-preserving and retains data integrity across systems to enable continued business operations.

Highly flexible and configurable, business entities – operating in a data mesh, data fabric, or customer data platform / hub – represent the most advanced approach to data tokenization today.