Data tokenization substitutes sensitive data with a non-sensitive placeholder, or token, that maps back to the original data via a secure tokenization platform, to protect the sensitive information.

01

Tokenization of Data: A Business Imperative

IT teams are involved with the tokenization of data because the number and cost of data breaches are rising at an alarming rate.

There were 1,300 publicly reported data breaches in the United States in 2021, up 17% from the 1,100 breaches reported in 2020. A recent IBM report stated that the average cost of a data breach rose 10% year over year, from $3.9 million in 2020 to $4.2 million in 2021. Working from home and digital transformation, due to the COVID-19 pandemic, drove the total cost of a data breach up by another $1 million, on average.

As enterprises make their way through legacy application modernization, and as remote work becomes an everyday fixture, the risk and impact of a data breach are steadily rising.

Concurrently, the regulatory environment surrounding data privacy and protection is becoming stricter than ever. Non-compliance with data protection laws could lead to high penalties, litigation, and brand damage.

Luckily, today you can protect your sensitive data and prevent data breaches relatively easily – and data tokenization may be your best bet.

02

Cyberattacks on the Rise

Of all of the things that keep business leaders up at night, the threat of cyberattacks is at the top of the list. According to PwC’s latest CEO survey, almost half of the 4,446 CEOs, surveyed in 89 countries, named cyber risk as their top concern for the next 12 months.

The worry is justified. The scale, cost, and impact of data breaches are rising at an accelerated pace. In 2021 alone, there were more than 1,800 significant data breaches, up almost 70% from 2020. Among the most notable were the breaches at Facebook, JBS, Kaseya, and Colonial Pipeline, which together exposed the private information of hundreds of millions of people and, in some cases, brought business operations to a grinding halt.

With data privacy a top priority, enterprises must find ways to protect sensitive data while granting data consumers authorization to access the data they need to execute operational and analytical workloads. Today, data tokenization is heralded as one of the most secure and cost-effective ways to protect sensitive data.

In this ebook, we’ll define data tokenization, explain how it supports compliance with data privacy laws, and examine the advantages of entity-based data tokenization technology.

03

What is Data Tokenization?

Like data masking, data tokenization is a method of data anonymization that obscures the meaning of sensitive data in order to render it useless to potential attackers.

Unlike data masking, data tokenization substitutes sensitive data with a non-sensitive equivalent, or “token”, for use in databases or internal systems. The token is a reference that maps back to the original sensitive data through a tokenization system.

A token consists of a randomized data string that doesn’t have any exploitable value or meaning. Although tokens themselves don’t have any value, they retain certain elements of the original data, such as format or length, to support seamless business operations. Identity and financial information, such as Social Security numbers, passport numbers, bank account numbers, and credit card numbers, is commonly tokenized.

The data tokenization process, a type of "pseudonymization", is designed to be reversible. For example, a company can present one version of de-identified data to an unauthorized user population, and support a controlled re-identification process through which certain users can still have access to the real data.
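The mapping between a token and its original value can be illustrated with a minimal Python sketch. It assumes a toy in-memory vault; the `TokenVault` class, its method names, and the `tok_` prefix are hypothetical and not taken from any specific tokenization platform, which would also persist and encrypt the mapping.

```python
import secrets


class TokenVault:
    """Toy in-memory vault (hypothetical); a real platform would persist and encrypt this."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it carries no information about the value.
        token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Re-identification is only possible through the vault lookup.
        return self._token_to_value[token]


vault = TokenVault()
ssn_token = vault.tokenize("123-45-6789")
print(ssn_token)                    # e.g. tok_9f2c... (random on every run)
print(vault.detokenize(ssn_token))  # 123-45-6789
```

Downstream systems would store only `ssn_token`; the real value stays inside the vault and is returned only by a controlled `detokenize` call.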

04

The Need for Data Tokenization

Data tokenization allows enterprises to protect sensitive data while maintaining its full business utility. With tokenization, data consumers can freely access data without exposing the underlying sensitive values.

Data tokenization is essential for today’s businesses because the majority of data breaches are the result of insider attacks, whether intentional or accidental. Consider these enterprise statistics:

- 50% believe that detecting insider attacks has become more difficult since migrating to the cloud

- 60% think that privileged IT users pose the biggest insider security risk to organizations

- 70% feel vulnerable to insider threats, and confirm greater frequency of attacks

What Data Needs to be Tokenized?

When we talk about sensitive data, we're specifically referring to Protected Health Information (PHI) and Personally Identifiable Information (PII).

Medical records, drug prescriptions, bank accounts, credit card numbers, driver’s license numbers, Social Security numbers, and more, fall into this category.

Companies in the healthcare and financial services industries are currently the biggest users of data tokenization. However, businesses spanning all sectors are starting to appreciate the value of this alternative to data masking. As data privacy regulations become more stringent, and as the punishments for noncompliance become more commonplace, prudent organizations are actively looking for advanced data protection solutions that can also maintain full business utility.

05

To Tokenize or Not to Tokenize

If you’re ready to implement a data tokenization solution, consider these 4 questions before you start evaluating solutions.

- What are your primary business requirements?

  The most significant consideration, and the one to start with, is defining the business problem that needs to be resolved. After all, the ROI and overall success of a solution are based on its ability to fulfill the business need for which it was purchased. The most common needs are improving cybersecurity posture and making it easier to comply with data privacy regulations, such as the Payment Card Industry Data Security Standard (PCI DSS). Vendors vary in their offerings for other types of PII, such as Social Security numbers or medical data.

- Where is your sensitive data?

  The next step is identifying which systems, platforms, apps, and databases store sensitive data that should be replaced with tokens. It’s also important to understand how this data flows, as data in transit is also vulnerable.

- What are your system/token requirements?

  What are the specific requirements for integrating a data tokenization solution with your databases and apps? Consider what type of database you use, what language your apps are written in, the degree of distribution of apps and data centers, and how you authenticate users. From there, you can determine whether single-use or multi-use tokens are necessary, and whether tokens can be formatted to meet required business use.

- Should you custom-build a solution in-house or purchase a commercial product?

  After you know your business needs and requirements for apps, integrations, and tokens, you’ll have a clearer understanding of which vendors can offer you value.

  Although organizations can, at times, build their own data tokenization solutions internally, tackling this need in-house usually adds greater strain to data engineers and data scientists, who are already stretched thin. Most of the time, the benefits of anonymizing data outweigh the costs.
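The single-use versus multi-use distinction raised under system/token requirements can be sketched in a few lines of Python. This is an illustration under assumptions, not a vendor API: the `TokenService` class and its method names are hypothetical, and storage is a plain in-memory dictionary.

```python
import secrets


class TokenService:
    """Hypothetical service issuing both multi-use and single-use tokens."""

    def __init__(self):
        self._multi_use = {}  # value -> token, stable across calls
        self._lookup = {}     # token -> (value, is_single_use)

    def multi_use_token(self, value: str) -> str:
        # The same value always yields the same token, so it can serve as a stable reference.
        if value not in self._multi_use:
            token = secrets.token_hex(8)
            self._multi_use[value] = token
            self._lookup[token] = (value, False)
        return self._multi_use[value]

    def single_use_token(self, value: str) -> str:
        # A fresh token is issued on every call.
        token = secrets.token_hex(8)
        self._lookup[token] = (value, True)
        return token

    def redeem(self, token: str) -> str:
        value, is_single_use = self._lookup[token]
        if is_single_use:
            # A single-use token becomes invalid after its first redemption.
            del self._lookup[token]
        return value


service = TokenService()
card = "4111111111111111"
stable_a = service.multi_use_token(card)
stable_b = service.multi_use_token(card)
one_time = service.single_use_token(card)
first = service.redeem(one_time)
print(stable_a == stable_b)  # True
print(first == card)         # True
```

A multi-use token suits recurring references, such as a stored card on file, while a single-use token suits a one-off transaction; redeeming `one_time` a second time raises `KeyError` in this sketch.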

06

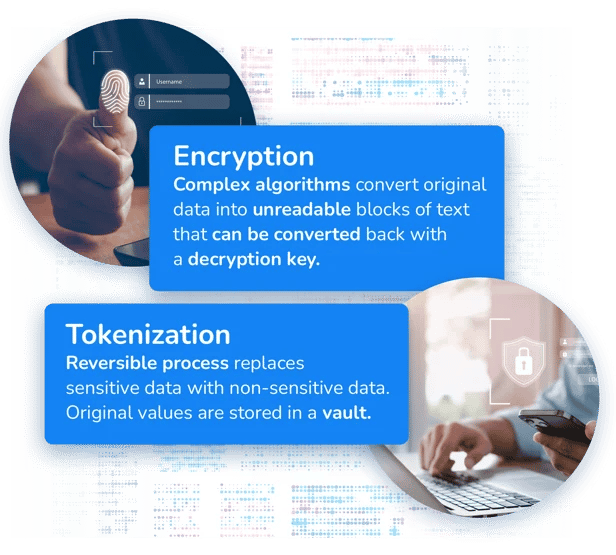

Encryption vs Data Tokenization

Encryption is another common method of data obfuscation. During the encryption process, sensitive data is mathematically transformed into ciphertext. However, the ciphertext is still derived from the original data, which means it can be decrypted with the appropriate key. To obtain the key, attackers use a variety of techniques, such as social engineering or brute-force computation.

The ability to reverse encrypted data is its biggest weakness. The level of protection depends on the strength of the encryption algorithm and on how well the key is protected. Anyone who obtains the key can recover the original data, whereas a token is a randomized string with no mathematical relationship to the value it replaces.

07

4 Top Benefits of Data Tokenization

Tokenizing sensitive data offers several key benefits, such as:

- Reduced risk

  Tokens do not reveal the sensitive information in the original value, which limits the damage of a security breach.

- Reduced encryption cost

  Only the data within the tokenization system needs encryption, eliminating the need to encrypt all other databases.

- Reduced privacy efforts

  Data tokenization minimizes the number of systems that manage sensitive data, reducing the effort required for privacy compliance.

- Business continuity

  Tokens can be “format preserving” to ensure that existing systems continue to function normally.
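To make the “format preserving” idea concrete, here is a minimal Python sketch that generates a random token with the same length, separators, and character classes as the original value. The function name and the `keep_last` parameter are assumptions made for illustration. This is random substitution, not a standardized format-preserving encryption scheme, and the token-to-value mapping would still need to be stored by the tokenization system.

```python
import secrets
import string


def format_preserving_token(value: str, keep_last: int = 4) -> str:
    """Replace letters and digits with random ones of the same class; keep separators."""
    # Positions of the characters that are candidates for randomization.
    replaceable = [i for i, ch in enumerate(value) if ch.isalnum()]
    # Leave the last `keep_last` alphanumeric characters visible (hypothetical option).
    keep = set(replaceable[-keep_last:]) if keep_last > 0 else set()
    out = []
    for i, ch in enumerate(value):
        if i in keep or not ch.isalnum():
            out.append(ch)  # separators and kept characters pass through unchanged
        elif ch.isdigit():
            out.append(secrets.choice(string.digits))
        elif ch.isupper():
            out.append(secrets.choice(string.ascii_uppercase))
        else:
            out.append(secrets.choice(string.ascii_lowercase))
    return "".join(out)


card_token = format_preserving_token("4111-1111-1111-1234")
print(card_token)  # e.g. 8302-5947-0618-1234 (random digits, same layout)
```

Because the token keeps the original layout, a field validator or report that expects a 19-character card number with dashes would continue to work on tokenized data.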

08

Data Tokenization Use Cases

The following are 6 key use cases for data tokenization:

- Compliance scope reduction

  With data tokenization, you can reduce the scope of data compliance requirements because tokens replace the sensitive data in most of your systems. For instance, exchanging a Primary Account Number (PAN) with tokenized data results in a smaller data footprint, thus simplifying PCI DSS compliance.

- Data access management

  Data tokenization gives you more control over data access management by preventing those without appropriate permissions from de-tokenizing sensitive data. For instance, when data is stored in a data lake or warehouse, tokenization ensures that only authorized data consumers have access to sensitive data.

- Supply chain security

  Today, many enterprises work with third-party vendors, software, and service providers requiring access to sensitive data. Data tokenization minimizes the risk of a data breach originating externally by keeping sensitive data away from such environments.

- Data lake/warehouse compliance

  Big data repositories, such as data lakes and data warehouses, persist data in structured and unstructured formats. From a compliance perspective, this flexibility makes data protection controls more challenging. When sensitive data is ingested into a data lake, data tokenization hides the original PII, thus reducing compliance issues.

- Business analytics

  Analytical workloads, such as Business Intelligence (BI), are common to every business domain, meaning that the need to perform analytics on sensitive data may arise. When the data is tokenized, you can permit other applications and processes to run analytics, knowing that the sensitive data is protected.

- Avoid security breaches

  Data tokenization enhances cybersecurity by protecting sensitive data from would-be attackers (including insiders, who were responsible for 60% of the total number of data breaches in 2020). By exchanging PII with non-exploitable, randomized elements, you minimize risk while maintaining the data's business utility.
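The data access management use case above can be sketched as a permission check in front of de-tokenization. The following Python example is a simplified illustration; the `AccessControlledVault` class and the role names are hypothetical, and a real deployment would rely on the enterprise's own authentication and authorization systems.

```python
import secrets


class AccessControlledVault:
    """Hypothetical vault that only lets allow-listed roles de-tokenize."""

    def __init__(self, authorized_roles):
        self._authorized_roles = set(authorized_roles)
        self._store = {}

    def tokenize(self, value: str) -> str:
        token = secrets.token_hex(8)
        self._store[token] = value
        return token

    def detokenize(self, token: str, role: str) -> str:
        # Unauthorized roles never see the real value; they keep working with tokens.
        if role not in self._authorized_roles:
            raise PermissionError(f"role {role!r} may not detokenize")
        return self._store[token]


acl_vault = AccessControlledVault(authorized_roles={"fraud_analyst"})
email_token = acl_vault.tokenize("jane.doe@example.com")

print(acl_vault.detokenize(email_token, role="fraud_analyst"))  # jane.doe@example.com
try:
    acl_vault.detokenize(email_token, role="marketing")
except PermissionError as err:
    print(err)  # role 'marketing' may not detokenize
```

Analysts without the authorized role can still count, group, and join on `email_token` values in a data lake or warehouse, because the token stands in for the real value everywhere outside the vault.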

09

Conventional Data Tokenization has its Risks

Despite its many benefits, data tokenization also has its limitations. The most significant is the risk associated with storing original sensitive data in one centralized token vault. Storing all customer data in one place leads to:

- Bottlenecks when scaling up data

- Risks of a mass breach

- Difficulties ensuring referential and format integrity of tokens across systems

The way to reap the benefits of data tokenization, while avoiding its risks, is by adopting a decentralized system, such as a data mesh.
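The referential integrity concern listed above can be illustrated with deterministic tokens: if two systems derive tokens from the same value with the same key, their records can still be joined without either system holding the real value. The Python sketch below uses a keyed hash (HMAC-SHA256) from the standard library. The key, function name, and record layout are assumptions for illustration, and a keyed hash is not reversible by itself, so de-tokenization would still require a lookup held by the tokenization system.

```python
import hashlib
import hmac


def deterministic_token(value: str, key: bytes, length: int = 16) -> str:
    """Keyed hash: the same value and key always produce the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


# Hypothetical demo key; a real key would live in a secrets manager.
shared_key = b"demo-key-do-not-use-in-production"

crm_rows = [{"customer": deterministic_token("123-45-6789", shared_key), "plan": "gold"}]
billing_rows = [{"customer": deterministic_token("123-45-6789", shared_key), "balance": 42.0}]

# The two systems can be joined on the token without either one storing the SSN.
joined = [
    {**crm, **bill}
    for crm in crm_rows
    for bill in billing_rows
    if crm["customer"] == bill["customer"]
]
print(joined)
```

The trade-off is that deterministic tokens reveal when two records refer to the same value, so the key must be protected as carefully as the data itself.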

10

Better Compliance through Data Tokenization

In addition to actually protecting sensitive data from being exposed, data tokenization also helps enterprises comply with an increasingly stringent regulatory environment. One of the most pertinent standards that tokenization supports is the Payment Card Industry Data Security Standard (PCI DSS).

Any business that accepts, transmits, or stores credit card information is required to be PCI-compliant, and adhere to PCI DSS to protect against fraud and data breaches. Failure to comply with this standard could lead to devastating financial consequences, such as huge penalties and fines, plus additional costs for legal fees, settlements, and judgements. It can also lead to irrevocable brand damage or even going out of business.

Tokenization supports PCI DSS compliance by reducing the amount of PAN (Primary Account Number) data stored in an enterprise’s databases. Instead of persisting this sensitive data, the organization need only work with tokens. With a smaller data footprint, businesses have fewer requirements to comply with, reducing compliance risk, and speeding up audits.

11

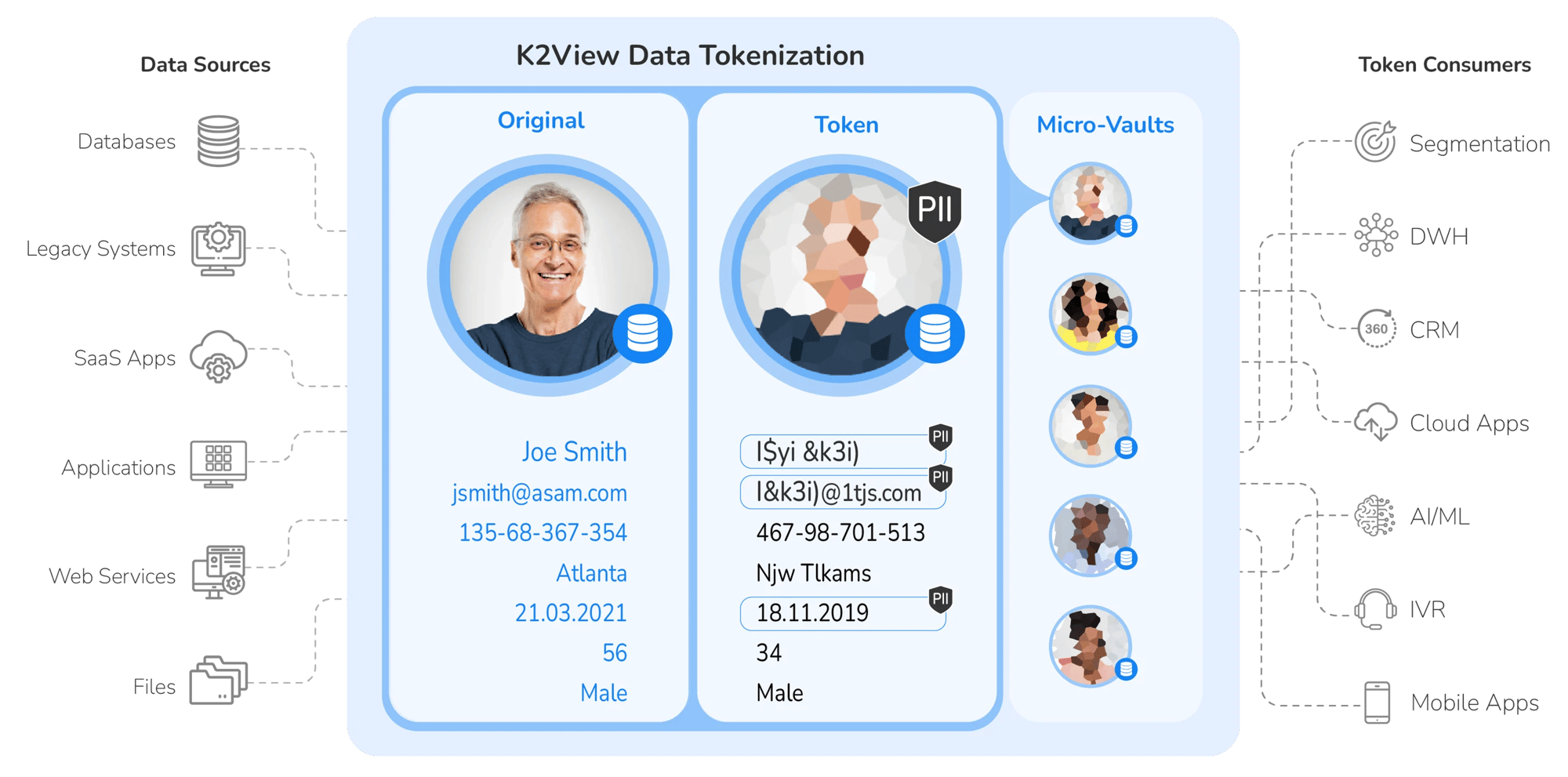

A Better Approach to Data Tokenization with Business Entities

A business entity approach eliminates the need for a single centralized vault. A business entity delivers a “ready-to-use,” complete set of data on a specific customer, credit card, store, payment, or claim, which can be used for both operational and analytical workloads.

By organizing and securing the data that is integrated by a data product for a specific business entity in its own individually encrypted “micro-database” (as opposed to storing all sensitive data in one location), you can distribute, and therefore significantly reduce, the risk of a breach.

In this way, rather than having one vault for all your customer data (let’s say, 5 million customers), you will have 5 million vaults, one for each individual customer!

When every instance of a data product manages its data in its own individually encrypted and tokenized micro-database, the result is maximum security of all sensitive data.

In the context of data tokenization, data products:

- Ingest fresh data from source systems continually

- Identify, unify, and transform data into a self-contained micro-database, without impacting underlying systems

- Tokenize data in real time, or in batch mode

- Secure each micro-database with its own encryption key and access controls

- Preserve format and maintain data consistency based on hashed values

- Provide tokenization and detokenization APIs

- Provision tokens in milliseconds

- Ensure ACID (Atomicity, Consistency, Isolation, Durability) compliance for token management

When the data for each business entity is managed in its own secure vault, you get the best of all worlds: enhanced protection of sensitive data, total compliance with customer data privacy regulations, and optimal functionality from a business perspective.
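The idea of one vault per business entity can be sketched as follows. This Python example is only a conceptual illustration: the `EntityVault` and `EntityVaultRegistry` classes are hypothetical, tokens are derived with a per-entity HMAC key as a stand-in for the "hashed values" mentioned above, and encryption of the stored values is omitted because the standard library does not include an authenticated cipher.

```python
import hashlib
import hmac
import secrets


class EntityVault:
    """Holds the tokens for ONE business entity, for example one customer."""

    def __init__(self):
        self._key = secrets.token_bytes(32)  # key unique to this entity
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        # Keyed hash: consistent within this entity, different across entities.
        token = hmac.new(self._key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]


class EntityVaultRegistry:
    """Maps each entity ID to its own vault instead of one central vault."""

    def __init__(self):
        self._vaults = {}

    def vault_for(self, entity_id: str) -> EntityVault:
        # Create the entity's vault on first use, then reuse it.
        if entity_id not in self._vaults:
            self._vaults[entity_id] = EntityVault()
        return self._vaults[entity_id]


registry = EntityVaultRegistry()
alice = registry.vault_for("customer-1")
bob = registry.vault_for("customer-2")
alice_token = alice.tokenize("4111111111111111")
bob_token = bob.tokenize("4111111111111111")

print(alice.detokenize(alice_token))  # 4111111111111111
print(alice_token == bob_token)       # False (each vault has its own key)
```

In this sketch, compromising one entity's key or vault exposes only that entity's data, which is the risk-distribution argument made for micro-databases.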

12

Summary

Rapidly evolving data privacy and security laws are making data tokenization a top priority for enterprise IT.

This ebook examined the definitions of, and the need for, data tokenization, including benefits, use cases, and risks. It concluded by discussing a business entity approach to data tokenization, a superior alternative to the current standalone or homegrown solutions.