01

How synthetic data generation accelerates innovation

Synthetic data can be described as fake data, generated by computer systems but based on real data.

Enterprises create artificial data to test software under development and at scale, and to train Machine Learning (ML) models.

Synthetic data comes in 2 main types:

- Structured, tabular data

- Unstructured, image and video data

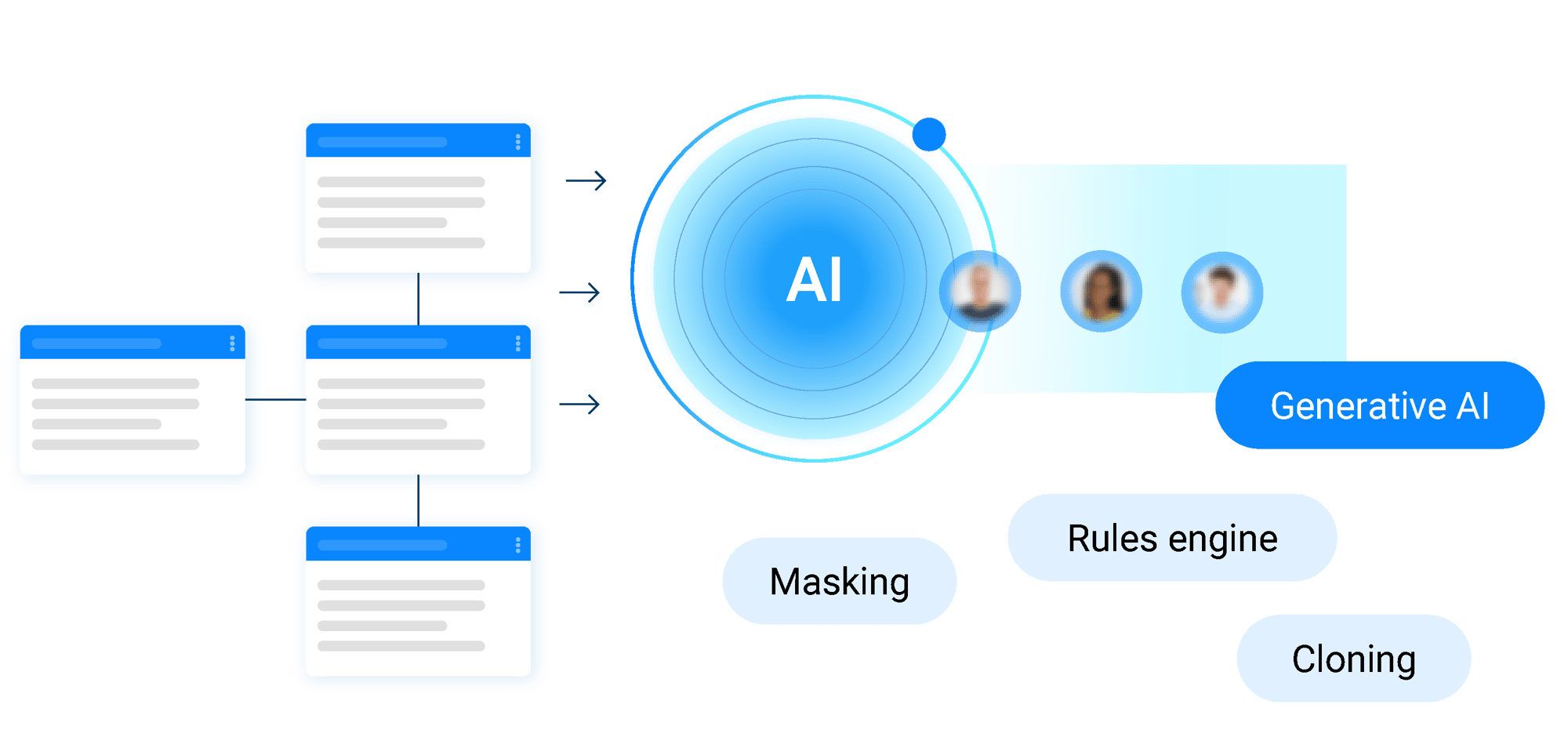

This paper focuses on structured, tabular data and the 4 methods used to synthesize it:

- Generative AI creates realistic, synthetic data via Machine Learning (ML) algorithms.

- A rules engine provisions data using user-defined business policies.

- Entity cloning extracts business entity data, masks it, and then replicates it.

- Data masking anonymizes PII and sensitive data to generate new, compliant data.

This guide also covers the end-to-end synthetic data management processes and the tools needed to support operational and analytical workloads – with capabilities like multi-source data extraction, data subsetting, data masking, data versioning, rollback, and more.

02

What is synthetic data generation?

Synthetic data generation is the process of creating artificial data that mimics the statistical patterns and properties of real-life data using algorithms, models, and other techniques.

Even though it’s usually based on real data, fake data often contains no actual values from the original dataset.

Unlike real data, which may contain sensitive or Personally Identifiable Information (PII), fake data helps protect privacy while still enabling data analysis, research, and software testing.

The 4 key synthetic data generation techniques are listed below:

- Generative AI – modeled using Generative Pre-trained Transformers (GPT), Generative Adversarial Networks (GANs), or Variational Auto-Encoders (VAEs) – learns the underlying distribution of real data to generate similarly distributed synthetic data.

- A rules engine creates artificial data via user-defined business rules. Intelligence can be added to the generated data by referencing the relationships between the data elements, to ensure the relational integrity of the generated data.

- Entity cloning extracts data from the source systems of a single business entity (e.g., customer) and masks it for compliance. It then clones the entity, generating different identifiers for each clone to ensure uniqueness.

- Data masking replaces PII with fictitious, yet structurally consistent, values. The objective of data masking is to ensure that sensitive data can’t be linked to individuals, while retaining the overall relationships and statistical characteristics of the data.

These 4 techniques are discussed in greater detail in chapter 6.
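The following minimal Python sketch illustrates the data masking idea. It uses deterministic pseudonymization with HMAC-SHA256 and a secret key. This is an illustrative choice, not a description of any particular product. Because the same input always maps to the same pseudonym, a masked SSN still joins across tables. The sketch does not preserve the original value's format. `MASKING_KEY`, `mask_value`, and `mask_rows` are hypothetical names.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secrets manager, never from code.
MASKING_KEY = b"replace-with-a-managed-secret"

def mask_value(value: str, prefix: str) -> str:
    """Deterministically replace a sensitive value with a pseudonym.

    Same input -> same output, so joins on the masked column still work,
    but the pseudonym can't be read back into the original value.
    """
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"

def mask_rows(rows, columns):
    """Return masked copies of `rows`; `columns` maps column name -> pseudonym prefix."""
    masked = []
    for row in rows:
        new_row = dict(row)  # never modify the source rows
        for column, prefix in columns.items():
            if new_row.get(column) is not None:
                new_row[column] = mask_value(str(new_row[column]), prefix)
        masked.append(new_row)
    return masked

customers = [{"ssn": "123-45-6789", "name": "Ann Lee", "tier": "gold"}]
claims = [{"ssn": "123-45-6789", "amount": 420.0}]

masked_customers = mask_rows(customers, {"ssn": "SSN", "name": "NAME"})
masked_claims = mask_rows(claims, {"ssn": "SSN"})
# The masked SSN is identical in both tables, so the customer-claim link survives.
```

Keeping the key secret matters. Without it, low-entropy values such as SSNs could be recovered by pseudonymizing every possible value and comparing the results.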

Here are some other, less common methods:

- Copula models discover correlations and dependencies within the production datasets, and then use them to generate new, realistic data.

- Data augmentation applies supplementary techniques – such as flipping, rotation, scaling, and translation – to existing values to create new data.

- Random sampling and noise injection draw new data points from known distributions, or add random noise to existing data, to create new data points that closely resemble real-world data.
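A Gaussian copula over two numeric columns illustrates the copula approach. The sketch below uses only the Python standard library and works in four steps:

1. Convert each column to normal scores by rank.
2. Estimate the correlation of those scores.
3. Sample correlated normal pairs.
4. Map each pair back through each column's empirical quantiles.

This is a simplified two-column illustration. `gaussian_copula_sample` and the sample columns are hypothetical. Production copula tools handle many columns, categorical fields, and ties more carefully.

```python
import math
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()  # standard normal N(0, 1)

def normal_scores(values):
    """Rank-transform a column to standard-normal scores (the copula step)."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    scores = [0.0] * n
    for rank, i in enumerate(order, start=1):
        scores[i] = STD_NORMAL.inv_cdf(rank / (n + 1))  # rank -> (0, 1) -> z
    return scores

def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def quantile(sorted_vals, u):
    """Empirical quantile with linear interpolation, so outputs need not equal source values."""
    pos = u * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])

def gaussian_copula_sample(col_a, col_b, n_samples, seed=0):
    """Fit a two-column Gaussian copula, then sample n_samples synthetic (a, b) pairs."""
    rho = pearson(normal_scores(col_a), normal_scores(col_b))  # dependence on the normal scale
    sorted_a, sorted_b = sorted(col_a), sorted(col_b)          # marginals, kept separately
    rng = random.Random(seed)
    rows = []
    for _ in range(n_samples):
        g1, g2 = rng.gauss(0, 1), rng.gauss(0, 1)
        z1 = g1
        z2 = rho * g1 + math.sqrt(max(0.0, 1 - rho ** 2)) * g2  # correlated normal pair
        rows.append((quantile(sorted_a, STD_NORMAL.cdf(z1)),
                     quantile(sorted_b, STD_NORMAL.cdf(z2))))
    return rows, rho

# Hypothetical source columns: age and a correlated income.
ages = [20 + i for i in range(40)]
incomes = [1000 * a + (i % 5) * 300 for i, a in enumerate(ages)]
synthetic_rows, rho = gaussian_copula_sample(ages, incomes, n_samples=2000, seed=42)
```

The design separates the two concerns. Each column keeps its own distribution (the marginals), while the copula carries only the dependence between them.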

03

What are common synthetic data generation use cases?

There are 2 primary use cases that rely on synthetic data solutions:

- Software testing needs compliant synthetic test data provisioned to test environments, to ensure that the applications being developed perform as expected.

- Machine Learning (ML) model training relies on synthetic data generation to supplement existing datasets when production data is scarce or non-existent.

Each of these synthetic data use cases will be discussed in greater detail in chapters 4 and 5.

Additional use cases include:

- Privacy-compliant data sharing uses fictitious data to distribute datasets internally (to other domains) or externally (to partners or other authorized third parties) without revealing PII. Good examples of this are synthetic financial data and synthetic patient data.

- Product design deploys fake data to provide standardized benchmarks for evaluating product performance in a controlled environment.

- Behavioral simulations employ artificial data to explore different scenarios, validate models, and test hypotheses without using real-life data.

In our recent survey of 300 data pros, 53% of companies listed edge case testing as their top use case for synthetic data:

[Charts: Top use cases for synthetic data; Edge case testing, by industry]

Source: K2view 2025 State of Test Data Management report

Financial service providers, such as banks and insurance companies, lead the way in synthetic data adoption, particularly for edge case testing.

04

How to use synthetic data for testing

Test data generation plays a critical role in software testing by creating representative datasets specifically designed to evaluate the functionality, performance, and reliability of an application under development. It’s typically used when production data isn’t accessible, or when testing new functionality for which production data isn’t available.

Test data generation must be configurable, enabling data teams to request the amount and type of data they want to generate, as well as the characteristics it should have.

Synthetic test data, which is commonly used to test applications in a closed test environment before deployment to production, provides several benefits to testing teams. Not only does it give them total control over the datasets in use, but it also protects data privacy by delivering fake, but lifelike, information. Fictitious data can also be more efficient to use than production data because it allows testers to generate large amounts of test data quickly and easily.

Synthetic test data is used for:

- Progression testing, which tests new functionality that has been developed

- Negative testing, which ensures that the application handles invalid inputs correctly

- Boundary testing, which tests the limits an app can handle, like the largest input, or the maximum number of users

- Load testing, which stress-tests a system to ensure that it can accommodate massive amounts of data or simultaneous users
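The Python sketch below shows how synthetic test data can target these test types. It derives boundary, negative, and load-test values from a single field specification. `IntFieldSpec` and the validation rule in `is_valid` are assumptions. They stand in for whatever the application under test actually enforces.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class IntFieldSpec:
    """Hypothetical spec for an integer input with inclusive limits."""
    name: str
    min_value: int
    max_value: int

def is_valid(spec, value):
    """The rule the application under test is assumed to enforce."""
    return (isinstance(value, int) and not isinstance(value, bool)
            and spec.min_value <= value <= spec.max_value)

def boundary_values(spec):
    """Boundary testing: values at, and just inside, each limit."""
    lo, hi = spec.min_value, spec.max_value
    return sorted({lo, lo + 1, hi - 1, hi})

def negative_values(spec):
    """Negative testing: inputs the application should reject."""
    return [spec.min_value - 1, spec.max_value + 1, None, "", "12abc", True]

def load_values(spec, n, seed=0):
    """Load testing: a large, reproducible batch of valid values."""
    rng = random.Random(seed)
    return [rng.randint(spec.min_value, spec.max_value) for _ in range(n)]

quantity = IntFieldSpec("order_quantity", min_value=1, max_value=999)
```

For example, `boundary_values(quantity)` returns `[1, 2, 998, 999]`. Every item in `negative_values(quantity)` should be rejected.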

Synthetic test data is critical for testing and DevOps teams when there isn’t enough complete or relevant real-life data at hand. Not only does it reduce the non-compliance and security risks associated with using actual data in a test environment, but it’s also ideal for validating new applications, for which no data exists yet. In such cases, testing teams can match their requirements to the closest profiles available.

For test data management teams, whether the data is real or fake may not be an important criterion. More critical are the balance, bias, and quality of the datasets – and that the data maximizes test coverage. The benefits of synthetic test data include:

- Extended data coverage

  By controlling the specifications of the generated data, testing teams can synthesize the precise data they need for their specific test cases, to ensure full test coverage.

- Increased speed

  It’s a lot quicker and easier to define the parameters for synthetic data generation than to provision data subsets from disparate systems in the higher environment.

- Enhanced data protection

  As opposed to data masking tools that are used to protect production data, synthetic data generation solutions create fake data that closely resembles real data, but without actual values that could lead to the identification of individuals.

When comparing synthetic test data with test data masking, IT and testing teams must decide which approach best suits their needs.

05

How to use synthetic data for AI training

Artificial data is used to train AI models because it's typically provisioned more quickly and easily than real data. The synthetic datasets let the model practice its skills in a closed environment before going live in production. The model learns patterns from the synthetic data and gets better at its task.

Data scientists often prefer synthetic over production data for training AI models, for the following reasons:

- Augmentation

  Fake data is often used to supplement the original dataset by adding anomalies, noise, or other variations. Such additions improve the model’s ability to handle a wider range of input conditions, making it more versatile and robust.

- Diversity

  Real-life datasets don’t necessarily capture all possible scenarios, leading to biases or limited generalization. Fictitious data can introduce more diverse scenarios to ensure the model learns how to deal with a broader range of situations and inputs.

- Imbalance

  At times, a dataset has imbalanced classes, for example, when a particular class is either over- or under-represented. Artificial data can address the imbalance by generating additional examples of the minority class, so that classes are more evenly represented.

- Privacy

  When handling sensitive information, fake data can be used to generate similar information without revealing an individual’s personal details. This lets model developers and testers use the data more freely while maintaining data privacy. Key privacy-driven synthetic data users include clinics and hospitals (synthetic patient data) and banks and brokerages (synthetic financial data).

- Scarcity

  Sometimes, provisioning enough real-world data for training ML models can be extremely difficult. Fabricated data can augment available real data, increasing the size of the dataset to enable the model to learn more effectively.
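For the imbalance case, one widely used technique is SMOTE (Synthetic Minority Over-sampling Technique). SMOTE creates new minority rows by interpolating between existing ones. The following standard-library Python sketch simplifies that idea for numeric features and a binary label. It is not a drop-in replacement for a maintained library implementation, and the sample rows and labels are invented.

```python
import random

def smote(minority, n_new, k=3, seed=0):
    """SMOTE-style oversampling for numeric feature tuples.

    Each synthetic row lies on the segment between a random minority row and
    one of its k nearest minority neighbours. Needs at least 2 minority rows.
    """
    rng = random.Random(seed)

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [minority[j] for j in range(len(minority)) if j != i]
        neighbours = sorted(others, key=lambda p: sq_dist(base, p))[:k]
        partner = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (p - b) for b, p in zip(base, partner)))
    return synthetic

def balance_binary(rows, labels, minority_label, k=3, seed=0):
    """Top up the minority class until both classes have the same count."""
    minority = [r for r, y in zip(rows, labels) if y == minority_label]
    n_majority = len(labels) - len(minority)
    new_rows = smote(minority, max(0, n_majority - len(minority)), k, seed)
    return rows + new_rows, labels + [minority_label] * len(new_rows)

# Invented data: 10 "ok" rows and 3 "fraud" rows.
rows = [(float(x), float(x % 3)) for x in range(10)] + [(20.0, 5.0), (21.0, 6.0), (22.0, 5.5)]
labels = ["ok"] * 10 + ["fraud"] * 3
balanced_rows, balanced_labels = balance_binary(rows, labels, minority_label="fraud")
```

Interpolating between real neighbours keeps new rows inside the region the minority class already occupies. Random duplication, by contrast, only repeats existing points.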

06

What are the core synthetic data techniques?

The 4 core synthetic data generation techniques are:

- Generative AI

  Generative AI uses ML models, including Generative Pre-trained Transformers (GPT), Generative Adversarial Networks (GANs), and Variational Auto-Encoders (VAEs). The models’ algorithms learn from existing data to create an entirely new synthetic dataset that closely resembles the original.

  - GPT is a family of language models pre-trained on large text corpora. Applied to tables (for example, with rows serialized as text), GPT models can generate lifelike synthetic tabular data. GPT-based synthetic data generation solutions learn and replicate patterns from the training data, which is useful for augmenting tabular datasets and generating realistic tabular data for ML tasks.

  - GANs are based on "generator" and "discriminator" neural networks. The generator creates synthetic data, while the discriminator tries to distinguish real data from synthetic data. During training, the generator learns to produce data that the discriminator can’t tell apart from real data, eventually resulting in a high-quality synthetic dataset that closely resembles real data.

  - VAEs are based on an "encoder" and a "decoder". The encoder compresses the patterns and characteristics of actual data into a summary of sorts. The decoder attempts to turn that summary back into realistic data. For tabular data, VAEs create fake rows of information that still follow the same patterns and characteristics as the real data.

- Rules engine

  A rules engine creates data via user-defined business policies. Intelligence can be added to the generated data by referencing the relationships between the data elements, to ensure the relational integrity of the generated data.

- Entity cloning

  Entity cloning extracts the data for a selected business entity (e.g., specific customer or loan) from all underlying sources, masking and cloning it on the fly. Since unique identifiers are created for each cloned entity, it’s ideal for quickly generating the massive amounts of data needed for load and performance testing.

- Data masking

  Data masking retains the statistical properties and characteristics of the original production data, while protecting sensitive or personal information. It replaces private data with pseudonyms or altered values, ensuring privacy while preserving utility.
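A rules engine with relational awareness can be sketched in a few lines of Python. In this hypothetical example, each customer field has its own rule, and orders are generated per customer. As a result, every foreign key points at an existing parent, and every order date respects a cross-table rule. The field names, value ranges, and the `CUSTOMER_RULES` mapping are illustrative assumptions.

```python
import random
from datetime import date, timedelta

# Hypothetical rules: one generator function per field, keyed by field name.
CUSTOMER_RULES = {
    "customer_id": lambda rng, i: f"C{i:05d}",
    "segment": lambda rng, i: rng.choice(["retail", "smb", "enterprise"]),
    "signup_date": lambda rng, i: date(2024, 1, 1) + timedelta(days=rng.randint(0, 364)),
}

def generate_customers(n, rng):
    """Apply every field rule to build n parent rows."""
    return [{field: rule(rng, i) for field, rule in CUSTOMER_RULES.items()} for i in range(n)]

def generate_orders(customers, max_orders, rng):
    """Child rows are generated per parent, so every foreign key is valid by construction."""
    orders = []
    for customer in customers:
        for _ in range(rng.randint(0, max_orders)):
            orders.append({
                "order_id": f"O{len(orders) + 1:06d}",
                "customer_id": customer["customer_id"],  # FK taken from a generated parent
                # Cross-table rule: an order can't predate the customer's signup.
                "order_date": customer["signup_date"] + timedelta(days=rng.randint(0, 180)),
                "amount": round(rng.uniform(5, 500), 2),
            })
    return orders

rng = random.Random(2024)
customers = generate_customers(50, rng)
orders = generate_orders(customers, max_orders=4, rng=rng)
```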

Here’s a synopsis of the pros and cons for each synthetic data generation method:

| Method | Pros | Cons | Key reason for use |
|---|---|---|---|
| Generative AI | Speed (time to data) | | |
| Rules engine | Creates large quantities of data, without having to access production data | | |
| Entity cloning | Instantly generates large datasets for testing and ML training | | Performance and load testing |
| Data masking | | | |

07

What capabilities should synthetic data tools include?

There are various synthetic data generation solutions on the market today. Before selecting one, make sure it can:

- Generate imitation data to support a broad set of use cases:

  - Software testing
  - Training ML models
  - Privacy-compliant data sharing
  - Building product demos and prototypes
  - Behavioral simulations

- Support the 4 key synthetic data generation techniques:

  - Generative AI
  - Rules engine
  - Entity cloning
  - Data masking

- Manage the synthetic data lifecycle end to end:

  - Extract training data from all relevant source systems
  - Subset the training data to improve accuracy
  - Mask data to ensure compliance
  - Generate new data via any combination of data generation techniques
  - Reserve synthetic data to prevent users from overwriting each other’s data
  - Version the generated data to allow rolling back to prior versions
  - Load the data to the target systems

- Mask data

  Automatically discover and mask personally identifiable information (PII) and other sensitive data in the source data used to generate synthetic data, to ensure the privacy and compliance of production-sourced data.

- Maintain relational integrity

  Leverage metadata, schemas, and rules to learn the hierarchies and relationships between data objects across data sources, so that the generated data stays relationally consistent.

- Access all data sources and targets

  Easily connect to, and integrate with, the source and target data stores relevant for the synthetic data.

- Serve yourself for greater agility

  Provide testing and data science teams with self-service tools to control and manage the data generation process (e.g., configure and customize data generation functions) without depending on data & AI teams.

- Integrate into CI/CD and ML automation pipelines

  Easily integrate the synthetic data management process into your testing CI/CD and ML pipelines via APIs.
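The PII discovery part of the "Mask data" capability can be approximated by matching sampled column values against patterns. The Python sketch below is deliberately simple. The regular expressions in `PII_PATTERNS`, the 80% match threshold, and placeholder-style masking are all assumptions. Real tools typically combine patterns with metadata, dictionaries, and ML classifiers.

```python
import re

# Hypothetical detection patterns; real classifiers use far richer rules.
PII_PATTERNS = {
    "email": re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$"),
    "us_phone": re.compile(r"^\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}

def classify_columns(table, threshold=0.8):
    """Tag a column with a PII type if at least `threshold` of its non-empty values match."""
    found = {}
    for column, values in table.items():
        sample = [str(v) for v in values if v not in (None, "")]
        if not sample:
            continue
        for pii_type, pattern in PII_PATTERNS.items():
            share = sum(1 for v in sample if pattern.match(v)) / len(sample)
            if share >= threshold:
                found[column] = pii_type
                break
    return found

def mask_columns(table, classification):
    """Simplest possible masking: replace each non-empty PII value with a typed placeholder."""
    return {
        column: [f"<{classification[column]}>" if v not in (None, "") else v for v in values]
        if column in classification else list(values)
        for column, values in table.items()
    }

source = {
    "contact": ["ann@example.com", "bob.smith+x@test.co.uk", None],
    "phone": ["555-123-4567", "(555) 987-6543", "555.222.0000"],
    "tax_id": ["123-45-6789", "987-65-4321", ""],
    "city": ["Austin", "Boston", "Denver"],
}
pii_columns = classify_columns(source)
masked = mask_columns(source, pii_columns)
```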

08

How to generate synthetic data via business entities

Enterprises are turning to entity-based synthetic data generation software to create realistic but fake data, whose referential integrity is always enforced.

When artificial data is generated by business entity (customer, device, order, etc.), all the relevant data for each business entity is always generated, is contextually accurate, and consistent across systems.

The entity data model captures and classifies all the fields to be generated, across all systems, databases, and tables. It enforces referential integrity of the generated data by serving as a blueprint for the data generator – regardless of the synthetic data generation technique(s) employed:

- Generative AI: A GenAI approach is used to auto-discover PII and then train the model to create synthetic data based on the highest standards of AI data quality.

- Rules engine: A data generation rule is associated with each field in the entity schema, and the rules are auto-generated based on the field classifications.

- Entity cloning: A single instance of a business entity is extracted and masked from the source systems, and is then cloned as many times as required, while generating new identifiers for each cloned entity.

- Data masking: When provisioning production data for software testing and analytics, the entity data is masked as a unit, ensuring referential integrity of the masked data.
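The entity cloning step can be sketched as follows, assuming the source entity has already been extracted and masked. Each clone receives a new customer identifier, and every child row in the entity is rewritten to reference it. That rewrite keeps clones unique and referentially consistent. The entity shape (`customer`, `orders`, `tickets`) and the ID format are hypothetical.

```python
import copy
import itertools

def clone_entity(entity, copies, id_source):
    """Clone one (already masked) customer entity, giving each clone new identifiers.

    Every child row's customer_id is rewritten to the clone's new id, so each
    clone stays referentially consistent across all of its tables.
    """
    clones = []
    for _ in range(copies):
        clone = copy.deepcopy(entity)
        new_id = f"C{next(id_source):08d}"
        clone["customer"]["customer_id"] = new_id
        for table in ("orders", "tickets"):
            for row in clone[table]:
                row["customer_id"] = new_id
                row["id"] = f"{new_id}-{row['id']}"  # child ids stay unique per clone
        clones.append(clone)
    return clones

# Hypothetical, already-masked source entity.
masked_entity = {
    "customer": {"customer_id": "C-SRC", "name": "NAME_3f9a", "tier": "gold"},
    "orders": [{"id": "O1", "customer_id": "C-SRC", "amount": 120.0},
               {"id": "O2", "customer_id": "C-SRC", "amount": 35.5}],
    "tickets": [{"id": "T1", "customer_id": "C-SRC", "status": "closed"}],
}
clones = clone_entity(masked_entity, copies=1000, id_source=itertools.count(1))
```

Passing the ID source in as an iterator lets several cloning runs share one counter, so identifiers never collide across runs.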

09

What are the top synthetic data tools for 2026?

There are many providers of tabular synthetic data generation software. The market is continually evolving, with vertical specialization tools, pure-play horizontal platforms, and extensions of existing data management platforms.

Here's a list of the top 6 synthetic data companies:

- K2view

  K2view goes beyond synthetic data generation with end-to-end management of the entire synthetic data lifecycle, including source data extraction, subsetting, pipelining, and synthetic data operations. It uses a combination of techniques to generate accurate, compliant, and realistic synthetic data for software testing and ML model training. Patented entity-based technology ensures referential integrity of the generated data by creating a schema which serves as a blueprint for the data model. K2view has been successfully implemented in numerous Fortune 500 companies around the world.

- Gretel

  Gretel provides a synthetic data platform for developers and ML/AI engineers who use the platform's APIs to generate anonymized and safe synthetic data while preserving data privacy.

- MOSTLY AI

  The MOSTLY AI synthetic data platform enables enterprises to unlock, share, fix, and simulate data. Although similar to actual data, its synthetic data retains valuable, granular-level information, while ensuring private information is protected.

- Syntho

  The Syntho AI-based engine generates a completely new, artificial dataset that reproduces the statistical characteristics of the original data. It allows users to minimize privacy risk, maximize data utility, and boost innovation through data sharing.

- YData

  The YData data-centric platform enables the development and ROI of AI applications by improving the quality of training datasets. Data teams can use automated data quality profiling and improve datasets, leveraging state-of-the-art synthetic data generation.

- Hazy

  Hazy models are capable of generating high-quality synthetic data with a differential privacy mechanism. Data can be tabular, sequential (containing time-dependent events, like bank transactions), or dispersed through several tables in a relational database.

10

What is the future of synthetic data?

Synthetic data generation processes and solutions are evolving at a rapid pace. The following areas promise to introduce innovation that delivers better business outcomes across synthetic data use cases.

-

Synthetic data operations

The generation of artificial data is just one step in the synthetic data lifecycle. Data teams are now seeking methods and solutions to manage and automate the entire synthetic data lifecycle.

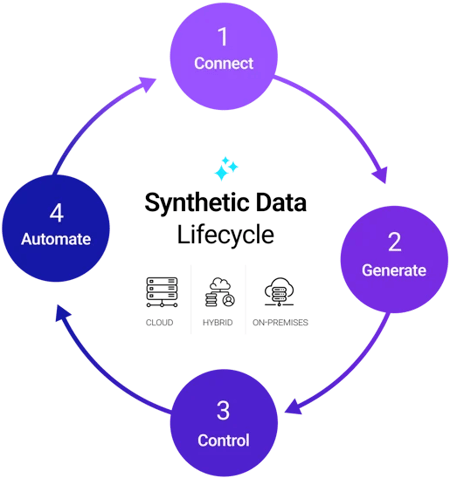

The synthetic data lifecycle can be divided into 4 basic phases:

1. Connect to data sources and discover PII automatically.

2. Generate the right synthetic data for each test case on demand.

3. Control the generated data via reserving, aging, versioning, and rollback techniques.

4. Automate your testing workflows by integrating synthetic data into your CI/CD pipelines.

-

Improved data quality, accuracy, and reliability

Since data professionals rely on accurate and high-quality data for their workloads, synthetic data companies will be driven to continually optimize their synthetic data generation algorithms, and solutions will emerge that generate vertical-specific synthetic data.

-

Ethical and legal perspectives

With the spread of synthetic data, legislators and regulators are paying more attention to its ethical and legal implications. IT and business teams need to be aware of these issues, and take them into account, as they develop.

-

Integration with production data

By integrating fake data with real-life data, data teams hope to generate more realistic and comprehensive datasets. For example, fake data could be used to close any gaps in actual datasets, augment real-life information to cover a broader scope of edge cases, and create test data to cover new application functionality being developed.

-

Emerging use cases

Fictitious data is increasingly being used in new applications, such as autonomous automobiles and virtual reality. Researchers are exploring how artificial data can be used to improve the performance of AI systems in these, and other, emerging technologies.

11

Conclusion and key takeaways

Synthetic data generation helps teams move faster with realistic datasets that preserve the statistical properties of real data, without exposing real PII. As privacy requirements tighten and production data becomes harder to access across multiple sources, synthetic data is becoming a core capability for enterprise testing and AI initiatives.

Key takeaways:

- Choose the right generation method per use case

  The four common approaches are generative AI, rules-based generation, entity cloning, and data masking, each suited to different needs across the SDLC and analytics.

- Treat synthetic data as a lifecycle

  Enterprise-ready programs manage the full lifecycle (prepare, generate, operate, and deliver), including integration into pipelines and controls like versioning and rollback.

- Prioritize quality, not just privacy

  Synthetic data must be not only safe, but also accurate enough for testing and model training, including relationships and edge cases.

- Use entity-based generation for realism at scale

  Generating by business entity (such as customer or invoice) helps maintain completeness and consistency across disparate systems.

- Align to the biggest value drivers

  The most common drivers are software testing and AI model training, especially when production data is restricted, scarce, or too risky to use.

- Look for an end-to-end, enterprise-ready solution

  Point tools that only generate tabular data for a single use case can’t keep up with modern, multi-source demands. Favor a platform that supports multiple techniques and broad enterprise use cases, with governance built in.

12

Synthetic data FAQs

1. How do I decide whether to generate fully synthetic data or a hybrid of real and synthetic data?

Choose fully synthetic when you want to minimize privacy exposure and remove dependency on production access. Choose hybrid when you need a small amount of real data to capture rare patterns or complex relationships, then expand coverage with synthetic.

Use these practical decision cues:

- Fully synthetic: Your data access is slow or blocked, your privacy risk is high, and you need “infinite” volume quickly.

- Hybrid: You’d like to preserve specific distributions or relationships, but still want privacy-safe sharing and broad scenario coverage. In that case, keep the “real” portion small, gated, and audited, and use synthetic data for scale.

2. What KPIs show synthetic data is working?

Pick KPIs that prove impact in speed, coverage, and risk reduction:

- Time-to-data: Hours/minutes to provision a usable dataset (vs days/weeks)

- Test cycle speed: Reduced lead time for CI/regression runs due to on-demand datasets

- Coverage: Measurable increase in edge-case or scenario coverage (not just row counts)

- Privacy risk gates: Pass rate on re-identification or leakage checks, as well as audit trail completeness

- AI utility: Comparable model performance on downstream tasks (plus stability across segments)

3. How can I measure realism without accidentally re-identifying people?

Go for utility-based realism, instead of gut feeling (“this row looks like production”).

Use checks like:

- Statistical similarity: Key distributions, correlations, and missing-value patterns

- Constraint satisfaction: Valid ranges, formats, and domain rules (IDs, dates, and state transitions)

- Downstream performance: Do your analytics queries, tests, and AI pipelines behave similarly on synthetic data?

Include privacy risk testing as a release gate before broad sharing.
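One way to implement the statistical similarity check is to compare a real and a synthetic column using two measures. The first is the two-sample Kolmogorov-Smirnov statistic, and the second is the difference in null rates. Both are aggregate measures, so no individual record is inspected. The function names are illustrative. SciPy provides a tested `scipy.stats.ks_2samp` if you prefer not to hand-roll the statistic.

```python
from bisect import bisect_right

def ks_statistic(real, synthetic):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs.

    0 means identical empirical distributions; 1 means completely separated samples.
    """
    a, b = sorted(real), sorted(synthetic)
    gap = 0.0
    for x in set(a) | set(b):  # empirical CDFs only change at observed values
        gap = max(gap, abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b)))
    return gap

def null_rate(values):
    return sum(v is None for v in values) / len(values)

def compare_column(real, synthetic):
    """Aggregate realism checks for one column (KS on non-null values, plus null-rate gap)."""
    return {
        "ks": ks_statistic([v for v in real if v is not None],
                           [v for v in synthetic if v is not None]),
        "null_rate_gap": abs(null_rate(real) - null_rate(synthetic)),
    }
```

For example, `ks_statistic([1, 2, 3], [10, 11, 12])` is `1.0`, because the samples don't overlap at all.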

4. How do I validate relational integrity?

Relational integrity validation means confirming the synthetic dataset behaves like a real relational system, in terms of:

- PK/FK validity: There are no orphan records, and keys are unique where required.

- Cardinality checks: One-to-many and many-to-many relationships match expectations.

- Cross-table business rules: For example, “A closed account must have a closure date” or “Every transaction references a valid account”.

- Sequence-aware integrity: Ordering and transitions are plausible for time-series and event data.
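The checks above translate directly into code. This Python sketch runs primary-key, orphan, cardinality, and business-rule checks over in-memory rows. The `accounts` and `transactions` tables and the `closed_has_date` rule are invented examples. In practice, the same logic is often expressed as SQL queries against the target database.

```python
from collections import Counter

def duplicate_keys(rows, key):
    """PK check: key values that appear more than once."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)

def orphans(children, fk, parents, pk):
    """FK check: child rows whose foreign key has no matching parent."""
    parent_keys = {row[pk] for row in parents}
    return [row for row in children if row[fk] not in parent_keys]

def cardinality_violations(children, fk, max_per_parent):
    """Cardinality check: parents referenced by more children than expected."""
    counts = Counter(row[fk] for row in children)
    return sorted(k for k, n in counts.items() if n > max_per_parent)

def rule_violations(rows, rule):
    """Business-rule check: rows for which rule(row) is False."""
    return [row for row in rows if not rule(row)]

accounts = [
    {"account_id": "A1", "status": "open", "closed_on": None},
    {"account_id": "A2", "status": "closed", "closed_on": None},  # breaks the rule below
]
transactions = [
    {"txn_id": "T1", "account_id": "A1"},
    {"txn_id": "T2", "account_id": "A9"},  # orphan: account A9 doesn't exist
]

def closed_has_date(row):
    return row["status"] != "closed" or row["closed_on"] is not None
```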

5. Is synthetic data always safe from a privacy perspective?

No – not automatically. Synthetic data can reduce exposure because it doesn’t need to contain real PII, but risk depends on how it’s generated and how closely it can leak information about the training data.

Safer-by-design practices include:

- Running leakage risk assessments before release

- Maintaining audit trails of data creation and access

- Using differential privacy methods when you need formal privacy guarantees
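The simplest case of a formal guarantee is the Laplace mechanism applied to a single count query, sketched here in Python. The sketch assumes each person contributes at most one record, so the count has sensitivity 1. Differentially private synthetic data generators apply this kind of calibrated noise inside model training, which this sketch does not attempt. The patient records are invented.

```python
import random

def laplace_noise(scale, rng):
    """Draw from Laplace(0, scale): the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def dp_count(records, predicate, epsilon, rng):
    """Laplace mechanism for a counting query.

    Assuming one record per person, adding or removing a person changes the count
    by at most 1 (sensitivity 1), so noise with scale 1/epsilon gives
    epsilon-differential privacy for this single release.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

patients = [{"age": 30 + (i % 50), "diabetic": i % 4 == 0} for i in range(1000)]
rng = random.Random(11)
noisy = dp_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
```

Smaller `epsilon` values mean more noise and stronger privacy. Each additional release consumes more of the overall privacy budget.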

6. How do I test for data leakage?

Treat leakage testing like a security gate before sharing, via:

- Similarity checks

Verify synthetic rows aren’t too close to any source record. - Outlier review

Investigate rare combinations that could be identifiable. - Inference testing

Evaluate membership/attribute inference risk where relevant. - Operational controls

Record your configs, versions, run IDs, and all those who accessed data.
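A common way to run the similarity check is distance to closest record (DCR). For each synthetic row, you find the nearest real row and flag anything suspiciously close. The sketch below assumes numeric features already scaled to comparable ranges. The `0.01` threshold is an arbitrary placeholder that would need calibrating for real data.

```python
import math

def distance_to_closest_record(synthetic, real):
    """For each synthetic row, the Euclidean distance to its nearest real row.

    Brute force, O(len(synthetic) * len(real)): fine for a sketch, not for large tables.
    """
    return [min(math.dist(s, r) for r in real) for s in synthetic]

def flag_too_close(synthetic, real, threshold):
    """Synthetic rows closer than `threshold` to some real record are potential leaks."""
    distances = distance_to_closest_record(synthetic, real)
    return [(s, d) for s, d in zip(synthetic, distances) if d < threshold]

real = [(0.10, 0.20), (0.50, 0.90), (0.80, 0.30)]
synthetic = [(0.12, 0.25), (0.50, 0.90), (0.30, 0.60)]
leaks = flag_too_close(synthetic, real, threshold=0.01)  # the exact copy (0.50, 0.90) is flagged
```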

7. What does “synthetic data operations” mean?

Synthetic data ops is everything required to make synthetic data repeatable, shareable, and governable, including:

- On-demand generation for tests, dev, analytics, and AI

- Standard quality and privacy gates before loading and distribution

- Lifecycle controls, like versioning, rollback, retention, aging, and refreshes

- Environment coordination, such as reserving data to avoid collisions

- Auditability, in terms of lineage and policy enforcement
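The lifecycle controls and reservation can be illustrated with a hypothetical in-memory Python store:

- Versions are append-only snapshots.
- Rollback republishes an older snapshot as the newest version.
- A reservation stops a second tester from claiming a row that another tester holds.

`SyntheticDataStore` is invented for illustration. A real implementation would persist data and handle concurrency.

```python
import copy

class SyntheticDataStore:
    """Hypothetical in-memory store showing versioning, rollback, and reservation."""

    def __init__(self):
        self._history = {}       # dataset name -> list of snapshots; index = version
        self._reservations = {}  # (dataset name, row key) -> owner

    def publish(self, name, rows):
        """Save a new immutable snapshot and return its version number."""
        versions = self._history.setdefault(name, [])
        versions.append(copy.deepcopy(rows))
        return len(versions) - 1

    def get(self, name, version=-1):
        """Return a copy of a snapshot (latest by default)."""
        return copy.deepcopy(self._history[name][version])

    def rollback(self, name, version):
        """Restore an earlier snapshot by re-publishing it; history is never rewritten."""
        return self.publish(name, self.get(name, version))

    def reserve(self, name, row_key, owner):
        """Claim a row for one tester; returns False if someone else already holds it."""
        holder = self._reservations.setdefault((name, row_key), owner)
        return holder == owner

    def release(self, name, row_key, owner):
        if self._reservations.get((name, row_key)) == owner:
            del self._reservations[(name, row_key)]

store = SyntheticDataStore()
v0 = store.publish("customers", [{"id": "C1", "tier": "gold"}])
v1 = store.publish("customers", [{"id": "C1", "tier": "silver"}])
```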

8. How do I make synthetic datasets reproducible?

Reproducibility comes from treating datasets like versioned artifacts:

- Version the spec: schema mappings, constraints, rules, and model settings.

- Pin the generator version and dependencies.

- Track lineage, from config, through run ID, to output dataset ID.

- Support rollback to prior dataset versions for regression testing.
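The practices above can be combined in a small Python sketch:

- The spec is fingerprinted with a canonical JSON hash.
- All randomness comes from one seeded generator.
- Each run records a lineage entry that links the run ID, spec, seed, generator version, and a content hash of the output.

`GENERATOR_VERSION`, `generate`, and the spec fields are hypothetical.

```python
import hashlib
import json
import random

GENERATOR_VERSION = "1.0.0"  # hypothetical; pin whichever generator you actually use

def fingerprint(obj):
    """Stable short hash of any JSON-serializable object (key order doesn't matter)."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]

def generate(spec, seed):
    """Toy generator: all randomness flows from one seeded RNG, never the global one."""
    rng = random.Random(seed)
    return [{"id": i, "amount": round(rng.uniform(spec["min_amount"], spec["max_amount"]), 2)}
            for i in range(spec["rows"])]

def run(spec, seed, run_id):
    """Generate a dataset and a lineage record tying config, run, and output together."""
    rows = generate(spec, seed)
    lineage = {
        "run_id": run_id,
        "spec_id": fingerprint(spec),
        "seed": seed,
        "generator_version": GENERATOR_VERSION,
        "dataset_id": fingerprint(rows),  # content-addressed output id
    }
    return rows, lineage

spec = {"rows": 100, "min_amount": 5.0, "max_amount": 500.0}
_, first = run(spec, seed=7, run_id="run-001")
_, second = run({"max_amount": 500.0, "rows": 100, "min_amount": 5.0}, seed=7, run_id="run-002")
```

Both runs produce the same `spec_id` and `dataset_id` even though the spec keys were written in a different order. That is the property that makes a regression rerun trustworthy.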

9. How do I validate synthetic data for AI models?

Validate against the task the model will actually perform, using:

- Train/evaluate comparisons: Train on synthetic data (or a mix of synthetic and real data) and evaluate on a representative holdout.

- Slice metrics: Compare performance across key segments to detect bias issues.

- Stress tests: Generate targeted edge scenarios to confirm expected behavior.
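Slice metrics can be computed with a few lines of Python. The sketch below assumes you already have predictions from two models on the same representative holdout: one model trained on real data and one trained on synthetic data. The predictions here are hard-coded, invented values. The sketch reports per-segment accuracy and the largest per-segment drop.

```python
from collections import defaultdict

def slice_accuracy(y_true, y_pred, segments):
    """Accuracy per segment (e.g., region or customer tier)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for truth, pred, segment in zip(y_true, y_pred, segments):
        totals[segment] += 1
        hits[segment] += int(truth == pred)
    return {s: hits[s] / totals[s] for s in totals}

def worst_slice_drop(baseline, candidate):
    """Largest per-segment accuracy drop of `candidate` relative to `baseline`."""
    return max(baseline[s] - candidate.get(s, 0.0) for s in baseline)

# Invented holdout labels, segments, and predictions from two hypothetical models.
y_true = [1, 0, 1, 1, 0, 0]
segments = ["a", "a", "b", "b", "b", "b"]
pred_real_trained = [1, 0, 1, 1, 0, 1]
pred_synth_trained = [1, 0, 0, 1, 0, 1]

real_scores = slice_accuracy(y_true, pred_real_trained, segments)
synth_scores = slice_accuracy(y_true, pred_synth_trained, segments)
```

Here both models score the same on segment `a`. The synthetic-trained model loses accuracy only on segment `b`, and an overall average alone would hide that kind of gap.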

10. What capabilities matter most when comparing synthetic data tools?

Focus on capabilities that support multiple methods, governance, and scale:

- Data shape support: Tabular, relational, time-series/event data

- Technique flexibility: Statistical, rule-based, generative models, with simulation (if needed)

- Quality and privacy gates: Validation, leakage testing, and audit logs

- Operational lifecycle: Versioning, reproducibility, automation and CI/CD integration

- Usability: Self-service controls with guardrails for different teams
