Data masking and tokenization are used to protect sensitive data. Discover where and when to use each – and how a business entity approach optimizes both.

Table of Contents

What is the best solution for your use case

Comparing data masking vs tokenization

Key advantages and disadvantages of each

Which one should you choose

Business entities deliver the best of both worlds

What's the best solution for your use case?

The need to protect sensitive data is driven primarily by internal company policies and by external regulations, such as GDPR, HIPAA, and PCI DSS.

Enterprises commonly use two methods to protect sensitive data and ensure compliance with mounting legal requirements: data masking (aka data anonymization) and data tokenization.

In this article, we’ll explain what data masking and tokenization are all about, their differences and similarities, their pros and cons, and how a Data Product Platform maximizes their performance.

Comparing data masking vs tokenization

When it comes to protecting sensitive data, such as Personally Identifiable Information (PII), and fulfilling compliance standards, both data masking tools and data tokenization tools offer effective solutions. However, it’s important to understand their differences and similarities.

Data masking defined

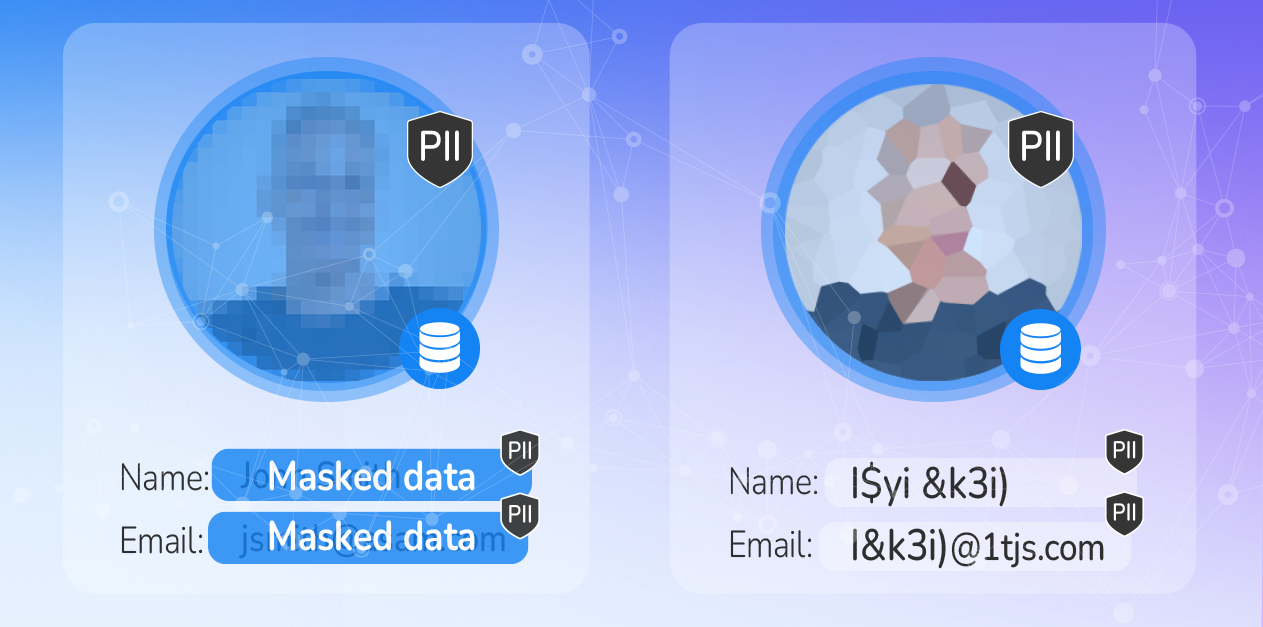

Data masking best practices call for replacing real, sensitive data with fictitious, yet statistically equivalent, data that can still support business processes. The masked version can’t be traced back to the original or reverse-engineered. Usually, this involves replacing sensitive information with scrambled values, without a mechanism to retrieve the original ones.

By modifying sensitive data in this way, the new version is worthless to unauthorized users, but still valuable to software and authorized personnel. An effective data masking tool ensures data consistency (referential integrity), and usability, across multiple databases and analytics platforms – statically or dynamically.
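To make the idea of consistent, one-way masking concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular masking tool works: the `mask_name` function, the `FAKE_NAMES` pool, and the demo key are all hypothetical. A keyed hash picks a fictitious name, so the same real name is masked the same way in every table (referential integrity), and no lookup table of originals is kept.

```python
import hashlib
import hmac

# Hypothetical pool of fictitious replacement names (illustrative only).
FAKE_NAMES = ["Alex Morgan", "Jamie Lee", "Sam Rivera", "Taylor Kim", "Robin Patel"]


def mask_name(real_name: str, secret_key: bytes) -> str:
    """Map a real name to a fictitious one, deterministically and one-way."""
    digest = hmac.new(secret_key, real_name.encode("utf-8"), hashlib.sha256).digest()
    index = int.from_bytes(digest[:8], "big") % len(FAKE_NAMES)
    return FAKE_NAMES[index]


# Assumption: in practice the key would come from a secrets manager.
key = b"demo-key-not-for-production"

customers = [{"id": 1, "name": "Maria Gonzalez"}]
orders = [{"order_id": 77, "customer_name": "Maria Gonzalez"}]

# The same input yields the same masked value in both tables.
masked_customers = [{**c, "name": mask_name(c["name"], key)} for c in customers]
masked_orders = [
    {**o, "customer_name": mask_name(o["customer_name"], key)} for o in orders
]
```

With such a small pool, different real names can map to the same fictitious name, so this sketch preserves consistency but not uniqueness.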

Data tokenization defined

Data tokenization obscures the meaning of sensitive data by replacing it with a valueless equivalent, or token, for use in databases or internal systems. Tokenization replaces sensitive data (in applications, databases, data repositories, and internal systems) with random data elements, such as a string of characters and/or digits, that have zero value in the event of a breach.

The tokenization process protects data at rest, as well as data in motion. If an application or user needs the real data value, the token can be “detokenized” back to the real data.
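The tokenize and detokenize round trip can be sketched in a few lines of Python. This is a simplified illustration, not a production design: the `TokenVault` class and the `tok_` prefix are hypothetical, and the vault is a plain in-memory dictionary, whereas a real vault would be encrypted and access-controlled.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a token vault (hypothetical, unencrypted)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so a value always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # extremely unlikely collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only callers with access to the vault can recover the real value.
        return self._token_to_value[token]


vault = TokenVault()
card_number = "4111 1111 1111 1111"
card_token = vault.tokenize(card_number)
```

The token is random, so it reveals nothing about the card number on its own. Reversibility comes entirely from the mapping stored in the vault.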

Here’s a side-by-side comparison:

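| | Data masking | Data tokenization |
|---|---|---|
| Definition | Replaces real, sensitive data with fictitious, yet statistically equivalent, data | Replaces sensitive data with a valueless equivalent, or token |
| Key use cases | | |
| Format preservation | Depends on the masking system understanding what the data represents | Tokens can be format-preserving |
| Reversibility | Irreversible: there is no mechanism to retrieve the original values | Reversible: a token can be detokenized back to the real data |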

Key advantages and disadvantages of each

Due to the differences outlined above, each method has its advantages and disadvantages:

Data masking

Advantages

- Reduced cyber risk

  Data masking addresses several critical threats, including data loss, data exfiltration, insider threats, insecure integrations with third-party software, and risks associated with cloud adoption.

- Preserved functionality

  Masking maintains data’s inherent functional properties, while rendering it useless to an attacker.

- Data sanitization

  Instead of deleting files, which could leave behind traces of data in storage media, sanitization replaces personal information with anonymized data.

Disadvantages

- Difficulty preserving formats, and ensuring referential integrity

  In order to create a meaningful, masked copy of production data, the data masking system must be able to understand what the data represents. If it can’t, it might not consistently preserve the format, or ensure referential integrity.

- Difficulty preserving gender, and semantic integrity

  When replacing names in a database, the data masking system must be aware of which names are male and which are female, otherwise gender distribution could be impacted. The same goes for the semantics, or the meaning, of the data.

- Difficulty maintaining data uniqueness

  If the original sensitive data is unique (such as a Social Security Number, phone number, or bank account number), the masking system must be able to interpret this, and provide unique masked values that will be used consistently throughout the enterprise.
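The format and uniqueness requirements above can be illustrated with a short Python sketch. It is a rough illustration only: `mask_digits` and `UniqueMasker` are hypothetical names, the key is a demo value, and the digit selection is slightly biased, so it should not be read as a vetted format-preserving scheme. Digits are replaced with keyed pseudo-random digits, separators stay in place, and a retry loop guarantees that two different originals never share a masked value.

```python
import hashlib
import hmac


def mask_digits(value: str, secret_key: bytes, attempt: int = 0) -> str:
    """Replace each digit with a keyed pseudo-random digit; keep separators."""
    message = f"{attempt}:{value}".encode("utf-8")
    digest = hmac.new(secret_key, message, hashlib.sha256).digest()
    out = []
    i = 0
    for ch in value:
        if ch.isdigit():
            out.append(str(digest[i % len(digest)] % 10))
            i += 1
        else:
            out.append(ch)  # dashes, spaces, etc. are preserved as-is
    return "".join(out)


class UniqueMasker:
    """Hands out masked values that are consistent and never reused."""

    def __init__(self, secret_key: bytes):
        self._key = secret_key
        # Bookkeeping for one masking run; discard it when the run ends.
        self._assigned = {}
        self._used = set()

    def mask(self, value: str) -> str:
        if value in self._assigned:
            return self._assigned[value]
        attempt = 0
        candidate = mask_digits(value, self._key, attempt)
        # Retry on a collision, or if the mask happens to equal the original.
        while candidate in self._used or candidate == value:
            attempt += 1
            candidate = mask_digits(value, self._key, attempt)
        self._assigned[value] = candidate
        self._used.add(candidate)
        return candidate


masker = UniqueMasker(b"demo-key-not-for-production")
masked_a = masker.mask("123-45-6789")
masked_b = masker.mask("987-65-4321")
```

Both masked values keep the `NNN-NN-NNNN` shape, differ from each other, and come back unchanged if the same original is masked again during the run.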


Data tokenization

Advantages

- Reduced cyber risk

  All sensitive data within databases and data lakes is replaced with non-sensitive tokens that have no exploitable value, so even in the case of a breach, personal information is never compromised, and financial fallout is avoidable.

- Reduced encryption cost

  Only the data within the tokenization system needs encryption, eliminating the need to encrypt all other databases.

- Simplified effort to comply with privacy regulations

  Data tokenization minimizes the number of systems that manage sensitive data, reducing the effort required for privacy compliance.

- Business continuity

  Tokens can be format-preserving, to ensure business continuity.

Disadvantages

The main disadvantages of data tokenization relate to storing original sensitive data in a centralized token vault, resulting in:

- Risk of a massive breach

  If a malicious attacker successfully breaks through the encrypted vault, all of your most valuable sensitive data is at risk. That’s why tokenization servers are stored in a separate, secure location.

- Bottlenecks, when scaling up data

  With some system architectures, a centralized token vault can stifle your ability to scale up data, so it’s important to consider the availability vs performance equation.

- Compromised referential and formatting integrity

  Many existing data tokenization solutions experience difficulty ensuring referential and formatting integrity of tokens across systems.

Which one should you choose?

When comparing data masking vs data tokenization, it’s important to understand that one approach isn’t inherently better than the other. Both solutions include sensitive data discovery and obfuscation, leading to compliance with privacy laws.

To decide which approach is best for each of your use cases, start by answering these questions:

- Where is sensitive data used most? Where is it at risk?

- Which industry-related privacy regulations and security standards is the organization subject to?

- What are the greatest vulnerabilities?

The answers to these questions indicate whether data masking or data tokenization is more appropriate for a given enterprise architecture.

Business entities deliver the best of both worlds

Entity-based technology can be used for both data masking and data tokenization. It delivers all of the data related to a specific business entity (such as a customer, payment, order, or device) to authorized data consumers. The data for each instance of a business entity is persisted and managed in its own individually encrypted Micro-Database™.

It performs structured and unstructured data masking on the fly, while maintaining referential integrity. Images, PDFs, text files, and other formats that contain sensitive data are secured with static and dynamic masking capabilities.

And with regard to tokenization, it essentially eliminates the risk of a massive data breach associated with centralized data vaults, the possibility of scaling bottlenecks, and any compromise in referential and formatting integrity.
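To see why splitting the vault by entity limits the blast radius of a breach, consider this simplified Python sketch. It is purely illustrative and is not K2view's implementation: the `EntityVaults` class, its methods, and the entity IDs are hypothetical, and the per-entity encryption described above is omitted for brevity.

```python
import secrets


class EntityVaults:
    """One small token store per business entity instance (hypothetical)."""

    def __init__(self):
        self._vaults = {}  # entity_id -> {token: original value}

    def tokenize(self, entity_id: str, value: str) -> str:
        vault = self._vaults.setdefault(entity_id, {})
        # Reuse an existing token for the same value within this entity.
        for token, stored in vault.items():
            if stored == value:
                return token
        token = secrets.token_hex(8)
        while token in vault:  # extremely unlikely collision
            token = secrets.token_hex(8)
        vault[token] = value
        return token

    def detokenize(self, entity_id: str, token: str) -> str:
        return self._vaults[entity_id][token]

    def exposed_by_breach(self, entity_id: str) -> list:
        """Values an attacker would see if only this entity's store leaked."""
        return sorted(self._vaults.get(entity_id, {}).values())


vaults = EntityVaults()
alice_token = vaults.tokenize("customer-1", "alice@example.com")
bob_token = vaults.tokenize("customer-2", "bob@example.com")
leaked = vaults.exposed_by_breach("customer-1")
```

In this layout, compromising the store for `customer-1` exposes only that customer's values, and each lookup touches one small store rather than a single shared vault.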

In terms of PII discovery, the entity-based approach introduces a game-changer: automatic profiling using a generative AI Large Language Model (LLM). A GenAI LLM lets you delve deeper into your data, accurately identifying and classifying even the most ambiguous or complex PII.

To summarize, a business entity approach that automatically discovers, masks, and tokenizes data, and stores it in decentralized Micro-Databases, makes data protection and compliance easier to achieve, and much more cost-effective, than other data anonymization tools.

Learn how one set of K2view data masking tools addresses all your masking and tokenization needs.