The complete guide to data agents

What are data agents? The bridge between agentic AI and enterprise data.

Last updated on Feb 12, 2026



Data agents connect agentic AI to live, trusted enterprise data, so AI can answer, decide, and act with speed and confidence.

01

Key takeaways

-

Most agentic AI projects fail in production, not because of LLM capabilities, but because enterprise data is fragmented, governed inconsistently, and difficult to operationalize at runtime.

-

Data agents act as a control layer between agentic AI systems to enterprise data, decoupling reasoning from data discovery, access, governance, and context assembly.

-

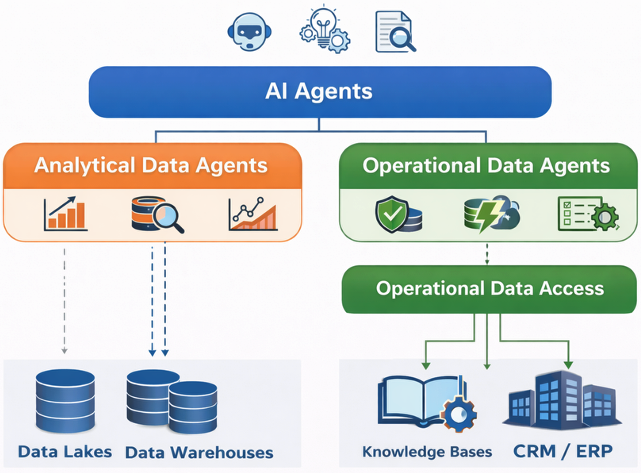

There are two distinct types of data agents: analytical data agents, optimized for historical analysis and insight, and operational data agents, optimized for real-time accuracy, governance, and action.

-

Minimum Viable Data (MVD) is a core design principle for operational data agents, ensuring that only task-relevant, entity-scoped, and governed data is delivered at runtime.

-

Entity-centric data products provide the operational data foundation that enables MVD, by organizing, governing, and synchronizing live information around business entities (customers, orders, devices, etc.).

-

Organizations can move agentic AI from isolated pilots to reliable, production-scale operational systems, by combining operational data agents with entity-centric data products.

02

Data agents defined: Bridging AI agents with enterprise data

As AI systems become more agentic, enterprise data becomes a limiting factor. Business data is fragmented across operational systems, analytical platforms, and unstructured knowledge sources, each with different schemas, access rules, and freshness requirements.

AI agents need to reason and act using this data, yet they lack a consistent way to determine what data is relevant, how current it must be, or how it should be safely accessed and reliably assembled into context at scale.

This is where data agents come in. Data agents serve as a control layer between AI agents and enterprise data, determining what data is needed, how it must be governed, and how it should be accessed, then assembling that data into task-ready context for AI agents to reason over.

Analytical vs operational data agents

Data agents fall into two broad categories – analytical data agents and operational data agents – based on the task they need to perform and the latency and action requirements involved.

The two types of data agents solve fundamentally different problems. Analytical agents optimize for scale, history, and insight, while operational agents optimize for accuracy, latency, governance, and action. Confusing the two leads to unreliable systems and the wrong data architecture.

By aligning each data agent type with the appropriate data foundation, organizations can support both large-scale analytical exploration and real-time, data-driven decisioning and operational action.

Analytical data agents

Analytical data agents typically query data directly from data lakes, data warehouses, or lakehouses, or access these platforms through data virtualization. This allows them to analyze broad datasets across time and domains, supporting tasks such as trend analysis, root-cause investigation, and forecasting.

Operational data agents

Operational data agents depend on a unified data access layer that combines data products for multi-source enterprise data retrieval with Retrieval-Augmented Generation (RAG) for knowledge search. They translate intent expressed in natural language into precise queries (SQL, APIs, and semantic search), then transform the retrieved data into coherent, task-ready context that AI agents can reliably reason over and act on.

Decoupling AI agents from data access establishes a clear control plane for context. It centralizes how data is discovered, governed, retrieved, and transformed so this logic is built once and reused everywhere, rather than re-implemented across every AI agent. This prevents duplication, reduces inconsistency, and allows AI agents to remain lightweight, portable, and focused on reasoning rather than infrastructure.

03

Examples of data agents

Here are some common examples of analytical and operational data agents:

1. Analytical data agents

Analytical data agents support business users, analysts, and data scientists who ask exploratory or aggregate questions about the business. These agents typically:

• Answer historical, trend-based, or comparative questions

• Operate over large time windows and datasets

• Tolerate higher latency

• Do not trigger operational actions

Examples:

• “Analyze VIP customer churn over the last 8 quarters in Chicago.”

• “Compare revenue growth by region and product over the past year.”

• “Analyze the correlation between support ticket volume and customer churn over the last 18 months.”

• “Break down average revenue per client by plan type and acquisition channel over the past two years.”

2. Operational data agents

Operational data agents operate in transactional contexts and are subject to strict latency, governance, and accuracy requirements. They drive workflows, decisions, and actions that must happen in near real time and often involve systems of record. Within this category, several common patterns emerge.

3. Entity insight data agents

Entity insight data agents answer questions about the current or recent operational state of a specific business entity, such as a customer, loan, work order, or device.

Examples:

• “Why is this customer’s bill higher this month vs last?”

• “Which services are currently active for this account?”

• “Is this customer eligible for refinancing, and what options are available based on their current terms and payment history?”

• “What is the current status of this work order, and are there any blocking issues or delays?”

• “Has this loan had any missed or late payments in the last 60 days, and what is its current risk status?”

• “Which components of this product are currently under warranty, and when do those warranties expire?”

4. Entity action data agents

Entity action data agents orchestrate safe updates and actions against backend systems for a specific entity.

Examples:

• Updating a customer’s service plan

• Applying a billing adjustment

• Suspending or reactivating an account

• Updating the status of a work order to "completed" after validation checks

• Approving a loan based on current terms and payment status

• Reassigning a field service task to a different technician

• Deactivating a device following a reported fault
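The "validation checks" pattern in the work order example can be sketched as a guard that runs before any update is applied. This is a minimal illustration only: the function name, the field names (`status`, `open_tasks`, `customer_signoff`), and the specific checks are hypothetical, and a real entity action data agent would apply the update against the system of record rather than an in-memory dictionary.

```python
def complete_work_order(order: dict) -> tuple[dict, list[str]]:
    """Return (order, problems); the status changes only if every check passes."""
    problems = []
    # Hypothetical validation checks for illustration.
    if order["status"] != "in_progress":
        problems.append("order is not in progress")
    if order["open_tasks"] > 0:
        problems.append("open tasks remain")
    if not order["customer_signoff"]:
        problems.append("customer sign-off missing")

    if problems:
        # Leave the order untouched and report why the action was blocked.
        return order, problems
    # All checks passed: return an updated copy instead of mutating the input.
    return {**order, "status": "completed"}, []


ready = {"status": "in_progress", "open_tasks": 0, "customer_signoff": True}
blocked = {"status": "in_progress", "open_tasks": 2, "customer_signoff": False}

updated, no_problems = complete_work_order(ready)
unchanged, problems = complete_work_order(blocked)
```

Returning the list of failed checks, rather than a bare true or false, lets the calling AI agent explain to the user why an action was not taken.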

5. Synthetic data agents

Synthetic data agents generate realistic, policy-compliant synthetic data on demand. Common use cases include:

• Test data management

• Machine learning model training

• AI agent testing and validation

These agents ensure that no sensitive or production data is exposed while still preserving structural and statistical fidelity.

6. Data compliance agents

Data compliance agents continuously scan non-production and lower environments to detect sensitive data exposure. They can:

• Identify PII or regulated data

• Flag violations of privacy or security policies

• Automatically mask sensitive fields based on policy

These agents help enforce compliance with regulations such as GDPR, CPRA, HIPAA, and DORA across the data lifecycle.
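As a rough sketch of the detect-and-mask behavior described above, the following uses two simple regular expressions to flag and mask sensitive-looking values in a record. The patterns, the `scan` and `mask` helpers, and the sample record are illustrative assumptions; production-grade PII detection typically needs far more than pattern matching.

```python
import re

# Hypothetical detection patterns; real policies would cover many more types.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan(record: dict) -> dict:
    """Return {field: [pattern names]} for fields that look sensitive."""
    findings = {}
    for field, value in record.items():
        hits = [name for name, rx in PATTERNS.items() if rx.search(str(value))]
        if hits:
            findings[field] = hits
    return findings


def mask(record: dict) -> dict:
    """Return a copy with detected patterns replaced (values become strings)."""
    masked = {}
    for field, value in record.items():
        text = str(value)
        for rx in PATTERNS.values():
            text = rx.sub("***", text)
        masked[field] = text
    return masked


record = {"note": "Contact jane@example.com", "ssn": "123-45-6789", "plan": "Fiber 200"}
findings = scan(record)
masked = mask(record)
```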

Unless otherwise noted, the remainder of this article focuses on operational data agents, which present more complex data architecture challenges than their analytical counterparts.

04

Inside data agents

Operational data agents are specialized AI agents that focus on one critical responsibility: connecting AI agents to multi-source enterprise data in a reliable, governed, and operational way. While AI agents focus on reasoning and decision-making, data agents handle data discovery, governed access, context assembly, and operational action.

Data agents run inside an agentic AI framework, as a dedicated data control layer. They interpret requests coming from AI agents, determine what data is required, and orchestrate access across operational data products and RAG-based retrieval pipelines. This separation allows AI agents to remain lightweight and portable, while data agents encapsulate data-specific logic.

How do data agents work?

Data agents use AI to dynamically reason about data access at runtime. They can:

- Interpret natural language intent and infer data requirements

- Identify the relevant data products, RAG pipelines, and systems to access

- Generate the appropriate data queries, RAG searches, and API calls

- Enforce data governance and security policies dynamically

- Transform and assemble results into task-ready context

- Safely execute updates or actions against systems of record when required

All of this happens in a few seconds, without custom code, manual integration, or the creation of new API endpoints.
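The runtime steps listed above can be pictured as a small pipeline. The sketch below is illustrative only and is not K2view's implementation: `DataRequest`, `CATALOG`, `POLICY`, `resolve_sources`, and `build_context` are hypothetical names, and simple keyword matching stands in for the LLM-based intent interpretation a real data agent would use.

```python
from dataclasses import dataclass

# Hypothetical catalog: task keyword -> sources that can serve it.
CATALOG = {
    "billing": ["customer_data_product", "billing_policy_rag"],
    "warranty": ["device_data_product"],
}

# Hypothetical governance policy: source -> roles allowed to read it.
POLICY = {
    "customer_data_product": {"support_agent"},
    "billing_policy_rag": {"support_agent", "analyst"},
    "device_data_product": {"field_tech"},
}


@dataclass
class DataRequest:
    intent: str      # natural-language request from the AI agent
    entity_id: str   # business entity in scope
    role: str        # caller role, used for governance checks


def resolve_sources(intent: str) -> list[str]:
    """Stand-in for intent interpretation: match catalog keywords."""
    text = intent.lower()
    return [s for key, sources in CATALOG.items() if key in text for s in sources]


def build_context(request: DataRequest) -> dict:
    """Select sources, apply the access policy, and assemble the context."""
    sources = resolve_sources(request.intent)
    allowed = [s for s in sources if request.role in POLICY.get(s, set())]
    return {
        "entity_id": request.entity_id,
        "sources": allowed,
        "denied": [s for s in sources if s not in allowed],
    }


support_ctx = build_context(DataRequest("Why is my billing higher?", "C-1001", "support_agent"))
analyst_ctx = build_context(DataRequest("billing question", "C-1001", "analyst"))
```

In this sketch the AI agent only ever sees the assembled context; source selection and policy checks stay inside the data agent, which is the decoupling the section describes.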

05

Challenges of connecting data agents to multi-source enterprise data

Consider an AI agent-assist customer service use case. To help a customer with a billing question, an AI agent needs accurate, up-to-date context about the customer’s account status, recent payments, active services, and billing history. This context is typically spread across multiple systems, such as a CRM platform, billing and payment systems, and a customer data warehouse.

As the AI agent attempts to assemble this context on demand, several challenges emerge:

- Accuracy and consistency

AI agents are only as reliable as the context they receive. Incomplete, outdated, or conflicting data leads directly to incorrect outputs. At the same time, overloading agents with excessive information increases variability, causing inconsistent responses when the same task is executed multiple times.

- Latency and user experience

Assembling context often requires querying multiple systems, unifying fragmented data, enforcing access and data quality policies at runtime, and transforming results into task-ready context. Without an AI-ready data layer, this process introduces latency. In operational scenarios, even small delays quickly degrade the user experience.

- Escalating operational costs

LLM costs scale with the volume of data processed. When AI agents are forced to ingest large amounts of irrelevant or redundant data, inference costs rise rapidly. At enterprise scale, with hundreds or thousands of agent interactions per day, this inefficiency becomes financially unsustainable.

- Security and regulatory exposure

Granting AI agents broad or direct access to enterprise systems unnecessarily expands the attack surface and increases the risk of sensitive data exposure. This makes it significantly harder to enforce fine-grained access controls, as well as GDPR, CPRA, HIPAA, and DORA compliance.
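The cost point can be made concrete with back-of-the-envelope arithmetic: daily input cost is roughly interactions × tokens per interaction × price per token. Every figure below (5,000 interactions per day, 40,000 versus 2,000 context tokens, and 3 USD per million tokens) is a hypothetical value chosen only to show the scaling, not a measured or quoted price.

```python
def daily_input_cost(interactions_per_day: int,
                     tokens_per_interaction: int,
                     usd_per_million_tokens: float) -> float:
    """Daily input-token cost: interactions x tokens x unit price."""
    total_tokens = interactions_per_day * tokens_per_interaction
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical figures for illustration only.
broad = daily_input_cost(5_000, 40_000, 3.0)   # agent ingests broad context
scoped = daily_input_cost(5_000, 2_000, 3.0)   # agent ingests scoped context
ratio = broad / scoped
```

Under these assumptions the broad-context agent costs 600 USD per day against 30 USD for the scoped one. Because the relationship is linear, cutting context size by a factor of 20 cuts input cost by the same factor.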

06

Minimum Viable Data (MVD): A core design principle for operational data agents

A foundational design principle for operational data agents is Minimum Viable Data (MVD). MVD means accessing the smallest, most relevant, and most precise dataset required for a data agent to perform a specific task accurately and safely.

In operational AI systems, this principle is enforced through entity-centric data access. Operational data agents reason and act at the level of business entities such as customers, orders, invoices, or devices.

MVD ensures that data access is scoped to the specific entity involved in the task, rather than broad datasets or system-level abstractions.

1. MVD is entity-centric by design

MVD is not about providing less data. It is about providing the right data, precisely scoped to a task and a business entity, across three dimensions:

1. Task relevance: Only data that directly contributes to the current task is accessed. Irrelevant attributes, historical records, or unrelated entities are excluded.

2. Context richness: Entity-level data is coupled with semantic metadata so the data agent understands not just the values, but their meaning, relationships, and constraints.

3. Data minimization: Data access is limited to the specific entity in scope, such as a single customer or account, significantly reducing privacy, security, and regulatory exposure.

2. Applying MVD in a customer service example

If a customer contacts support about upgrading their internet speed, the operational data agent does not need years of historical support tickets or unrelated account activity. The minimum viable data for this task is the current customer entity context, including the active plan, available upgrade options, contract terms, and relevant billing information.

Anything beyond this entity-scoped dataset increases cost and risk while often making the outcome less reliable.
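The internet upgrade example can be sketched as a projection over a single customer entity. The `TASK_FIELDS` mapping, the attribute names, and the sample customer record are all hypothetical; in practice the set of attributes a task needs would be derived at runtime rather than hard-coded.

```python
# Hypothetical mapping from a task to the attributes it requires.
TASK_FIELDS = {
    "upgrade_internet_speed": [
        "active_plan", "upgrade_options", "contract_terms", "billing_summary",
    ],
}


def minimum_viable_data(entity: dict, task: str) -> dict:
    """Return only the attributes of one entity that the task requires."""
    wanted = TASK_FIELDS[task]
    return {name: entity[name] for name in wanted if name in entity}


# Illustrative customer entity, including data the task does not need.
customer = {
    "customer_id": "C-1001",
    "active_plan": "Fiber 200",
    "upgrade_options": ["Fiber 500", "Fiber 1000"],
    "contract_terms": {"months_remaining": 7},
    "billing_summary": {"monthly_charge": 49.0},
    "support_tickets": ["(years of ticket history)"],
    "marketing_preferences": {"email": True},
}

context = minimum_viable_data(customer, "upgrade_internet_speed")
```

The support ticket history and marketing preferences never reach the AI agent, which is the data minimization dimension described earlier in this section.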

3. Why context matters as much as content

Minimal data without context lacks meaning. MVD depends on rich, entity-level metadata to ensure that even small datasets can be interpreted correctly via:

• Clear meaning for each data element

• Relationships between entities and attributes

• Valid values and data types

• Data access, privacy, and governance constraints

• Data quality indicators and freshness signals

MVD calls for minimum data volume with maximum understanding. Operational data agents enforce this principle by assembling compact, entity-centric, context-rich datasets. Whether this is possible in practice depends on the underlying data architecture.
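One way to picture "minimum data volume with maximum understanding" is a data element that carries its own metadata. The `FieldContext` structure below is a hypothetical sketch that mirrors the bullet list above (meaning, relationships, valid values and types, governance constraints, and freshness); it is not a K2view schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class FieldContext:
    """One data element plus the metadata an agent needs to interpret it."""
    name: str
    value: object
    meaning: str                   # clear meaning of the data element
    data_type: str                 # expected data type
    as_of: datetime                # freshness signal (time of last sync)
    allowed_values: tuple = ()     # valid values; empty means unconstrained
    related_to: tuple = ()         # related entities or attributes
    sensitivity: str = "internal"  # governance constraint label

    def is_valid(self) -> bool:
        return not self.allowed_values or self.value in self.allowed_values

    def is_fresh(self, now: datetime, max_age: timedelta) -> bool:
        return now - self.as_of <= max_age


now = datetime(2026, 2, 12, 12, 0, tzinfo=timezone.utc)
plan = FieldContext(
    name="active_plan",
    value="Fiber 200",
    meaning="Plan the customer is currently billed for",
    data_type="string",
    as_of=now - timedelta(minutes=2),
    allowed_values=("Fiber 200", "Fiber 500", "Fiber 1000"),
    related_to=("customer", "contract"),
)
```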

Unfortunately, traditional approaches struggle to deliver entity-scoped, governed data at runtime.

07

Why traditional data architectures fall short for operational data agents

Many companies attempt to implement operational AI systems using data lakes, APIs, or vector stores. While each plays an important role in modern data platforms, none is designed to consistently deliver MVD at runtime.

Data lakes

Data lakes are well suited for analytical data agents, but they introduce several limitations for operational data agents when evaluated through the lens of MVD:

- Data is often stale due to batch-oriented updates, violating real-time freshness requirements

- Entity information is fragmented across tables and files, requiring complex and compute-intensive runtime reconciliation

- Large volumes of irrelevant data make it difficult and compute-intensive to isolate the minimum dataset required for a specific task

- Semantic meaning and relationships are implicit or lost, forcing agents to infer context rather than receive it explicitly

- Broad access to raw data makes fine-grained data minimization, governance, and compliance enforcement difficult

As a result, operational data agents must scan, join, and filter excessive data at runtime to create their MVD. This introduces latency, drives up cloud compute costs, and makes consistent policy enforcement difficult.

APIs

APIs are essential for system integration, but they are not designed to support MVD-driven access for operational data agents:

- Each new agent task may require a new API. Since future tasks and questions cannot be predicted upfront, this quickly results in API sprawl and operational complexity.

- APIs reflect system boundaries rather than business entities.

- Constructing MVD requires orchestrating multiple API calls across systems.

- Chained calls increase latency, failure modes, and cloud compute costs.

- APIs expose fields without semantic context, relationships, or data quality signals.

As a result, operational data agents are forced to implement custom logic to unify data, enforce governance, and assemble MVD-driven context at runtime, increasing complexity and reducing reliability.
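The effect of chaining calls can be estimated with simple arithmetic. Assuming the calls run sequentially and fail independently (both simplifying assumptions), latencies add up while success rates multiply. The latency and reliability figures below are hypothetical.

```python
import math


def chained_call_profile(calls: list[tuple[float, float]]) -> tuple[float, float]:
    """calls: (latency_ms, success_rate) per sequential API call.

    Returns total latency in ms and the probability that every call succeeds.
    """
    total_latency = sum(latency for latency, _ in calls)
    success = math.prod(rate for _, rate in calls)
    return total_latency, success


# Hypothetical CRM, billing, and payments calls, each 99% reliable.
latency_ms, success_rate = chained_call_profile(
    [(120, 0.99), (200, 0.99), (150, 0.99)]
)
```

With these figures, three calls take 470 ms in total and succeed together only about 97% of the time, even though each call is 99% reliable on its own.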

Vector stores

Vector stores have become a standard way of making unstructured content accessible to AI agents through Retrieval-Augmented Generation (RAG). They’re effective for semantic search over documents, policies, manuals, and other knowledge assets, but aren’t designed to retrieve enterprise operational data. Vector stores are poorly suited for real-time, structured, entity-level data retrieval because they're unable to:

- Retrieve authoritative system-of-record data (they return only semantically relevant text).

- Natively support entities, transactions, or operations.

- Enforce enterprise governance, validation, or consistency.

- Safely support updates or operational actions.

Vector stores may excel at knowledge retrieval, but data agents require access to governed, real-time enterprise data.

The limitations of data lakes, APIs, and vector stores all point to the same conclusion: operational data agents need a different kind of data foundation, one that delivers entity-scoped, governed, real-time data by design rather than forcing agents to assemble it at runtime.

08

Entity-centric data products: The foundation for operational data agents

Operational data agents require a dedicated data access layer that consistently delivers Minimum Viable Data (MVD): task-scoped, entity-specific, governed data in real time. This is the role of entity-centric data products.

Traditional data architectures organize information by applications or databases. An entity-centric data product organizes data around core business entities such as customers, orders, invoices, or devices. This distinction matters because operational data agents reason and assemble context at the level of business entities, and MVD is inherently entity-scoped, not system-scoped.

What is an entity-centric data product?

An entity-centric data product is a self-contained, operational data unit that manages all the data related to a single business entity.

For example, an instance of a customer data product unifies profile information, account status, billing and payment details, service entitlements, recent interactions, and preferences – for a specific customer – regardless of where that data originates. This entity-scoped view ensures that operational data agents can access exactly the data required for a specific task.

Each instance of an entity-centric data product:

- Retrieves data from all relevant source systems for a specific entity

- Syncs changes continually

- Maintains semantic metadata, relationships, and business rules

- Enforces governance, security, and access policies at the entity level

- Exposes a unified view of the entity, in a business context, across multiple access methods (APIs, JDBC, MCP)

By constraining access to a single entity while preserving full semantic context, entity-centric data products provide the precise data scope operational data agents need to assemble task-ready context reliably and efficiently.
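As a structural sketch of this idea, the class below keeps a unified view for exactly one customer and exposes only the sections a caller asks for. It is a simplified illustration, not the K2view product: the class and method names are hypothetical, source systems are stubbed as in-memory dictionaries, and `sync` is an on-demand pull rather than the continual synchronization described above.

```python
class CustomerDataProduct:
    """Holds the unified, entity-scoped view for a single customer."""

    def __init__(self, customer_id: str, sources: dict):
        self.customer_id = customer_id
        self._sources = sources   # section name -> callable(customer_id) -> dict
        self._view = {}

    def sync(self) -> None:
        """Refresh the view from every source system for this one customer."""
        for name, fetch in self._sources.items():
            self._view[name] = fetch(self.customer_id)

    def view(self, sections: list[str]) -> dict:
        """Return only the requested sections of the unified view."""
        return {s: self._view[s] for s in sections if s in self._view}


# Hypothetical source systems, stubbed as in-memory lookups.
CRM = {"C-1001": {"name": "Jane Doe", "status": "active"}}
BILLING = {"C-1001": {"balance_due": 49.0, "last_payment": "2026-01-28"}}

product = CustomerDataProduct(
    "C-1001",
    {"profile": lambda cid: CRM[cid], "billing": lambda cid: BILLING[cid]},
)
product.sync()
billing_context = product.view(["billing"])
```

Because each instance is bound to one `customer_id`, a caller cannot reach another customer's data through it, which is how entity scoping limits exposure by construction.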

Why this matters for operational data agents

Because entity-centric data products deliver MVD by design, operational data agents no longer need to scan, join, filter, or govern data at runtime. Instead, they can focus on reasoning, orchestration, and action, relying on a data foundation that consistently provides compact, governed, real-time entity context.

This is what enables operational data agents to scale from isolated use cases to production-grade systems.

09

Building operational data agents with K2view

K2view provides dedicated tooling to build and deploy both sides of the operational data agent architecture.

Using the K2view Data Agent Builder, teams graphically configure how operational data agents reason about tasks, interpret intent, enforce governance, and assemble task-ready context at runtime. This is where agent logic lives, including data product selection, access decisions, and orchestration across data products and knowledge retrieval.

In parallel, the K2view Data Product Studio is used to design and manage the supporting entity-centric operational data products. Here, data teams define how data is retrieved from source systems, synchronized in real time, governed, and exposed as business-ready entity views.

Together, these two layers allow organizations to build operational data agents that are decoupled from systems, grounded in trusted enterprise data, and designed to deliver Minimum Viable Data consistently at scale.

Integration with agentic frameworks

Data agents operate within an organization’s broader agentic AI framework. This may be implemented using platforms such as Microsoft Copilot Studio, Salesforce Agentforce, AWS Bedrock, or open-source agent frameworks.

To support scale, security, and low latency, data agents are typically deployed close to the entity-centric data products they access through the K2view Data Product Platform.

Within this environment, data agents work alongside other LLM agents that handle user interaction, planning, and decision logic. Data agents provide governed, task-ready data context to the rest of the system. This clear separation of responsibilities allows each agent to specialize, ensuring that AI-driven workflows remain grounded in accurate, current, and policy-compliant enterprise data.

10

Conclusion

Agentic AI represents a fundamental shift in how software operates. Instead of relying on predefined workflows, AI agents can reason, plan, and act autonomously. This shift has the potential to transform business operations, but only when AI agents are grounded in accurate, timely, and governed enterprise data.

Data agents provide the missing connection. They form the control layer that links agentic AI to operational data, ensuring that agents receive the right context at the right time, without unnecessary cost or risk. By combining the Minimum Viable Data principle with entity-centric data products and operational data agents, organizations can move agentic AI from isolated pilots to reliable, production-scale enterprise systems.

11

FAQs about data agents

1. What are the benefits of using data agents in agentic AI?

Data agents centralize data access, governance, and context assembly, allowing AI agents to focus on reasoning and action. By decoupling AI agents from underlying data systems, they improve reliability, reduce latency and cost, and enable agentic AI systems to scale from pilots to production.

2. How are data agents different from traditional middleware or orchestration tools?

Traditional middleware and orchestration tools follow predefined flows and static integrations. Data agents reason dynamically at runtime, determining what data is required for a task, how it should be accessed, and how it must be governed before assembling task-ready context for AI agents.

3. Can data agents be reused across multiple AI agents and use cases?

Yes. Data agents are designed to be reusable and shared. By decoupling data access and governance from individual AI agents, the same data agents can support multiple AI agents and use cases without duplicating logic or integrations.

4. Do data agents replace existing APIs?

Data agents work alongside existing APIs. They invoke APIs, data products, and RAG pipelines as needed, but remove the need to build new APIs for every AI agent task or question.

5. Where do data agents fit in an agentic AI architecture?

Data agents are deployed as first-class components within the same agentic AI framework as other AI agents, but their physical deployment is aligned with the location of the enterprise data they access.

Operational data products are typically deployed close to the systems of record they synchronize with, whether on-premises, in a private cloud, or in a public cloud environment. For performance, security, and compliance reasons, data agents are deployed in the same environment as those data products, rather than being separated by network or trust boundaries.

6. Do data agents require a specific LLM or agent framework?

No. Data agents are LLM- and framework-agnostic. They can integrate with commercial platforms and open-source agent frameworks, allowing organizations to evolve their AI stack without redesigning the data layer.

7. How do data agents help reduce hallucinations in agentic AI systems?

They reduce hallucinations by grounding AI agents in accurate, current, and governed enterprise data. By delivering task-relevant context rather than broad or inferred information, they limit ambiguity and improve response consistency.

8. How are access control and data masking enforced for AI agents?

Access control and data masking are enforced by data products, based on intent supplied by the data agent. Data products apply authorization, privacy, and masking policies at runtime, ensuring only permitted data is returned and in the appropriate form.

Data agents interpret the task, resolve the entity in scope, and pass intent to the relevant data products. The returned, policy-compliant data is then assembled by the data agent into task-ready context for the AI agent.

In short: data agents provide intent and assemble context; data products enforce governance.
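The division of labor in this answer can be sketched as follows: the data agent supplies the role and the requested fields, and the data product decides what is returned and in what form. The policy table, the `enforce` and `mask_value` helpers, and the field names are hypothetical illustrations of field-level allow and mask rules, with anything not listed treated as denied.

```python
# Hypothetical field-level policy: role -> field -> "allow" or "mask".
POLICY = {
    "support_agent": {"name": "allow", "plan": "allow", "card_number": "mask"},
    "analyst": {"plan": "allow"},
}


def mask_value(value: str) -> str:
    """Keep the last four characters and mask the rest."""
    return "*" * max(len(value) - 4, 0) + value[-4:]


def enforce(entity: dict, role: str, requested: list[str]) -> dict:
    """Data-product side: return only permitted fields, masked where required."""
    rules = POLICY.get(role, {})
    result = {}
    for name in requested:
        rule = rules.get(name)   # fields without a rule are denied
        if rule == "allow":
            result[name] = entity[name]
        elif rule == "mask":
            result[name] = mask_value(str(entity[name]))
    return result


entity = {"name": "Jane Doe", "plan": "Fiber 200", "card_number": "4111111111111111"}
support_view = enforce(entity, "support_agent", ["name", "card_number"])
analyst_view = enforce(entity, "analyst", ["name", "plan", "card_number"])
```

Denying by default keeps a newly added field out of every response until a policy explicitly allows or masks it.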

9. How does this architecture scale as the number of AI agents grows?

Because data access, governance, and context assembly are centralized in data agents, new AI agents can be added without creating new integrations. This reuse model allows the architecture to scale efficiently as both AI agents and use cases increase.
