
An MCP server for SQL Server lets AI tools securely access real-time SQL Server data and context, improving AI insights, accuracy, and data governance across systems.

Why MCP and SQL servers matter for AI agents

Many enterprises rely on Microsoft SQL Server to manage and store vast amounts of critical business data, from financial transactions and inventory to employee records and customer interactions. SQL Server often acts as the backbone for operational reporting and analytics across the organization. At the same time, enterprises are embracing AI agents powered by large language models (LLMs) to automate tasks, deliver recommendations, and provide instant, data-driven insights.

However, SQL Server data is just one part of the overall enterprise data landscape. Key information may also exist across other databases, cloud platforms, business applications, and external systems. For AI agents to work effectively, they need access to all relevant, up-to-date data, not just the records within SQL Server. If enterprise data remains siloed, AI agents have an incomplete view, which limits their accuracy and usefulness, and can lead to gaps or errors in responses.

This is where the Model Context Protocol (MCP) enters the picture. MCP is an open, standardized protocol that enables LLMs and AI agents to securely access live, well-governed enterprise data – from SQL Server and beyond – on demand, while maintaining strict privacy controls.

Rather than copying or syncing data, MCP allows AI models to dynamically retrieve exactly the data needed, straight from the original source. This orchestrated, real-time access gives AI agents the most current and relevant information available, while upholding essential privacy and security guardrails to protect sensitive enterprise data.
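To make the on-demand retrieval concrete, here is a minimal sketch of the kind of message an MCP client sends when it asks a server to run a tool. MCP messages follow JSON-RPC 2.0, and tool invocations use the `tools/call` method. The tool name `get_customer_orders` and its arguments are hypothetical, chosen only for illustration.

```javascript
// Build a JSON-RPC 2.0 request for MCP's `tools/call` method.
// The tool name and arguments used below are hypothetical examples.
function buildToolCall(id, toolName, args) {
  return {
    jsonrpc: "2.0",
    id: id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

// Ask a (hypothetical) SQL Server-backed tool for one customer's recent orders.
const request = buildToolCall(1, "get_customer_orders", {
  customerId: "C-1001",
  limit: 5,
});

console.log(JSON.stringify(request, null, 2));
```

The request names only the data the agent needs for the current question, so nothing has to be copied or synced ahead of time.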

By connecting SQL server to AI agents through MCP, organizations can unlock the true value of their data, powering advanced generative AI use cases and enabling smarter, more responsive business decision-making.

MCP SQL server use cases

Many organizations are realizing the value of integrating Microsoft SQL Server data – and data from other business systems – with AI agents using the Model Context Protocol (MCP). By enabling secure, governed, and real-time access to enterprise data across multiple sources, MCP allows AI agents to deliver value in a variety of business scenarios.

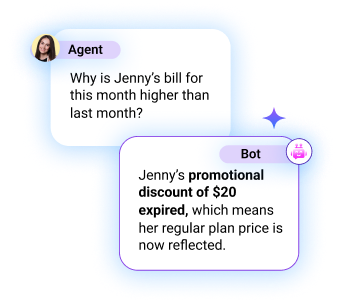

A key use case is customer service. For instance, when customers inquire about order status, billing, or account issues, the necessary information might reside in SQL server (for transaction records or account details) as well as in support, billing, or CRM systems. With MCP, AI agents can quickly and securely pull fresh data from SQL server and other sources, ensuring fast, accurate responses. Privacy and audit features in MCP help protect sensitive customer and business data throughout every interaction.

Analytic and reporting processes are also improved with MCP. Business teams often need real-time insights into operations, sales, inventory levels, or financials. While transactional and historical data might be stored in SQL server, related details can exist in marketing, logistics, or cloud platforms. MCP enables AI agents to unify and surface this information instantly, giving business leaders a holistic and current view, often right within a conversational interface.

Personalizing the AI customer experience and automating workflows are further advantages of connecting SQL Server to AI agents. Imagine an assistant recommending the right offer based on purchase history and current engagement, or automating onboarding by integrating applicant data from SQL Server, HR systems, and electronic forms, all orchestrated securely through MCP.

According to the State of Data for GenAI survey by K2view, only 2% of organizations in the US and UK are truly GenAI-ready, with fragmented enterprise data (like the data in SQL Server) identified as a top hurdle. By solving these data access challenges with open standards like MCP, organizations can unlock the real potential of AI, grounded in unified and trusted data from every core system.

MCP SQL server challenges

Enterprise data is rarely all in one place. While Microsoft SQL Server may store much of an organization’s transactional, financial, or operational information, most businesses have essential data distributed across other databases, cloud services, and business applications. As a result, an AI agent – acting as an MCP client – often needs to communicate with several MCP servers, each connected to a different data source, which introduces several challenges:

Security and privacy

When AI agents access sensitive business data from SQL server and other sources, ensuring security and privacy becomes a critical concern. Connecting an MCP client to multiple MCP servers means organizations must set up LLM guardrails, data governance, access controls, and audit logs for each MCP server separately to maintain compliance and protect sensitive information.
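As a rough sketch of what per-server access control and audit logging involve, the example below checks a role against a policy table and records every decision. The server names, tool names, and roles are all hypothetical, and this is not a description of any particular product's implementation.

```javascript
// Hypothetical policy: which roles may call which tools on which MCP server.
const policy = {
  "sqlserver-mcp": { get_orders: ["support", "finance"], get_salaries: ["hr"] },
  "crm-mcp": { get_contacts: ["support", "sales"] },
};

// In-memory audit trail; a real deployment would write to durable storage.
const auditLog = [];

// Returns true if the role may call the tool, and records the decision either way.
function authorize(server, tool, role) {
  const allowedRoles = (policy[server] && policy[server][tool]) || [];
  const allowed = allowedRoles.includes(role);
  auditLog.push({ time: new Date().toISOString(), server, tool, role, allowed });
  return allowed;
}

const canReadOrders = authorize("sqlserver-mcp", "get_orders", "support");
const canReadSalaries = authorize("sqlserver-mcp", "get_salaries", "support");
console.log(canReadOrders, canReadSalaries, auditLog.length);
```

With several MCP servers, each one needs its own equivalent of this policy and log, which is the duplication the paragraph above describes.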

Fresh data in real time

Speed is crucial for conversational AI. Outdated data can lead to missed opportunities or poor recommendations. One of the main challenges is enabling each MCP server to deliver rapid, real-time data from SQL Server and other platforms, rather than relying on stale records from a data warehouse. To keep responses relevant, MCP servers must quickly process and supply current information from all sources.

Data integration

For a complete customer or business entity view, data must be unified from multiple SQL Server environments and additional systems like support, HR, or finance, often with each one behind its own MCP server. This approach leaves the AI agent responsible for the complex work of harmonizing and integrating all this information.

Solving this challenge demands a centralized data catalog with rich metadata, solid master data management (MDM) for golden records, and semantic layers to align data across the environment.

End-to-end AI automation also relies on:

- Metadata enrichment and semantic layers

- Entity resolution (MDM)

- Tooling descriptions and ontologies

- Aggregator layers to combine responses from multiple systems

- Few-shot learning and chain-of-thought reasoning to manage data complexities
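To illustrate the aggregator and entity-resolution items in the list above, the sketch below combines records for one customer from three sources that each use a different key name and ID format. All data, field names, and the simple ID normalization are assumptions made for the example; real master data management uses far richer matching than trimming and upper-casing an ID.

```javascript
// Hypothetical responses from three separate sources, each with its own key name.
const sqlServerRows = [{ customer_id: "C-1001", plan: "Premium", balance: 42.5 }];
const crmRecords = [{ CustomerId: "c-1001 ", email: "ana@example.com" }];
const supportTickets = [
  { custId: "C-1001", ticket: "T-1", status: "open" },
  { custId: "C-2002", ticket: "T-2", status: "closed" },
];

// Normalize IDs so the same customer matches across systems (a naive stand-in for MDM).
const normalizeId = (id) => String(id).trim().toUpperCase();

// Combine the per-source records into a single view of one customer.
function buildCustomerView(customerId) {
  const id = normalizeId(customerId);
  const account = sqlServerRows.find((r) => normalizeId(r.customer_id) === id) || {};
  const contact = crmRecords.find((r) => normalizeId(r.CustomerId) === id) || {};
  const tickets = supportTickets.filter((t) => normalizeId(t.custId) === id);
  return {
    customerId: id,
    plan: account.plan ?? null,
    balance: account.balance ?? null,
    email: contact.email ?? null,
    openTickets: tickets.filter((t) => t.status === "open").map((t) => t.ticket),
  };
}

const view = buildCustomerView("c-1001");
console.log(view);
```

Without a shared layer that does this work, each AI agent has to repeat the same matching and merging logic itself.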

Accurate answers

The lack of unified, up-to-date data can lead to LLM hallucinations. AI agents need a standardized, trustworthy way to access data from multiple sources, and protocols like MCP are key to achieving this.

Addressing these challenges requires advanced generative AI capabilities such as chain-of-thought prompting, retrieval-augmented generation (RAG), and table-augmented generation (TAG).

Equally important are robust metadata management, strong data governance, and real-time integration of information. However, implementing these solutions also introduces increased complexity, more potential points of failure, and higher risk.

The latest K2view survey found that fragmented and inaccessible data remains a leading obstacle for organizations adopting GenAI. Overcoming these barriers is crucial for AI agents to consistently provide reliable, secure, and value-driven answers, grounded in real enterprise information.

Accessing SQL Server data for MCP with K2view

K2view GenAI Data Fusion streamlines the process of implementing MCP for Microsoft SQL Server, providing a scalable and robust way to deliver multi-source, SQL Server-centric data to MCP clients.

Our patented semantic data layer makes all your enterprise data, from Microsoft SQL Server, as well as other databases and business systems, instantly and securely available to GenAI applications. With K2view, you can expose both structured and unstructured data through a single MCP server, grounding your GenAI apps in current, unified information and enabling them to deliver precise, personalized responses.

At the core of our solution is the K2view Data Product Platform, which operates as a high-performance, entity-based MCP server. This platform is built for real-time delivery of multi-source enterprise data to MCP clients, ensuring your AI tools always have access to the most reliable and up-to-date information.

If your business information spans SQL server, other databases, and diverse operational or analytical systems, K2view acts as the unified MCP server, seamlessly connecting and virtualizing data across silos to provide fast, secure, and governed access for AI agents and LLMs.

K2view makes MCP enterprise-ready by:

- Unifying fragmented data, including key SQL server records, directly from all core systems and making it instantly available for immediate AI use

- Enforcing granular privacy and compliance controls, so sensitive SQL server and non-SQL server data remains protected and accessible only to authorized users

- Delivering real-time data to AI agents and LLMs, with built-in data virtualization and transformation for consistency and business context

- Supporting both on-premises and cloud deployments, enabling secure AI usage across your entire data landscape
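As a generic illustration of field-level privacy controls (not K2view's actual mechanism), the sketch below masks sensitive columns unless the caller's role is explicitly allowed to see them. The rule table, roles, and sample row are hypothetical.

```javascript
// Hypothetical field-level rules: which roles may see each sensitive column unmasked.
const fieldRules = {
  ssn: ["compliance"],
  email: ["support", "compliance"],
};

// Return a copy of the row with restricted fields masked for the given role.
// Fields without a rule are treated as non-sensitive and passed through.
function maskRow(row, role) {
  const masked = {};
  for (const [field, value] of Object.entries(row)) {
    const allowedRoles = fieldRules[field];
    const visible = !allowedRoles || allowedRoles.includes(role);
    masked[field] = visible ? value : "***";
  }
  return masked;
}

const row = { customer_id: "C-1001", email: "ana@example.com", ssn: "123-45-6789" };
console.log(maskRow(row, "support"));
```

Applying the mask before data reaches the LLM means restricted values never enter the prompt in the first place.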

See how MCP connects AI chatbots with SQL Server and enterprise data

MCP Data Integration Product Tour

Take a 15-minute product tour to watch MCP in action. See how an AI chatbot is grounded with live SQL Server and enterprise data, from text-to-SQL queries to context-aware reasoning to secure data fusion, all in real time.

Orchestrating the MCP workflow with K2view

Here's a step-by-step breakdown of the K2view MCP orchestrator workflow:

- Get the schema for the 'Customer' entity

- Use LLM to translate user query + schema into SQL

- Run the SQL query in the customer's micro-DB context

- Use LLM to convert the SQL result to a user-facing answer

- Return the final answer

The code might look something like this:

```
orchestrator handleChatbotQuery(input) {
  var customerId = input.customerId;
  var userText = input.userText;
  var entityName = "Customer"; // Micro-DB context

  // Step 1: Get the schema for the 'Customer' entity
  var schemaResponse = callDataService("k2view/getEntitySchema", { entity: entityName });
  var entitySchema = schemaResponse.schema;

  // Step 2: Use LLM to translate user query + schema into SQL
  var prompt = `
You are a data assistant working with the 'Customer' micro-DB.
Given this schema and the user's question, generate a SQL query that retrieves the relevant data.
Limit all queries to: customer_id = '${customerId}'

User question: "${userText}"
Schema: ${entitySchema}
`;
  var llmResponse = callExternal("llm/generateSQL", { prompt: prompt });
  var sql = llmResponse.generatedSQL;

  // Step 3: Run the SQL query in the customer's micro-DB context
  var queryResult = execSQL(entityName, customerId, sql);

  // Step 4: Use LLM to convert the SQL result to a user-facing answer
  var explanationPrompt = `
You are a helpful assistant in a telecom chatbot.
The user asked: "${userText}"
The SQL used: ${sql}
The data returned: ${JSON.stringify(queryResult)}

Craft a concise and clear response that explains this result to the user in natural language.
Do not mention SQL or database terms.
`;
  var explanationResponse = callExternal("llm/explainResult", { prompt: explanationPrompt });

  // Step 5: Return the final answer
  return {
    explanation: explanationResponse.text // This is what the chatbot will show
  };
}
```

SQL server teams benefit from K2view MCP orchestration in the following ways:

- No hardcoded logic: Queries are generated dynamically using LLMs and live schema context.

- Real-time personalization: The answers reflect the actual plan details stored in SQL Server for that customer.

- Built-in upsell logic: LLMs can guide the user to upgrade options without needing a predefined flow.

- MCP-compliant structure: Responses are auditable, traceable, and safely scoped to each customer.
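Because the SQL in this workflow is generated by an LLM, keeping responses scoped to each customer usually means checking the query before it runs. The sketch below is one naive way to do that, assuming the `customer_id` column from the prompt shown earlier. The function name `isSafeCustomerQuery` is hypothetical, and a production check would rely on a real SQL parser and database-level permissions rather than regular expressions.

```javascript
// Naive guard for LLM-generated SQL: accept only a single read-only SELECT
// whose customer_id filters all match the expected customer.
// A sketch only; it does not catch every way a query can escape its scope.
function isSafeCustomerQuery(sql, customerId) {
  const text = sql.trim().replace(/;\s*$/, ""); // allow one trailing semicolon
  if (text.includes(";")) return false; // reject stacked statements
  if (!/^select\b/i.test(text)) return false; // read-only queries only
  if (/\b(insert|update|delete|drop|alter|truncate|exec|merge)\b/i.test(text)) return false;

  // Collect every customer_id = '...' literal and require all to match exactly.
  const ids = [...text.matchAll(/\bcustomer_id\s*=\s*'([^']*)'/gi)].map((m) => m[1]);
  return ids.length > 0 && ids.every((id) => id === customerId);
}

console.log(isSafeCustomerQuery("SELECT plan_name FROM plans WHERE customer_id = 'C-1001'", "C-1001"));
console.log(isSafeCustomerQuery("SELECT * FROM plans WHERE customer_id = 'C-2002'", "C-1001"));
```

In the workflow above, a check like this would sit between step 2 (generating the SQL) and step 3 (running it), with rejected queries logged for audit.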

K2view makes it possible to turn your enterprise SQL server records into intelligent, conversational AI answers — allowing AI agents to respond naturally and accurately with governed, real-time data.

Integrating MCP with SQL Server via K2view

K2view solves the challenges of multi-server MCP deployments by unifying SQL Server data with Salesforce, SAP, Workday, and other enterprise sources into a single governed data product – delivered through one MCP server. Rather than forcing AI agents to reconcile fragmented records and inconsistent governance across silos, K2view MCP data integration ensures secure, real-time access to harmonized information that is accurate, compliant, and always up to date. Its patented semantic data layer enriches both structured SQL Server tables and unstructured enterprise data, applies strict privacy and compliance controls, and delivers responses at conversational latency.

With K2view MCP integration, organizations eliminate the inefficiencies and risks of running multiple MCP servers while simplifying MCP client development and protecting sensitive SQL Server and non-SQL Server data. By exposing unified, entity-based data through one MCP server, K2view empowers AI agents to deliver precise, context-aware insights grounded in real enterprise information. The result is a scalable, enterprise-ready MCP implementation that accelerates GenAI adoption, drives business value faster, and enables companies to innovate with confidence.

With K2view MCP data integration, disconnected data becomes clear, consistent, and LLM-ready.