
For operational use, LLMs must be fed governed enterprise data in real time. Combining a trusted semantic data layer with the Model Context Protocol (MCP) is the right combo.

AI data layer + MCP combo brings new life to LLMs

In the era of GenAI apps powered by LLMs, the combination of an AI data layer and MCP is emerging as a critical AI design pattern.

While powerful LLMs – like Gemini, GPT-4o, or Claude – can reason, remember, and converse, they’re largely limited to the generic public information they were trained on, and frozen in time as of the date their training ended.

The only way to ground them with information about your specific business (your customers and business transactions, for example) is to safely integrate live enterprise data into the prompting process.

This article discusses the value of an AI data layer coupled with MCP, and why it is the ideal solution for enabling contextual, grounded, and real-time operational workloads, such as generative AI use cases in customer service.

Prompt engineering challenges

Prompt engineering for large language models (LLMs) is a sophisticated art and science that moves beyond simple queries to create powerful, tailored AI applications. While it may seem like a complex task, this approach to crafting prompts is essential for unlocking the full potential of LLMs. By providing clear, well-structured instructions, users can guide the model to produce personalized and highly accurate real-time responses, making it possible to create dynamic, context-aware user experiences that were previously out of reach.

Ultimately, the true value of prompt engineering lies in its ability to ground the model and reduce "hallucinations", where the AI fabricates information. Grounding steers the LLM's outputs toward specific, provided data rather than its general, pre-trained knowledge. A key benefit is that it enables domain-specific behavior without expensive and time-consuming model retraining. Developers and businesses can therefore quickly adapt off-the-shelf LLMs to specialized tasks, from legal document analysis to medical diagnosis assistance, by engineering the right prompt.

Before you can prompt at scale, however, you first need to address four key challenges:

- Knowing what context is needed
- Deciding which data sources to get the context from
- Retrieving and harmonizing the data
- Formulating the context efficiently, safely, and cleanly

…and all this at the speed of conversational AI.

Fortunately, two constructs have evolved that enable us to overcome these prompt engineering challenges.

Model Context Protocol to the rescue

The first construct that enables prompt engineering at scale is the Model Context Protocol (MCP), a standard way for AI client applications (MCP clients) to connect with external applications, tools, and resources through an MCP server. MCP enables us to systematically:

- Understand user intent
- Identify what context the model needs
- Retrieve the needed enterprise data
- Assemble context based on policy constraints
- Inject context into the response sent to the GenAI client

How an AI-ready data layer complements MCP

In most enterprises, the data needed to fulfill a user query is scattered across dozens of systems with no unified API, schema, or key resolution strategy.

Instead of the MCP server trying to federate across those systems, it makes a single call to the AI data layer, like:

GET /data-products/customer?id=ABC123
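As a rough illustration, that single call could be sketched in Python as follows. The base URL, the helper names, and the sample record are assumptions made for this example only; they are not a documented K2view API, and the data layer is replaced by an in-memory stub so the sketch runs without a network.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical base URL for the AI data layer (not a real endpoint).
DATA_LAYER_BASE = "https://data-layer.example.com"

# In-memory stand-in for the data layer, keyed by (data product, entity id).
_STUB_STORE = {
    ("customer", "ABC123"): {"id": "ABC123", "name": "Dana Lee", "status": "active"},
}


def build_data_product_url(product: str, entity_id: str) -> str:
    """Build the one GET URL the MCP server would call for an entity."""
    query = urlencode({"id": entity_id})
    return f"{DATA_LAYER_BASE}/data-products/{product}?{query}"


def fetch_data_product(url: str) -> dict:
    """Resolve the URL against the stub instead of making a network call."""
    parsed = urlparse(url)
    product = parsed.path.rsplit("/", 1)[-1]
    entity_id = parse_qs(parsed.query)["id"][0]
    return _STUB_STORE[(product, entity_id)]


url = build_data_product_url("customer", "ABC123")
customer = fetch_data_product(url)
```

The point of the sketch is that the MCP server only needs to know the data product name and the entity ID; which underlying systems hold the data stays hidden behind the data layer.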

AI data layer based on business entities

When the AI data layer is based on business entities (such as customers, loans, orders, or devices):

- Every field in the data product is mapped to the appropriate underlying system.
- Transformation logic is applied (status normalization, date formats).
- All the relevant data for each instance (a single customer, for example) is secured, managed, and stored in its own Micro-Database™.
- Real-time access is supported via API, and updates can be continuous or on-demand.
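The field mapping and transformation steps in the list above can be sketched in a few lines of Python. The source systems, field names, status codes, and date format below are all hypothetical and chosen only to make the idea concrete; a production data layer would also handle security, storage, and updates, which this sketch leaves out.

```python
from datetime import datetime

# Hypothetical raw records from two source systems (illustrative only).
crm_record = {"cust_id": "ABC123", "full_name": "Dana Lee", "state": "ACT"}
billing_record = {"customer": "ABC123", "last_invoice": "03/15/2024"}

# Each data product field maps to (source system, source field).
FIELD_MAP = {
    "id": ("crm", "cust_id"),
    "name": ("crm", "full_name"),
    "status": ("crm", "state"),
    "last_invoice_date": ("billing", "last_invoice"),
}

# Assumed source status codes and their normalized values.
STATUS_CODES = {"ACT": "active", "SUS": "suspended", "CLS": "closed"}


def normalize_status(value: str) -> str:
    """Map a source-specific status code to a shared vocabulary."""
    return STATUS_CODES.get(value, "unknown")


def normalize_date(value: str) -> str:
    """Convert an assumed MM/DD/YYYY source date to ISO 8601."""
    return datetime.strptime(value, "%m/%d/%Y").date().isoformat()


# Transformations applied per data product field (others pass through).
TRANSFORMS = {"status": normalize_status, "last_invoice_date": normalize_date}


def build_entity(sources: dict) -> dict:
    """Assemble one harmonized entity from per-system records."""
    entity = {}
    for field, (system, source_field) in FIELD_MAP.items():
        raw = sources[system][source_field]
        transform = TRANSFORMS.get(field)
        entity[field] = transform(raw) if transform else raw
    return entity


customer = build_entity({"crm": crm_record, "billing": billing_record})
```

Keeping the mapping and the transformations as data (two dictionaries) rather than as scattered code is one possible design choice; it makes the harmonization rules easy to review and audit.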

End-to-end workflow

Here’s how the full stack works:

[User query] > [MCP server] > [AI data layer] > [MCP prompt] > [LLM] > [Response]
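To make the flow above concrete, here is a minimal Python sketch with every stage stubbed out. All function names, the keyword-based intent rule, the sample record, and the placeholder LLM call are assumptions for illustration; a real deployment would replace each stub with the actual MCP server logic, data layer call, and model client.

```python
def detect_intent(query: str) -> str:
    """Toy keyword rule standing in for real intent detection."""
    return "billing" if "invoice" in query.lower() else "general"


def fetch_context(customer_id: str, intent: str) -> dict:
    """Stub for the single call to the AI data layer."""
    record = {
        "id": customer_id,
        "name": "Dana Lee",
        "plan": "Premium",
        "last_invoice_date": "2024-03-15",
    }
    # Only the fields relevant to the detected intent are returned.
    wanted = {
        "billing": ["name", "last_invoice_date"],
        "general": ["name", "plan"],
    }[intent]
    return {key: record[key] for key in wanted}


def build_prompt(query: str, context: dict) -> str:
    """Combine the retrieved context and the user query into one prompt."""
    lines = [f"{key}: {value}" for key, value in context.items()]
    return "Context:\n" + "\n".join(lines) + f"\n\nQuestion: {query}"


def call_llm(prompt: str) -> str:
    """Placeholder that reports prompt size instead of calling a model."""
    return f"[stub LLM response to a {len(prompt)}-character prompt]"


def handle(query: str, customer_id: str) -> str:
    """User query > MCP server > AI data layer > prompt > LLM > response."""
    intent = detect_intent(query)
    context = fetch_context(customer_id, intent)
    prompt = build_prompt(query, context)
    return call_llm(prompt)


question = "When was my last invoice?"
prompt = build_prompt(question, fetch_context("ABC123", detect_intent(question)))
response = handle(question, "ABC123")
```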

MCP server limitations

A common misconception is that the MCP server uses an LLM to construct prompts. It doesn’t – and shouldn’t. AI prompt engineering is handled by deterministic logic, not generative models.
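One way to picture deterministic prompt construction is a fixed template plus a policy that decides which fields are included and which are masked. The sketch below is an assumption-laden example, not the K2view implementation: the policy format, the masking rule, and the template text are all hypothetical.

```python
import json

# Hypothetical policy: fields allowed in the prompt, and fields to mask.
POLICY = {"allowed": ["name", "status", "email"], "masked": ["email"]}

# Fixed template: no generative model is involved in building the prompt.
TEMPLATE = (
    "You are a support assistant. Use only this context:\n"
    "{context}\n\n"
    "User question: {question}"
)


def mask(value: str) -> str:
    """Keep the first character and replace the rest with asterisks."""
    return value[:1] + "*" * (len(value) - 1)


def assemble_context(record: dict, policy: dict) -> dict:
    """Keep only allowed fields, in a fixed order, masking where required."""
    context = {}
    for field in sorted(policy["allowed"]):
        if field in record:
            value = record[field]
            context[field] = mask(value) if field in policy["masked"] else value
    return context


def build_prompt(record: dict, question: str, policy: dict = POLICY) -> str:
    """Same record, question, and policy always yield the same prompt."""
    context = json.dumps(assemble_context(record, policy), sort_keys=True)
    return TEMPLATE.format(context=context, question=question)


record = {
    "name": "Dana Lee",
    "email": "dana@example.com",
    "ssn": "123-45-6789",
    "status": "active",
}
first = build_prompt(record, "What is my account status?")
second = build_prompt(record, "What is my account status?")
```

Because the output depends only on the inputs and the policy, the prompt can be tested, audited, and reproduced, which is much harder when a generative model writes the prompt.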

Why MCP has become the de facto protocol for GenAI

A Practical Guide

Model Context Protocol (MCP) is a standard for connecting LLMs to enterprise data sources in real time, to ensure compliant and complete GenAI responses.

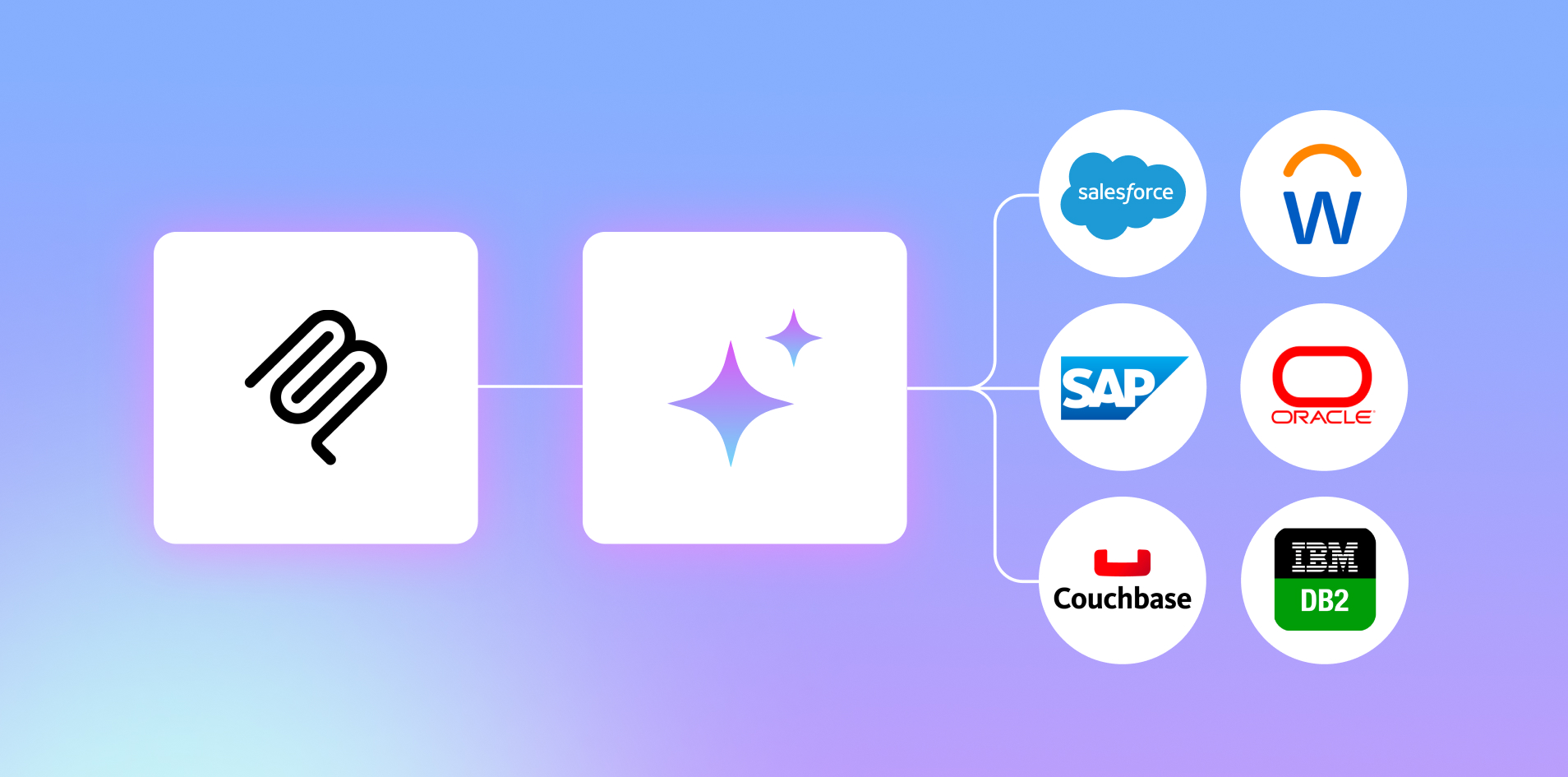

Powering MCP with an AI data layer from K2view

Operational GenAI requires more than protocol connectivity – it depends on real-time, trusted business context delivered at conversational speed. K2view provides both the AI data layer and the MCP server in a single platform, unifying multi-source enterprise data into governed, entity-centric data products – and exposing them through one MCP server. Its patented semantic data layer enriches structured and unstructured sources, applies transformations and normalization, enforces granular governance controls, and ensures every response is delivered with conversational latency. This combination removes the complexity of fragmented integrations and makes MCP enterprise-ready for production-scale AI workloads.

With K2view MCP integration, organizations gain a consistent and secure way to feed GenAI models with complete, harmonized business context. Instead of relying on brittle prompt engineering or isolated system queries, AI agents can draw on a unified, real-time view of customers, products, accounts, and transactions across the enterprise. The result is cleaner prompts, more accurate outputs, and scalable operational use of LLMs – all grounded in governed enterprise data.

Here's what you get when K2view does your MCP data integration:

- One MCP endpoint, many systems: All relevant data sources are harmonized and exposed as a single, governed interface.
- Real-time, entity-level context: Data virtualization and transformation deliver low-latency, AI-ready data.
- Built-in governance: Control your data integration with fine-grained access controls, masking/tokenization, lineage, and full auditability by design.
- Semantic layer and MDM: Identity resolution and schema mapping produce golden records for every entity.
- Deterministic orchestration: Policy-aware context packaging keeps prompts clean and predictable.
- Deploy anywhere: Implement on premises or in the cloud, with the same control plane and SLAs.

Before you integrate your enterprise data with MCP, make sure it's trusted, unified, and compliant – with K2view.

Make your LLMs smarter by feeding them harmonized, trusted data with K2view MCP data integration.