Synthetic data generation lifecycle management is the process of creating, validating, and maintaining synthetic data that's lifelike yet protects privacy.

Thinking about Synthetic Data Holistically

Synthetic data has the market buzzing about its business potential. From leveraging generative AI for software testing to powering Machine Learning (ML) model training, the possibilities are vast.

Yet, the key lies not just in generating data but in embracing a holistic, data product approach to the entire synthetic data generation lifecycle – from thorough data preparation to ongoing synthetic data operations.

A data product approach helps ensure the quality and diversity of the generated data, as well as the effective management and use of a synthetic dataset.

Recent IDC research emphasizes the need for synthetic data solutions to manage the entire journey – from source to target. A data product engineered to span data integration, preparation, generation, validation, post-processing, and delivery provides a comprehensive, reusable, and scalable solution for provisioning synthetic data.


Data Integration and Preparation

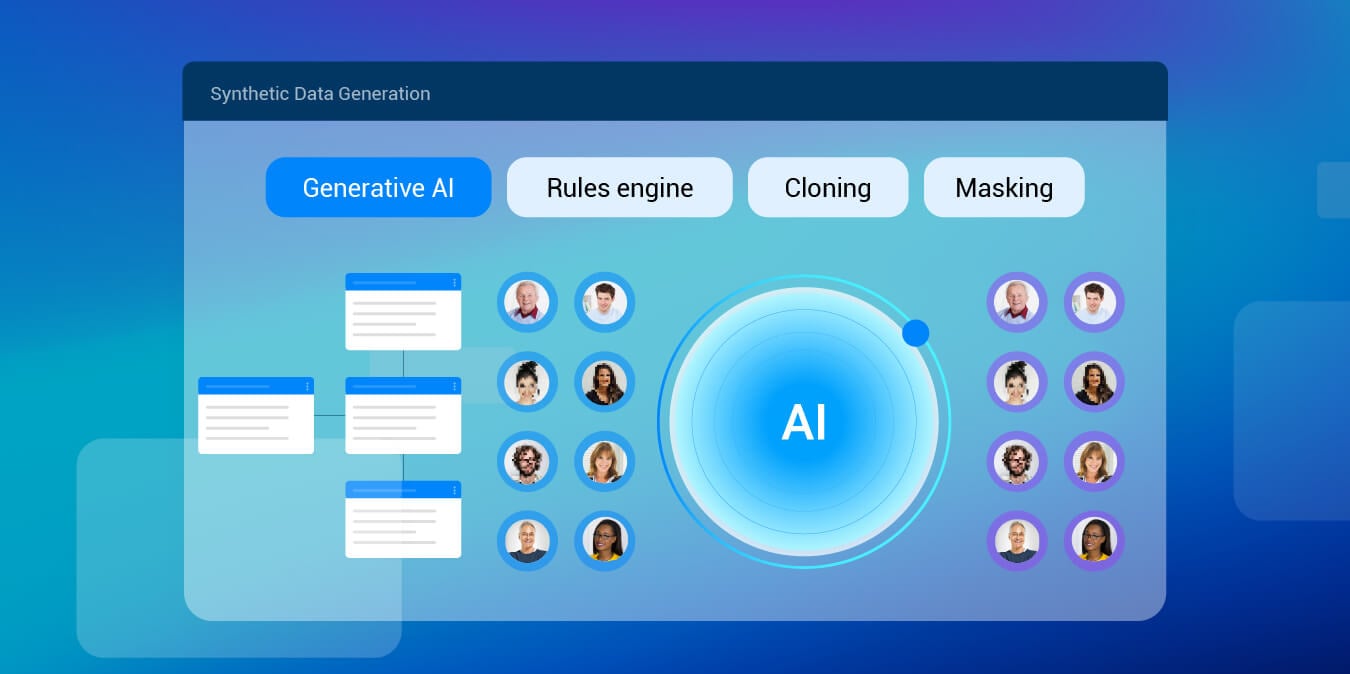

Let’s take, for example, a generative AI synthetic data model.

The main steps are extracting, masking, and subsetting multi-source production data to train the synthetic data generation ML models, and subsequently to generate new data.

Data extraction requires integrating with a company’s database technologies and applications, and the extracted data must be masked to safeguard sensitive information, such as personally identifiable information (PII), and preserve privacy.

The next step is to subset the masked data so that the specific features, distributions, and relationships present in the production dataset are captured for training the synthetic data generation ML models.
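As a rough illustration of the masking and subsetting steps, here is a minimal Python sketch. It assumes records arrive as plain dictionaries; the field names, the `PII_FIELDS` set, the salt, and both helper functions are hypothetical. Salted hashing is used here only as a simple stand-in for masking (it is pseudonymization, not a full privacy guarantee), and stratified sampling is one of several ways to keep group proportions intact.

```python
import hashlib
import random
from collections import defaultdict

PII_FIELDS = {"name", "email"}  # hypothetical list of sensitive fields


def mask_record(record, salt="demo-salt"):
    """Replace PII values with a salted SHA-256 token; keep other fields as-is."""
    masked = {}
    for key, value in record.items():
        if key in PII_FIELDS:
            digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
            masked[key] = digest[:12]  # same input always yields the same token
        else:
            masked[key] = value
    return masked


def stratified_subset(records, key, fraction, seed=0):
    """Sample about `fraction` of each group so group proportions are preserved."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for rec in records:
        groups[rec[key]].append(rec)
    subset = []
    for members in groups.values():
        k = max(1, round(len(members) * fraction))
        subset.extend(rng.sample(members, k))
    return subset


# Toy "production" data: 30 retail and 10 business customers.
production = [
    {"name": f"Customer {i}", "email": f"c{i}@example.com",
     "segment": "retail" if i % 4 else "business", "balance": 100 * i}
    for i in range(1, 41)
]

masked = [mask_record(r) for r in production]
training_subset = stratified_subset(masked, key="segment", fraction=0.2)
```

Because the token is deterministic for a given salt, the same customer masked in two source systems would still join correctly, which matters when the data is extracted from multiple sources.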

The ML models can then be trained on these prepared subsets, allowing them to learn underlying patterns, correlations, and statistical distributions from the original data. After generation, validation and post-generation transformation are required to ensure the synthetic data is fit for use.
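One simple way to picture the validation step is to compare how a categorical column is distributed in the source data versus the generated data. The sketch below uses total variation distance for that comparison; the function names, the sample values, and the 0.1 threshold are assumptions made for illustration, and a real validation suite would typically check many more properties (correlations, referential integrity, privacy leakage).

```python
from collections import Counter


def category_shares(values):
    """Return the share of each category in a list of values."""
    counts = Counter(values)
    total = len(values)
    return {k: c / total for k, c in counts.items()}


def total_variation_distance(real_values, synthetic_values):
    """Half the sum of absolute differences between category shares (0 = identical)."""
    p = category_shares(real_values)
    q = category_shares(synthetic_values)
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def is_fit_for_use(real_values, synthetic_values, threshold=0.1):
    """Hypothetical acceptance rule: distributions must be within `threshold`."""
    return total_variation_distance(real_values, synthetic_values) <= threshold


real = ["retail"] * 75 + ["business"] * 25
close_match = ["retail"] * 70 + ["business"] * 30
poor_match = ["retail"] * 20 + ["business"] * 80

print(is_fit_for_use(real, close_match))  # distance is about 0.05
print(is_fit_for_use(real, poor_match))   # distance is about 0.55
```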

Synthetic Data Operations

Efficiently delivering the generated data into target systems and data stores requires careful data orchestration and integration. Data transformations may be necessary to align the data with specific formats and structures in the target systems.
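To make the idea of aligning data with a target system concrete, here is a small Python sketch of a delivery-time transformation. The `FIELD_MAP`, the column names, and the choice to store balances as integer cents are all hypothetical; the point is only that generated rows are often renamed and retyped before loading.

```python
from datetime import date

# Hypothetical mapping from generator field names to target column names.
FIELD_MAP = {
    "cust_id": "customer_id",
    "opened": "open_date",
    "balance": "balance_cents",
}


def to_target_row(synthetic_row):
    """Rename fields and convert types to match the assumed target schema."""
    row = {dst: synthetic_row[src] for src, dst in FIELD_MAP.items()}
    row["open_date"] = date.fromisoformat(row["open_date"])   # ISO string -> date
    row["balance_cents"] = round(row["balance_cents"] * 100)  # currency units -> cents
    return row


example = to_target_row({"cust_id": 7, "opened": "2024-03-01", "balance": 12.5})
```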

Depending on the use case, mechanisms may need to be established to reserve a subset of the synthetic data to prevent others from overriding it. Data aging might also be needed to simulate the evolution of real-world datasets over time. Additionally, data rollback mechanisms are essential to revert changes made to the generated data in case of errors or the need to return to a previous state.
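The three operations described here (reservation, aging, and rollback) can be sketched with a small in-memory class. This is an illustrative toy, not how any particular product implements them: the `SyntheticDataset` class and its methods are hypothetical, aging is reduced to shifting date fields forward, and rollback is a simple snapshot restore.

```python
import copy
from datetime import date, timedelta


class SyntheticDataset:
    """Toy in-memory dataset supporting reservation, aging, and rollback."""

    def __init__(self, rows):
        self.rows = rows          # row id -> record (dict)
        self.reservations = {}    # row id -> owner
        self._snapshots = []      # stack of saved states

    def snapshot(self):
        """Save the current state so it can be restored later."""
        self._snapshots.append(copy.deepcopy(self.rows))

    def rollback(self):
        """Restore the most recent snapshot."""
        if not self._snapshots:
            raise RuntimeError("no snapshot to roll back to")
        self.rows = self._snapshots.pop()

    def reserve(self, row_ids, owner):
        """Reserve rows for one owner; fail if someone else holds any of them."""
        conflicts = [r for r in row_ids
                     if self.reservations.get(r, owner) != owner]
        if conflicts:
            raise PermissionError(f"rows already reserved: {conflicts}")
        for r in row_ids:
            self.reservations[r] = owner

    def update(self, row_id, owner, **changes):
        """Apply changes unless the row is reserved by a different owner."""
        holder = self.reservations.get(row_id)
        if holder is not None and holder != owner:
            raise PermissionError(f"row {row_id} is reserved by {holder}")
        self.rows[row_id].update(changes)

    def age(self, days):
        """Shift every date field forward by `days` to simulate elapsed time."""
        shift = timedelta(days=days)
        for record in self.rows.values():
            for key, value in record.items():
                if isinstance(value, date):
                    record[key] = value + shift


ds = SyntheticDataset({
    1: {"status": "open", "due": date(2024, 1, 31)},
    2: {"status": "open", "due": date(2024, 2, 15)},
})
ds.reserve([1], owner="team-a")
ds.snapshot()
ds.age(days=30)
ds.update(1, owner="team-a", status="closed")
aged_due = ds.rows[1]["due"]   # due date after aging, before rollback
ds.rollback()                  # back to the state captured by snapshot()
```

In this sketch, an update to row 1 by any owner other than `team-a` raises `PermissionError`, which is the behavior the reservation mechanism is meant to provide.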

End-to-End Synthetic Data Management

Most synthetic data companies focus on generating the data, leaving the complex phases of data preparation, provisioning, and ongoing operations for their clients to figure out through data engineering efforts – which oftentimes create delays, effort overruns, and inaccurate data. For a successful deployment of synthetic data, robust synthetic data generation tools are essential, but addressing the intricate steps of preparing and managing data in operational environments is equally important.
