Learn why software developers prefer smaller, more representative subsets of data for testing, validating, and preparing applications for production use.

Understanding Test Data Management

Test data management helps ensure that software applications are thoroughly tested before going live. One of the most important test data management techniques is data subsetting which, as the name suggests, extracts smaller, targeted datasets from large ones. By preparing and delivering only the information testers need, subsetting keeps test data relevant to the scenarios being tested.

But before diving into data subsetting, let’s better understand the role test data management plays in the Software Development Life Cycle (SDLC). Test data management refers to the planning, design, creation, and management of the data required for testing software applications. Its primary purpose is to make sure that the testing process is effective in locating and correcting potential faults in the software before or after it’s released.

However, test data management has its own challenges, such as:

- Managing massive amounts of data

- Complying with data privacy regulations

- Provisioning test data quickly and at scale

Data subsetting helps address these issues more effectively.

What is Data Subsetting?

Data subsetting is a test data management technique that involves creating smaller, more representative subsets of real-world data for use in testing environments. Subsetting allows enterprises to work with a precise dataset that retains the same patterns and characteristics as the real data while complying with data privacy laws, reducing storage costs, and speeding up the testing process. With data subsetting, enterprises benefit from a more agile and affordable testing process.

Unlike data masking, where fictional data is substituted for Personally Identifiable Information (PII), or synthetic data generation, where new sets of artificial data are created, data subsetting selects specific portions of production data while maintaining the relationships between the different data elements.

For example, a retailer may be interested in testing an existing app for new VIP functionality. The required data for such a scenario might be “gold” customers, living in a particular region, who spend more than $1,000 per month on average.
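The VIP scenario above can be sketched as a simple filter. This is a minimal illustration in Python, not a production tool: the `Customer` fields, the `select_vip_subset` function, the region names, and the sample rows are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: int
    tier: str                 # e.g. "gold", "silver" (hypothetical values)
    region: str
    avg_monthly_spend: float  # in US dollars


def select_vip_subset(customers, region, min_spend=1000.0):
    """Keep "gold" customers in `region` whose average monthly spend exceeds `min_spend`."""
    return [
        c for c in customers
        if c.tier == "gold" and c.region == region and c.avg_monthly_spend > min_spend
    ]


# Made-up rows standing in for production data.
customers = [
    Customer(1, "gold", "EMEA", 1500.0),
    Customer(2, "gold", "EMEA", 800.0),     # spends too little
    Customer(3, "silver", "EMEA", 2000.0),  # wrong tier
    Customer(4, "gold", "APAC", 3000.0),    # wrong region
]

vip_subset = select_vip_subset(customers, region="EMEA")
print([c.customer_id for c in vip_subset])  # prints [1]
```

Only the rows that match all three criteria reach the test environment, which is what keeps the subset small and relevant.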

Benefits of Data Subsetting

Data subsetting provides 4 major benefits to enterprises trying to optimize their test data management strategy:

- Enhanced data privacy and security

  There’s less PII to deal with in a data subset, so compliance with data protection regulations is quicker and easier.

- Lower infrastructure and storage costs

  Smaller datasets require less storage space, saving costs on hardware and maintenance.

- Quicker test data provisioning

  With the right test data management software, creating subsets is typically a lot faster than replicating entire databases, so test environments can be provisioned more rapidly.

- Improved data relevance

  By concentrating on data relevance, data teams benefit from more effective testing and fewer defects.

Data Subsetting Techniques

Data subsetting involves 3 basic techniques, appropriate for different scenarios:

-

Column-level subsetting selects specific columns or attributes from tables, without including any sensitive or unnecessary data. It ensures the accuracy, consistency, and integrity of data within individual columns of a database or dataset. It’s widely used in database migration projects, data transformation processes, and data warehouses, where it locates missing values, mismatched data types, and outliers specific to each column.

-

Row-level subsetting filters rows based on specific criteria such as dates, ID numbers, or other business rules. It’s used in scenarios where the accuracy of individual records (or rows within a dataset) needs to be verified – for example, in data integration, data quality assessment, and data validation processes. It helps identify anomalies, errors, or inconsistencies that may have occured at the record level.

-

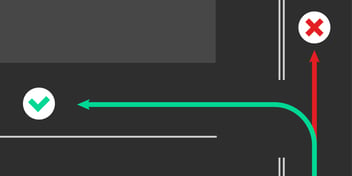

Referential integrity preservation ensures that the relationships and dependencies between tables are maintained, in order to create the most representative subset of data possible. It validates that the data subset behaves correctly in relation to the database schema and is commonly used in application testing, database migration testing, and data warehouse testing.

For example, take a software application that tracks customer orders, where the app sources its data from 2 databases: Customers and Orders. The Customers DB has a primary key called CustomerID, while the Orders DB has a foreign key also called CustomerID – designed to reference the specific CustomerID in the Customers DB.

For the app to work, every order must reference a corresponding customer in the Customers DB. If it can’t, the app can’t create an order. Referential integrity ensures that the relationship between the databases is always maintained.
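The three techniques can be combined in one small sketch. It uses Python's built-in `sqlite3` module, and for brevity it models the Customers and Orders databases as two tables in a single in-memory database. The extra columns (`name`, `email`, `region`, `total`), the `sub_customers` and `sub_orders` table names, and the sample rows are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        CustomerID INTEGER PRIMARY KEY,
        name   TEXT,
        email  TEXT,   -- sensitive column, left out of the subset
        region TEXT
    );
    CREATE TABLE orders (
        OrderID    INTEGER PRIMARY KEY,
        CustomerID INTEGER REFERENCES customers(CustomerID),
        total      REAL
    );
    INSERT INTO customers VALUES
        (1, 'Ana', 'ana@example.com', 'EMEA'),
        (2, 'Bo',  'bo@example.com',  'APAC'),
        (3, 'Cy',  'cy@example.com',  'EMEA');
    INSERT INTO orders VALUES
        (10, 1, 120.0), (11, 2, 75.5), (12, 3, 40.0), (13, 1, 9.99);

    -- Column-level and row-level: non-sensitive columns of EMEA customers only.
    CREATE TABLE sub_customers AS
        SELECT CustomerID, name, region FROM customers WHERE region = 'EMEA';

    -- Referential integrity: keep only orders whose customer was selected.
    CREATE TABLE sub_orders AS
        SELECT o.* FROM orders o
        JOIN sub_customers c ON c.CustomerID = o.CustomerID;
""")

# Count orders in the subset that point at a customer missing from the subset.
orphans = conn.execute("""
    SELECT COUNT(*) FROM sub_orders o
    LEFT JOIN sub_customers c ON c.CustomerID = o.CustomerID
    WHERE c.CustomerID IS NULL
""").fetchone()[0]

subset_order_ids = [
    row[0] for row in conn.execute("SELECT OrderID FROM sub_orders ORDER BY OrderID")
]
print(subset_order_ids, orphans)  # prints [10, 12, 13] 0
```

Selecting the parent rows first and then pulling only the child rows that reference them is one common way to avoid orphaned orders. Real schemas with many tables usually need the same walk repeated along every foreign key.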

Data Subsetting Challenges

While data subsetting provides significant advantages, it also has its challenges:

- Large volumes of diverse data

  Subsetting and unifying data from many different databases (e.g., billing, invoicing, and payments) requires careful planning to avoid data inconsistencies and performance issues.

- Data consistency

  Ensuring that the data subset accurately represents the entire dataset is crucial for effective testing.

- Complex data relationships

  Enforcing referential integrity can be difficult when working with data subsets with intricate relationships between multiple systems.
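One rough way to check whether a subset represents the full dataset is to compare how often each category appears in both. The sketch below does this for a single categorical attribute. The function names, the customer tiers, and the sample counts are assumptions chosen for illustration, and a real check would likely cover several attributes.

```python
from collections import Counter


def proportions(values):
    """Map each distinct value to its share of the total."""
    counts = Counter(values)
    total = sum(counts.values())
    return {key: n / total for key, n in counts.items()}


def max_proportion_gap(full, subset):
    """Largest absolute difference in category share between full data and subset."""
    p_full, p_sub = proportions(full), proportions(subset)
    keys = set(p_full) | set(p_sub)
    return max((abs(p_full.get(k, 0.0) - p_sub.get(k, 0.0)) for k in keys), default=0.0)


# Made-up tier columns: the full dataset, a proportional subset, and a skewed one.
full_tiers = ["gold"] * 20 + ["silver"] * 30 + ["bronze"] * 50
balanced_subset = ["gold"] * 2 + ["silver"] * 3 + ["bronze"] * 5
skewed_subset = ["gold"] * 8 + ["silver"] * 1 + ["bronze"] * 1

balanced_gap = max_proportion_gap(full_tiers, balanced_subset)
skewed_gap = max_proportion_gap(full_tiers, skewed_subset)
print(balanced_gap, round(skewed_gap, 2))
```

A gap near zero suggests the subset mirrors the full data for that attribute, while a large gap (as in the skewed subset, where gold customers are heavily over-represented) is a sign the subset should be redrawn. The threshold for "large" is a judgment call for each team.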

Best Practices for Data Subsetting

To address these challenges, follow the best practices listed below:

- Define requirements

  Testers must clearly identify the type of data they need.

- Ensure compliance

  Implement data masking, synthetic data generation, or data tokenization tools to protect personal information.

- Automate the process

  Use specialized tools (ideally ones that support a shift-left testing approach) to automate the subsetting process and reduce the risk of errors.

- Work together

  Close collaboration between development and testing teams helps ensure successful subsetting.
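As an illustration of the compliance step, the sketch below replaces an email address with a keyed, deterministic token using Python's standard `hmac` and `hashlib` modules. Because the same input always yields the same token, records in different tables still join after masking. This is keyed pseudonymization, a simplified stand-in for a dedicated tokenization tool. The key, the `tok_` prefix, the field names, and the sample rows are all assumptions.

```python
import hashlib
import hmac

# Hypothetical demo key; a real key would come from a secrets manager.
SECRET_KEY = b"demo-only-key"


def tokenize(value: str) -> str:
    """Return a deterministic, non-reversible token for `value`."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


# Made-up subset rows that share an email address as their link.
customers = [{"email": "ana@example.com", "region": "EMEA"}]
orders = [{"customer_email": "ana@example.com", "total": 120.0}]

masked_customers = [{**c, "email": tokenize(c["email"])} for c in customers]
masked_orders = [{**o, "customer_email": tokenize(o["customer_email"])} for o in orders]

# The PII is gone, but the customer and the order still match on the token.
print(masked_customers[0]["email"] == masked_orders[0]["customer_email"])  # prints True
```

Masking after subsetting means fewer values to protect, which is part of why the two techniques are often used together.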

Data Subsetting by Business Entity

An entity-based test data management approach to data subsetting selects and extracts data from any number of source systems based on specific business entities, such as customers, products, or orders.
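The idea can be sketched in a few lines of Python: given one business entity (here, a customer ID), gather every record that belongs to it from each source system. This is only an illustration of the concept, not how any particular product implements it. The `extract_entity` function, the source names, and the record fields are assumptions.

```python
def extract_entity(customer_id, sources):
    """Collect all records for one customer from every source system."""
    return {
        name: [row for row in rows if row["customer_id"] == customer_id]
        for name, rows in sources.items()
    }


# Made-up source systems, each holding rows keyed by customer_id.
sources = {
    "billing": [
        {"customer_id": 1, "plan": "pro"},
        {"customer_id": 2, "plan": "basic"},
    ],
    "invoicing": [
        {"customer_id": 1, "invoice": "INV-7"},
        {"customer_id": 1, "invoice": "INV-9"},
    ],
    "payments": [
        {"customer_id": 2, "amount": 30.0},
    ],
}

entity = extract_entity(1, sources)
print({name: len(rows) for name, rows in entity.items()})
# prints {'billing': 1, 'invoicing': 2, 'payments': 0}
```

Because the subset is assembled per entity, everything related to the chosen customers travels together, which helps keep relationships across systems intact.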

Entity-based data subsetting is built into K2view test data management tools that also feature:

- Multi-source data extraction

  Move test data from any source to any target.

- Data masking or tokenization

  Protect PII/PHI while maintaining referential integrity.

- Synthetic data generation

  Provision realistic fake data to support functional testing, negative testing, and more.

- Data subset reservation

  Reserve data subsets to prevent testers from overriding each other’s data.

- Data versioning

  Roll back test data subsets to support regression testing (i.e., re-running tests using the same data).

- Role-based access control

  Manage user access with a multi-layer security portal.

- Data service APIs

  Integrate test data into your DevOps CI/CD pipelines to accelerate software delivery.

The result is greater test data automation, leading to faster testing cycles, better software quality, and quicker time-to-market.

Learn more about entity-based test data management.